AI Therapy: Balancing Benefits and Surveillance Risks in a Police State

The Potential Benefits of AI Therapy

AI therapy, while controversial in some contexts, could improve both access to mental healthcare and its effectiveness. Its potential benefits are most compelling when set against the limitations of traditional therapy models, such as geographic gaps in provision, high costs, and limited hours.

Accessibility and Affordability

AI-powered therapy platforms can overcome geographical barriers and reduce the cost of mental healthcare, making it accessible to underserved populations. This democratization of mental health services is crucial, especially in regions lacking sufficient mental health professionals.

- Increased access in rural areas: AI therapy can reach individuals in remote locations with limited access to traditional therapists.

- Lower cost compared to traditional therapy: AI-based platforms often have lower overhead costs, translating to lower fees for patients.

- 24/7 availability: Unlike human therapists, an AI platform can respond at any hour, offering immediate support when it is needed.

This increased accessibility can significantly improve mental health outcomes, particularly for those who might otherwise lack the resources or opportunity to seek professional help. The potential for reducing the global mental health treatment gap is substantial.

Personalized Treatment Plans

AI algorithms can analyze user data (including text, speech patterns, and responses to assessments) to create personalized treatment plans that tailor interventions to individual needs and preferences. This precision-medicine approach could offer real advantages over traditional "one-size-fits-all" methods.

- Data-driven insights for treatment optimization: AI may help identify which treatment strategies are likely to work best for a given person, based on their individual characteristics and response patterns.

- Improved adherence to treatment plans: Personalized plans can increase patient engagement and motivation, leading to better adherence to treatment protocols.

- Faster recovery times: By optimizing treatment plans, AI can potentially accelerate the recovery process and improve overall outcomes.

This personalized approach can lead to more effective and efficient therapy, maximizing positive outcomes and minimizing the time spent struggling with mental health challenges.

Early Detection and Prevention

AI's ability to process large datasets allows it to identify patterns and risk factors for mental health issues, potentially enabling early intervention and prevention strategies. This proactive approach can be crucial in preventing the development of more severe conditions.

- Analysis of user text/speech for early warning signs: AI can detect subtle changes in language or communication patterns that might indicate developing mental health problems.

- Proactive identification of at-risk individuals: Through data analysis, AI can identify individuals who may be at increased risk of developing mental health issues and offer preventative interventions.

- Development of preventative measures: By studying patterns and risk factors, AI can help researchers and clinicians develop more effective preventative measures.

By acting proactively, AI can help mitigate the onset of more severe mental health problems, improving the long-term well-being of individuals and reducing the overall burden on mental health systems.

Surveillance Risks of AI Therapy in a Police State

While AI therapy offers many potential benefits, its deployment in a police state raises serious ethical and practical concerns regarding surveillance and potential abuse. The same data used for personalized treatment can be weaponized for oppression.

Data Privacy and Security

User data collected by AI therapy platforms becomes exceptionally vulnerable to exploitation in a police state, potentially leading to profiling, discrimination, and repression. The sensitive nature of mental health data makes it a prime target for abuse.

- Risk of data breaches: AI platforms, like any digital system, are vulnerable to hacking and data breaches, exposing sensitive personal information.

- Potential for misuse of sensitive information by authorities: Government agencies could use this data for surveillance, targeting individuals based on their mental health status or perceived political views.

- Lack of robust data protection laws: In authoritarian regimes, weak or non-existent data protection laws offer little protection against state-sponsored data misuse.

Without such legal frameworks, individuals have little recourse when their data is breached or misused.

Psychological Manipulation and Control

AI algorithms could be used to manipulate users, influencing their thoughts, beliefs, and behaviors for political purposes. This represents a significant threat to individual autonomy and freedom of thought.

- Targeted propaganda via chatbots: AI-powered chatbots could be used to deliver subtle or overt political propaganda, shaping user opinions and beliefs.

- Subtle manipulation of therapeutic interventions: Interventions could be quietly altered to reinforce officially desired behaviors or beliefs, undermining individual autonomy.

- Potential for gaslighting and psychological coercion: AI could be used to systematically undermine a user's sense of reality and self-worth, making them more susceptible to manipulation.

Such manipulation would endanger users' mental and emotional well-being as well as their fundamental human rights.

Lack of Transparency and Accountability

The opacity of many AI algorithms creates a "black box" effect, making it difficult to understand how decisions are made. This lack of transparency hinders accountability and increases the risk of biased or unfair outcomes.

- "Black box" nature of AI: The complex algorithms used in AI therapy can be difficult to decipher, making it challenging to understand how they reach their conclusions.

- Difficulty in identifying algorithmic biases: AI algorithms can inherit and amplify existing biases in the data they are trained on, leading to discriminatory or unfair outcomes.

- Limited opportunities for redress: The lack of transparency makes it challenging to identify and correct errors or biases, leaving individuals with limited recourse if they feel they have been unfairly treated.

In a police state, this opacity undermines trust and accountability, creating a climate of fear and uncertainty around the use of AI therapy.

Conclusion

AI therapy holds considerable promise for making mental healthcare more accessible and effective. Its deployment in police states, however, presents significant ethical and practical challenges. The potential for surveillance, manipulation, and the erosion of individual privacy calls for strong data protection, algorithmic transparency, and robust regulatory frameworks. We must prioritize human rights and individual liberties when developing and implementing AI therapy, so that this powerful technology is used to enhance well-being, not to suppress it. Only through careful ethical deliberation and robust safeguards can we realize the benefits of AI therapy while containing its considerable risks where state surveillance is a significant concern. Responsible development and deployment, with real protections for vulnerable populations, should ensure that the future of AI therapy puts human well-being first.

Featured Posts

-

Lindungi Warga Pesisir Dpr Usul Pembangunan Tembok Laut Raksasa

May 16, 2025

Lindungi Warga Pesisir Dpr Usul Pembangunan Tembok Laut Raksasa

May 16, 2025 -

Celtics Game 3 Starting Guard Absence Vs Orlando Magic

May 16, 2025

Celtics Game 3 Starting Guard Absence Vs Orlando Magic

May 16, 2025 -

How To Watch Celtics Vs Magic Nba Playoffs Game 1 Time Tv Channel And Live Stream

May 16, 2025

How To Watch Celtics Vs Magic Nba Playoffs Game 1 Time Tv Channel And Live Stream

May 16, 2025 -

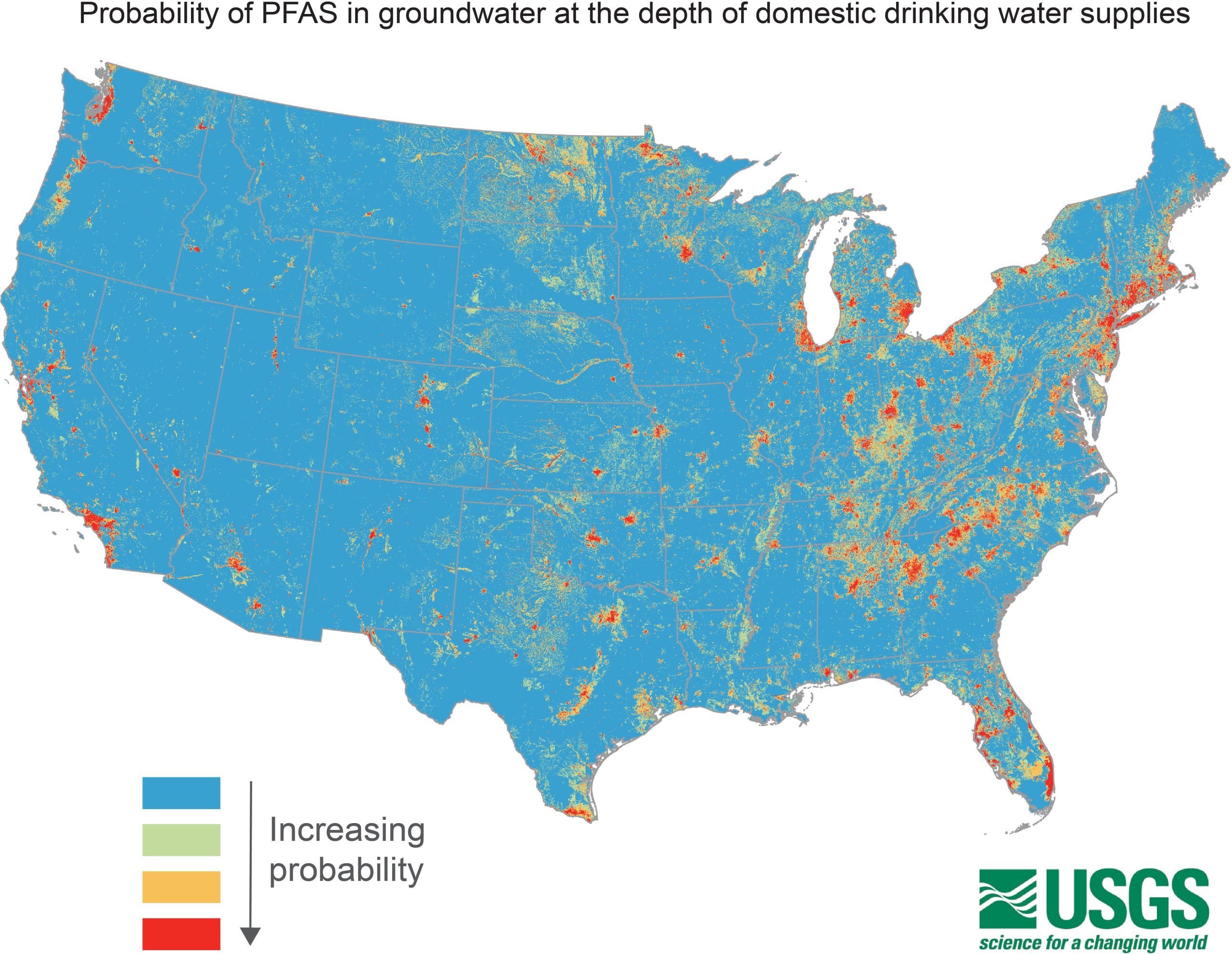

New Report Millions Of Americans Exposed To Contaminated Drinking Water Sources

May 16, 2025

New Report Millions Of Americans Exposed To Contaminated Drinking Water Sources

May 16, 2025 -

Proyek Strategis Nasional Melibatkan Swasta Dalam Pembangunan Giant Sea Wall

May 16, 2025

Proyek Strategis Nasional Melibatkan Swasta Dalam Pembangunan Giant Sea Wall

May 16, 2025