AI's Learning Constraints: Navigating The Ethical Challenges Of Artificial Intelligence

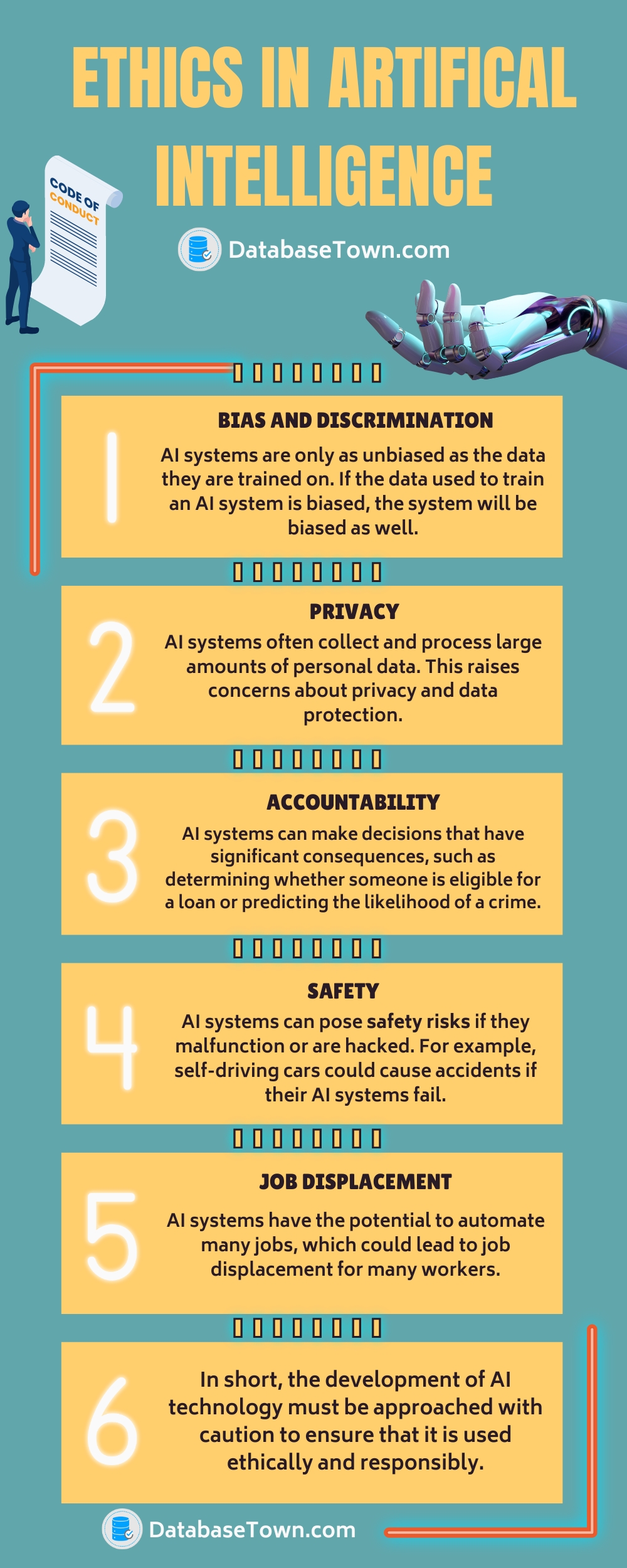

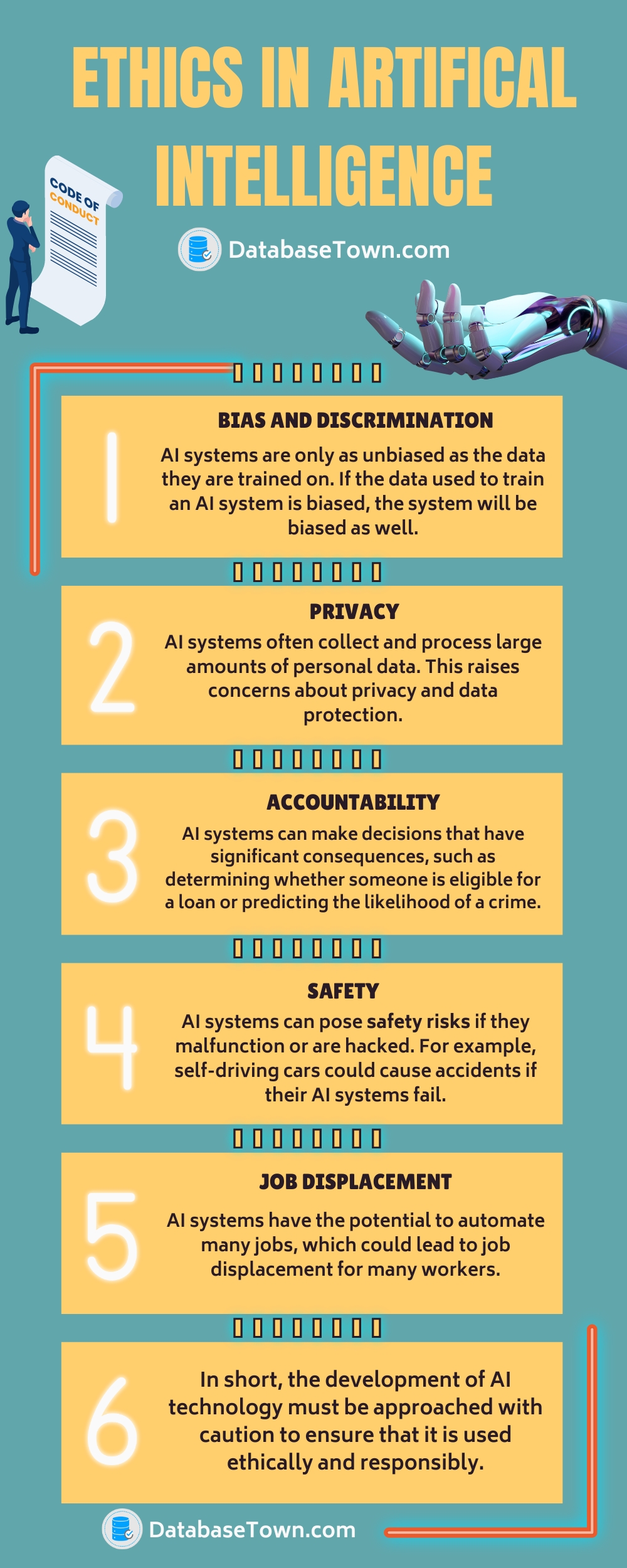

Data Bias and its Impact on AI Learning

AI systems learn from data. If that data reflects existing societal biases, the resulting system can perpetuate and even amplify them, which makes data bias one of the most critical of AI's learning constraints.

Sources of Bias in Training Data

Biased data leads to biased AI outputs. This bias can stem from various sources:

- Representational Bias: Datasets may underrepresent certain demographics (e.g., gender, race, socioeconomic status), leading to AI systems that perform poorly or unfairly for underrepresented groups. For example, facial recognition systems trained primarily on images of white faces often perform less accurately on people of color.

- Algorithmic Bias: Even with representative data, the algorithms themselves can introduce bias through design choices such as the objective being optimized or the proxy variables being used. For instance, an algorithm designed to predict recidivism might use prior arrests as a stand-in for reoffending, and so inadvertently discriminate against certain racial groups if arrest records reflect biased policing practices.

- Measurement Bias: The way data is collected and measured can also introduce bias. For example, surveys that use leading questions or rely on self-reporting can skew results, and a model trained on those results inherits the distortion. Because the error is built into the recorded values themselves, collecting more data in the same way does not remove it.

Addressing data bias requires careful data curation, algorithmic auditing, and the development of fair AI practices. This includes actively seeking diverse and representative datasets and employing techniques like data augmentation to balance underrepresented groups. Responsible AI development necessitates a proactive approach to identifying and mitigating these biases.
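One simple way to balance underrepresented groups, alongside collecting or augmenting data, is to reweight the rows that already exist. The following is a minimal sketch, not a complete mitigation: the function names and the toy group labels are hypothetical, and it assumes each row carries a single group label.

```python
from collections import Counter

def group_shares(groups):
    """Return each group's share of the dataset as a fraction of all rows."""
    counts = Counter(groups)
    total = len(groups)
    return {g: n / total for g, n in counts.items()}

def balancing_weights(groups):
    """Give each row a weight so that every group contributes equally in total.

    weight = total_rows / (number_of_groups * rows_in_this_group)
    """
    counts = Counter(groups)
    total = len(groups)
    k = len(counts)
    return [total / (k * counts[g]) for g in groups]

# Hypothetical group labels for an 8-row training set (assumption for illustration).
groups = ["a", "a", "a", "a", "a", "a", "b", "b"]

shares = group_shares(groups)        # how skewed is the data?
weights = balancing_weights(groups)  # per-row weights that even it out

print(shares)
print(weights)
```

Many training routines accept per-row weights like these, so the underrepresented group counts as much as the majority group during fitting. Reweighting does not add new information about the smaller group; it only changes how much the existing rows matter.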

Consequences of Biased AI Systems

Deploying biased AI systems can have severe real-world consequences across various sectors:

- Hiring and Recruitment: Biased AI in recruitment tools can lead to discriminatory hiring practices, excluding qualified candidates from underrepresented groups.

- Loan Applications: Biased AI in credit scoring can deny loans to individuals from marginalized communities, perpetuating economic inequality.

- Criminal Justice: Biased AI in predictive policing or risk assessment can lead to unfair targeting and sentencing of certain demographics, exacerbating existing injustices and undermining the fairness of the justice system. Accountability for algorithmic decisions becomes paramount in these contexts.

The consequences of biased AI extend beyond individual injustices; they contribute to the perpetuation of societal inequalities and erode public trust in AI systems. Addressing algorithmic discrimination requires a multi-faceted approach that includes rigorous testing, ongoing monitoring, and mechanisms for redress.
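As one illustration of what rigorous testing and ongoing monitoring can look like, the sketch below compares how often each group receives a favorable decision. The data is made up, the function names are hypothetical, and the 0.8 threshold is a commonly cited rule of thumb (the "four-fifths rule") rather than a definitive test of fairness.

```python
def selection_rates(decisions, groups):
    """Fraction of favorable decisions (1 = favorable, 0 = not) per group."""
    totals, favorable = {}, {}
    for d, g in zip(decisions, groups):
        totals[g] = totals.get(g, 0) + 1
        favorable[g] = favorable.get(g, 0) + d
    return {g: favorable[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so all rates are identical
    return min(rates.values()) / highest

# Hypothetical decisions from a screening tool (assumption for illustration).
decisions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "a", "b", "b", "b", "b", "b"]

rates = selection_rates(decisions, groups)
ratio = disparate_impact_ratio(rates)

THRESHOLD = 0.8  # rule-of-thumb cutoff; an assumption, not a legal standard here
flagged = ratio < THRESHOLD
print(rates, ratio, flagged)
```

Running a check like this on every model release, and again on live decisions, turns "ongoing monitoring" into a concrete alert. A low ratio is a signal to investigate, not proof of discrimination on its own.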

The Problem of Explainability and Transparency in AI

Another significant aspect of AI's learning constraints is the lack of transparency and explainability in many AI systems, particularly deep learning models.

The "Black Box" Problem

Many complex AI models function as "black boxes": their decision-making processes are opaque, making it difficult to understand how they arrive at their conclusions. This lack of transparency poses significant challenges:

- Difficulty in Identifying and Correcting Errors: It's hard to debug a system when you don't understand its internal workings. Errors can go undetected and have serious consequences.

- Implications for Accountability: When an AI system makes a harmful decision, it's difficult to assign responsibility if the decision-making process is not transparent.

This lack of interpretability directly fuels concerns about whether AI systems can be trusted.

The Need for Explainable AI

To address these challenges, there's a growing emphasis on developing explainable AI (XAI) and interpretable AI. XAI focuses on creating AI systems whose decision-making processes are transparent and understandable:

- Benefits for Debugging: Explainable AI makes it easier to identify and correct errors in the system.

- Benefits for Auditing: Transparency allows for independent audits to ensure fairness and compliance with regulations.

- Benefits for Ensuring Fairness and Accountability: Understanding how an AI system made a decision allows for better assessment of its fairness and accountability. Model interpretability is crucial for building trust and ensuring responsible use.
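To make the idea of explaining an opaque model more concrete, here is a minimal sketch of permutation importance, one model-agnostic technique among many. It shuffles one input feature at a time and measures how much accuracy drops. The toy model, the data, and all function names are hypothetical stand-ins, not a reference implementation.

```python
import random

def accuracy(model, rows, labels):
    """Share of rows where the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, n_features, repeats=20, seed=0):
    """Mean drop in accuracy when a single feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # break the link between feature j and the label
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances

# Hypothetical stand-in for an opaque model: only feature 0 drives the output.
def toy_model(row):
    return 1 if row[0] > 0.5 else 0

data_rng = random.Random(1)
rows = [(data_rng.random(), data_rng.random()) for _ in range(200)]
labels = [toy_model(r) for r in rows]

scores = permutation_importance(toy_model, rows, labels, n_features=2)
print(scores)  # feature 0 should score well above feature 1
```

The technique treats the model purely as a function from inputs to outputs, so it applies even when the internals cannot be inspected. An auditor could use the same approach to check whether a sensitive attribute, or a close proxy for one, is driving decisions.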

Addressing AI's Learning Constraints Through Ethical Frameworks

Overcoming AI's learning constraints requires a proactive and multi-pronged approach centered on ethical frameworks and responsible AI development.

Developing Ethical Guidelines and Regulations

Clear ethical guidelines and regulations are needed to govern the development and deployment of AI systems:

- Existing Ethical Frameworks: Organizations such as the OECD have published principles for AI (the OECD AI Principles, adopted in 2019), providing guidance on responsible AI development.

- Role of Regulatory Bodies: Regulatory bodies play a crucial role in enforcing ethical standards and ensuring accountability.

- Industry Self-Regulation: Industry players also have a responsibility to establish internal ethical guidelines and best practices. AI governance requires a collaborative effort between stakeholders.

Effective AI regulation must balance innovation with the need to protect individuals and society.

Promoting Responsible AI Research and Development

Prioritizing ethical considerations in AI research and development is paramount:

- Role of Researchers and Developers: Researchers and developers have a responsibility to design and build AI systems that are fair, transparent, and accountable.

- Role of Policymakers: Policymakers need to create an enabling environment for responsible AI innovation while mitigating potential risks. Human-centered AI design should be at the forefront of all developments.

Responsible innovation in AI requires a commitment to ethical AI principles throughout the entire lifecycle of an AI system, from design and development to deployment and monitoring.

Conclusion

AI's learning constraints present significant ethical challenges: data bias, the lack of explainability in "black box" systems, and the need for robust ethical frameworks. Addressing them is crucial for the responsible and beneficial development of AI. By understanding and actively addressing these constraints, we can harness the transformative power of AI while mitigating its risks and working toward a more equitable and just future. Learn more about AI ethics, and take part in discussions and initiatives that promote responsible AI development.
