AI's Learning Limitations: Responsible Development And Deployment Strategies

Data Bias and its Impact on AI Learning

AI models learn from the data they are trained on. If that data reflects existing societal biases (gender, racial, socioeconomic, and so on), the system will likely reproduce those biases in its outputs and can even amplify them.

The Problem of Biased Datasets

The quality and representativeness of training data are paramount. Biased datasets lead to biased AI.

- Example: A facial recognition system trained primarily on images of white faces may perform poorly on images of people with darker skin tones. This inaccuracy can have serious consequences in areas like law enforcement and security.

- Example: A loan application AI trained on historical data that reflects past lending biases might unfairly deny loans to applicants from certain demographic groups.

- Mitigation: Careful data curation is essential. This includes:

  - Employing diverse and representative datasets so that all relevant groups are adequately represented.

  - Running bias detection checks during development to identify and address potential biases in the data before they reach the model.

  - Using techniques like data augmentation to increase the diversity and size of the dataset, bearing in mind that augmentation cannot supply groups that are missing from the data altogether.
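One simple form a bias detection check might take is a comparison between each group's share of the dataset and the share expected in the population the system will serve. The sketch below is only an illustration: the group names, the reference shares, and the 5-point tolerance are all assumptions, not values from any real dataset.

```python
from collections import Counter

def representation_gaps(groups, reference_shares):
    """Return {group: dataset_share - reference_share}.

    Negative values mean the group is under-represented relative to the
    reference population.
    """
    counts = Counter(groups)
    total = len(groups)
    return {
        g: counts.get(g, 0) / total - ref
        for g, ref in reference_shares.items()
    }

def underrepresented(groups, reference_shares, tolerance=0.05):
    """List groups whose dataset share falls short by more than `tolerance`."""
    gaps = representation_gaps(groups, reference_shares)
    return sorted(g for g, gap in gaps.items() if gap < -tolerance)

# Hypothetical group labels attached to 1,000 training images.
groups = ["group_a"] * 850 + ["group_b"] * 150
# Hypothetical shares in the population the system is meant to serve.
reference = {"group_a": 0.6, "group_b": 0.4}

gaps = representation_gaps(groups, reference)
flagged = underrepresented(groups, reference)
print(gaps, flagged)
```

A check like this only catches representation gaps for attributes that are actually recorded; it says nothing about label quality or about groups nobody thought to track.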

Addressing Bias Through Algorithmic Fairness

Curated data is only part of the answer; algorithms can also be designed explicitly to mitigate bias. This involves fairness-aware machine learning techniques, which intervene before, during, or after training, together with algorithmic accountability practices that keep the resulting decisions open to review.

- Methods:

  - Pre-processing techniques: Adjusting skewed data before training the model.

  - In-processing techniques: Modifying the learning algorithm itself to be less sensitive to biases.

  - Post-processing techniques: Adjusting model outputs to ensure fairness after the model is trained.

- Challenge: Defining and measuring fairness is complex and context-dependent. There is no single definition of "fairness" that applies universally, and common statistical criteria generally cannot all be satisfied at the same time.
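To make the pre-processing idea and the measurement problem concrete, here is a minimal sketch of two pieces: a demographic parity gap (the difference in positive-outcome rates between groups, one of several possible fairness measures) and a reweighing scheme that assigns each training example the weight P(group) · P(label) / P(group, label). The group names and toy labels are assumptions chosen so the arithmetic is easy to follow.

```python
from collections import Counter

def demographic_parity_gap(groups, outcomes):
    """Largest difference in positive-outcome rate between any two groups."""
    totals, positives = Counter(), Counter()
    for g, y in zip(groups, outcomes):
        totals[g] += 1
        positives[g] += int(y)
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)

def reweighing_weights(groups, labels):
    """Weight for each (group, label) pair: P(group) * P(label) / P(group, label).

    Under these weights, group membership and label are statistically
    independent in the training set.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return {
        (g, y): (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for (g, y) in joint_counts
    }

# Hypothetical historical loan decisions (1 = approved).
groups = ["A"] * 80 + ["B"] * 20
labels = [1] * 60 + [0] * 20 + [1] * 5 + [0] * 15

gap = demographic_parity_gap(groups, labels)   # approval-rate gap in the raw data
weights = reweighing_weights(groups, labels)   # per-example training weights
print(gap, weights)
```

The weights would then be passed to a learner that supports per-example weighting. Whether demographic parity is the right target at all depends on the application, which is exactly the challenge noted above.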

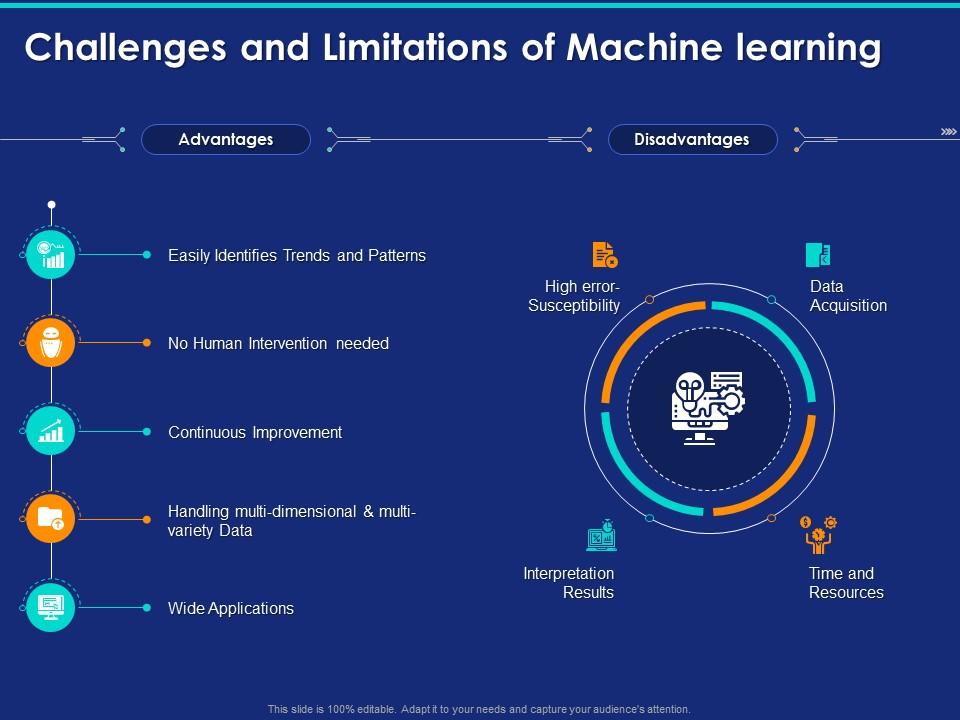

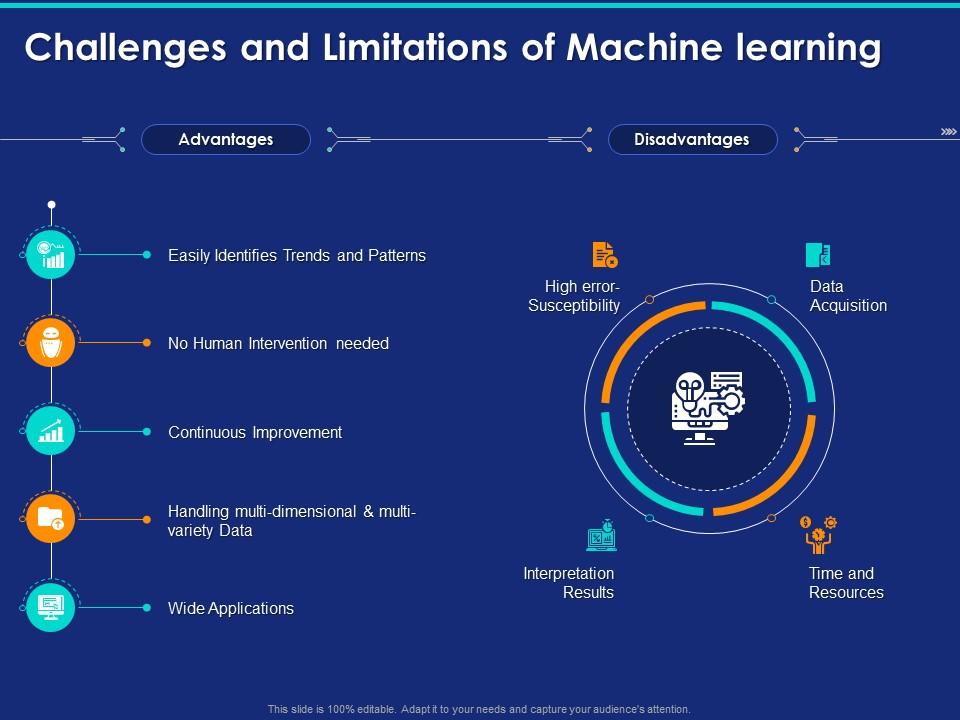

The Limits of Generalization and Transfer Learning

AI models often struggle to generalize to new, unseen data, especially when that data differs from what they were trained on.

Overfitting and Underfitting

- Overfitting: The model performs exceptionally well on the training data but poorly on new data. It has essentially memorized the training set instead of learning underlying patterns.

- Underfitting: The model is too simplistic to capture the complexities of the data, leading to poor performance on both training and new data.

- Mitigation: Cross-validation helps detect both problems by measuring performance on held-out data. Regularization (L1 and L2) and dropout reduce overfitting, while careful model selection and hyperparameter tuning help match model capacity to the data, which guards against underfitting as well.

- Example: A model trained to identify cats in one specific setting (e.g., a brightly lit room) might fail to recognize cats in a dimly lit room, or cats of a breed it has never seen.
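The gap between training error and held-out error is the usual way to spot overfitting. The sketch below uses a small k-nearest-neighbour regressor as a stand-in model (an assumption made to keep the example dependency-free) and compares its training error with a 5-fold cross-validated error on synthetic noisy data.

```python
import math
import random

def knn_predict(train, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / len(nearest)

def mse(train, test, k):
    """Mean squared error of k-NN predictions on `test`."""
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in test) / len(test)

def cross_val_mse(data, k, folds=5):
    """Average validation error over `folds` disjoint held-out subsets."""
    scores = []
    for i in range(folds):
        held_out = data[i::folds]
        train = [p for j, p in enumerate(data) if j % folds != i]
        scores.append(mse(train, held_out, k))
    return sum(scores) / folds

# Hypothetical noisy data: y = sin(x) plus Gaussian noise.
rng = random.Random(0)
data = []
for _ in range(200):
    x = rng.uniform(0.0, 2.0 * math.pi)
    data.append((x, math.sin(x) + rng.gauss(0.0, 0.3)))

# For each k, record (training error, cross-validated error).
results = {k: (mse(data, data, k), cross_val_mse(data, k)) for k in (1, 15)}
for k, (train_err, val_err) in results.items():
    print(f"k={k:2d}  train MSE={train_err:.3f}  validation MSE={val_err:.3f}")
```

With k=1 the training error is zero because every point is its own nearest neighbour, yet the cross-validated error is not; the model has memorized the noise. A larger k typically narrows that gap, and a k that is too large would push both errors up, which is underfitting.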

Challenges in Transfer Learning

Transfer learning aims to apply knowledge learned in one domain to another, potentially saving time and resources. However, it's not a silver bullet.

- Challenge: The effectiveness of transfer learning depends heavily on the similarity between the source and target domains. Transferring knowledge from identifying cats to identifying dogs might be relatively easy, because the two tasks share many low-level visual features. Transferring knowledge from identifying cats to diagnosing medical images is far more challenging and usually requires substantial fine-tuning on data from the target domain.

Explainability and Interpretability in AI

Many advanced AI models, particularly deep learning models, are notoriously difficult to interpret, posing significant ethical and practical challenges.

The "Black Box" Problem

The lack of transparency in complex AI models makes it hard to understand their decision-making processes. This "black box" problem hinders debugging, trust, and accountability.

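- Mitigation: Developing explainable AI (XAI) techniques is crucial. These techniques aim to make AI models more transparent and understandable. Examples include LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations), both of which attribute an individual prediction to the input features that drove it.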

- Example: Understanding why a loan application was rejected by an AI system is essential for both the applicant and the lender. Without explainability, unfair or erroneous decisions may go unnoticed.
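LIME and SHAP are full libraries, so the sketch below illustrates the general idea with a simpler model-agnostic technique, permutation importance: shuffle one feature at a time and measure how much accuracy drops. The "opaque" model, the feature layout (income, then an unrelated value), and the threshold of 50 are hypothetical stand-ins.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows for which the model's output matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, n_features, seed=0):
    """Drop in accuracy when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the label
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(baseline - accuracy(model, shuffled, labels))
    return importances

# Hypothetical stand-in for an opaque loan model: it approves when
# feature 0 (income) exceeds 50 and ignores feature 1 entirely.
def opaque_model(row):
    return row[0] > 50

data_rng = random.Random(1)
rows = [(data_rng.uniform(0, 100), data_rng.uniform(0, 100)) for _ in range(500)]
labels = [opaque_model(r) for r in rows]

importances = permutation_importance(opaque_model, rows, labels, n_features=2)
print(importances)
```

Shuffling the ignored feature leaves accuracy unchanged, while shuffling income degrades it sharply, which is the kind of signal a lender could use to check what a model actually relies on. Permutation importance gives a global picture; explaining one specific rejection calls for a local method such as LIME or SHAP.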

The Importance of Explainable AI (XAI) for Trust and Accountability

Explainable AI is essential for building trust in AI systems and ensuring accountability for their actions. When the reasons behind a decision are visible, errors and biases can be identified and corrected instead of being silently repeated.

Ensuring Data Security and Privacy in AI Development

AI systems often rely on large amounts of sensitive data, making them vulnerable to data breaches and privacy violations. Because a model can only be as trustworthy as the data it learns from, the way that data is stored, shared, and protected belongs in any discussion of responsible development.

Data Breaches and Security Risks

Robust security measures are crucial to protect data used in AI development and deployment.

- Mitigation: Employing strong encryption, access control mechanisms, and regular security audits is vital. Data anonymization techniques and differential privacy methods can also help protect sensitive information.
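As an illustration of differential privacy, the sketch below applies the Laplace mechanism to a counting query: noise with scale 1/epsilon is added to the true count, since adding or removing one person changes a count by at most 1. The ages, the threshold of 65, and the epsilon value are hypothetical, and a production system would use a vetted library instead of hand-rolled sampling.

```python
import random

def laplace_noise(scale, rng):
    """Zero-mean Laplace sample: the difference of two i.i.d. exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(values, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy.

    A count has sensitivity 1 (one record changes it by at most 1),
    so the Laplace noise scale is 1 / epsilon.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical sensitive attribute: ages of ten individuals.
ages = [23, 35, 41, 52, 67, 70, 29, 88, 64, 71]

rng = random.Random(42)
noisy = private_count(ages, lambda a: a >= 65, epsilon=0.5, rng=rng)
print(f"noisy count of people aged 65+: {noisy:.2f}")
```

A smaller epsilon means more noise and stronger privacy; a larger epsilon means a more accurate answer and a weaker guarantee. Repeated queries consume privacy budget, so the total epsilon across all releases is what matters in practice.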

Ethical Considerations and Data Governance

Developing ethical guidelines and governance frameworks for data usage is crucial to protect individual privacy and prevent misuse of AI systems. Governance of this kind makes it explicit who may use which data, for what purpose, and with what oversight.

Conclusion

Addressing AI's learning limitations requires a multi-faceted approach encompassing responsible data handling, algorithmic fairness, explainable AI, and robust security measures. By acknowledging these limitations and implementing appropriate strategies during development and deployment, we can build AI systems that are both effective and ethical, and that benefit society as a whole.
