Are Tech Companies Responsible When Algorithms Radicalize Mass Shooters?

The Role of Algorithmic Amplification in Radicalization

Algorithms, the invisible engines driving our online experiences, play a significant role in shaping how we consume information. However, their design can inadvertently contribute to the radicalization of vulnerable individuals.

Echo Chambers and Filter Bubbles

Social media algorithms, designed to maximize user engagement, often create echo chambers and filter bubbles. These personalized online environments predominantly expose users to information confirming their pre-existing beliefs, reinforcing extremist views and limiting exposure to diverse perspectives.

- Examples: Algorithms prioritizing content from like-minded sources, leading users down rabbit holes of extremist content.

- Studies: Research has linked echo chamber effects to the polarization of opinions, which may in turn contribute to radicalization, although the strength of that link is still debated.

- Recommendation Systems: The recommendation systems on platforms like YouTube, Facebook, and Twitter are frequently singled out in this regard, with critics arguing that they can steer users toward increasingly extreme content based on past viewing or engagement.

Targeted Advertising and the Spread of Misinformation

Targeted advertising, while beneficial for many businesses, can be exploited to spread propaganda and misinformation to individuals susceptible to extremist ideologies. This highly personalized approach allows malicious actors to reach vulnerable populations with tailored messages promoting violence.

- Examples: Targeted ads promoting hate speech, conspiracy theories, and extremist groups, often bypassing traditional content moderation systems.

- Regulation Challenges: Online advertising is decentralized, spanning many platforms, networks, and intermediaries, which makes it difficult for both tech companies and governments to police.

- Ethical Dilemmas: Tech companies grapple with the ethical implications of profiting from ads that may contribute to real-world harm.

The Difficulty of Content Moderation

Identifying and removing extremist content from massive online platforms is a monumental task. Current moderation strategies struggle to keep pace with the sheer volume of user-generated content and the rapid evolution of extremist tactics.

- Scale of the Problem: The vastness of online platforms makes comprehensive monitoring practically impossible, leaving gaps for extremist content to proliferate.

- Speed of Content Creation and Adaptation: New content appears continuously, and extremists constantly adapt their language and methods, making it challenging to develop effective detection mechanisms.

- Algorithmic Bias: Content moderation algorithms, trained on existing data, can inherit biases that may inadvertently lead to the removal of legitimate content while overlooking extremist material.

Legal and Ethical Responsibilities of Tech Companies

The legal and ethical landscape surrounding tech company responsibility in online radicalization is complex and rapidly evolving.

Section 230 and its Limitations

Section 230 of the Communications Decency Act in the US shields online platforms from most civil liability for user-generated content (other countries have their own, often narrower, intermediary-liability rules). However, its applicability in cases involving algorithmic amplification of extremist content is fiercely debated.

- Arguments for Reform: Many argue that Section 230 needs reform to hold tech companies more accountable for the content promoted by their algorithms.

- Debate on Immunity: The debate centers on the balance between protecting free speech and preventing the spread of harmful content.

- Need for Stricter Regulations: Proponents of reform argue that stricter regulation is necessary to address the specific challenges of algorithmic amplification of harmful ideologies.

Corporate Social Responsibility and Ethical Considerations

Beyond legal obligations, tech companies have an ethical duty to prevent their platforms from being used to radicalize individuals, even if not explicitly mandated by law.

- Proactive Measures: Examples include investing in improved content moderation, developing more ethical algorithm design principles, and partnering with anti-extremism organizations.

- Ethical Guidelines and Policies: Clear internal guidelines and policies regarding content moderation and algorithm design are crucial for fostering responsible practices.

- Transparency and Accountability: Openness about how companies moderate content and develop algorithms, along with accountability for the results, is essential for building public trust.

The Potential for Legal Precedent

The law on tech company liability in cases of algorithm-driven radicalization is still unsettled, and pending lawsuits and future legal precedents could significantly reshape the industry.

- Existing Lawsuits: Several lawsuits, including some brought by families of mass-shooting victims, seek to hold tech companies accountable for their platforms' alleged role in promoting extremist content.

- Future Litigation: Future litigation will likely focus on the specific design and implementation of algorithms, their contribution to radicalization, and the companies’ knowledge of such effects.

- Evolving Legal Landscape: The legal framework is dynamic, constantly adapting to technological advancements and evolving societal understanding of online responsibility.

Solutions and Mitigation Strategies

Addressing the complex issue of algorithm-driven radicalization requires a multi-pronged approach involving technological advancements, regulatory changes, and collaborative efforts.

Improved Algorithm Design and Transparency

Redesigning algorithms to minimize the risk of radicalization requires a shift towards prioritizing diverse content and fostering greater transparency in algorithmic decision-making.

- Alternative Recommendation Systems: Exploring alternatives to current recommendation systems, which prioritize engagement over responsible content curation.

- Prioritizing Diverse Content: Algorithms should be designed to expose users to a wider range of viewpoints, challenging echo chambers and filter bubbles.

- Explainable AI: Developing more explainable AI (XAI) techniques to increase transparency and allow for better oversight of algorithmic processes.
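To make the idea of prioritizing diverse content more concrete, here is a minimal Python sketch of a greedy re-ranker that discounts items whose viewpoint has already been shown. It is an illustration under stated assumptions, not a description of any platform's actual system: the `Item` fields, the `viewpoint` labels, the `penalty` value, and the sample feed are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    title: str
    engagement: float  # hypothetical predicted-engagement score in [0, 1]
    viewpoint: str     # hypothetical coarse viewpoint/source label


def rerank_for_diversity(items, k, penalty=0.3):
    """Greedily pick up to k items, discounting viewpoints already shown."""
    remaining = list(items)
    chosen = []
    seen_counts = {}  # viewpoint -> number of items already chosen
    while remaining and len(chosen) < k:
        # Adjusted score: engagement minus a penalty for every item
        # with the same viewpoint that is already in the feed.
        best = max(
            remaining,
            key=lambda it: it.engagement
            - penalty * seen_counts.get(it.viewpoint, 0),
        )
        chosen.append(best)
        remaining.remove(best)
        seen_counts[best.viewpoint] = seen_counts.get(best.viewpoint, 0) + 1
    return chosen


# Hypothetical feed: viewpoint "A" dominates on raw engagement.
feed = [
    Item("A1", 0.90, "A"),
    Item("A2", 0.85, "A"),
    Item("A3", 0.80, "A"),
    Item("B1", 0.70, "B"),
    Item("C1", 0.60, "C"),
]

by_engagement = [
    it.title for it in sorted(feed, key=lambda it: it.engagement, reverse=True)[:3]
]
top3 = [it.title for it in rerank_for_diversity(feed, k=3)]
print(by_engagement)  # ['A1', 'A2', 'A3']
print(top3)           # ['A1', 'B1', 'C1']
```

Ranking purely by engagement fills the top three slots with a single viewpoint, while the penalized ranking surfaces three different ones. The hard part in practice is the one this sketch assumes away: deciding what counts as a "viewpoint" and how strongly to trade engagement for variety.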

Enhanced Content Moderation Techniques

Improving content moderation requires a combination of advanced technological solutions and human oversight to effectively identify and remove harmful content.

- AI-Assisted Moderation: Leveraging AI to enhance the speed and efficiency of content moderation, while ensuring accuracy and minimizing bias.

- Human Reviewers: The role of human reviewers remains crucial in ensuring context and nuance are considered during content moderation.

- Community-Based Moderation: Empowering communities to actively participate in identifying and reporting harmful content.
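One common way to combine AI-assisted moderation with human review is confidence-based triage: an automated classifier handles only the clearest cases and routes uncertain ones to people. The Python sketch below illustrates that pattern under assumptions; the `triage` function, the threshold values, and the sample scores are hypothetical, and the classifier that would produce the scores is not shown.

```python
def triage(score, remove_at=0.95, review_at=0.60):
    """Map a classifier's harm score in [0, 1] to a moderation action.

    Thresholds are illustrative assumptions, not recommended values.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be in [0, 1]")
    if score >= remove_at:
        return "remove"        # very confident: act automatically
    if score >= review_at:
        return "human_review"  # uncertain: a person weighs context and nuance
    return "allow"             # low score: leave the content up


# Hypothetical scores from an upstream classifier.
queue = {"post-1": 0.98, "post-2": 0.72, "post-3": 0.10}
decisions = {post_id: triage(score) for post_id, score in queue.items()}
print(decisions)
# {'post-1': 'remove', 'post-2': 'human_review', 'post-3': 'allow'}
```

Lowering `review_at` sends more borderline content to human reviewers at the cost of a larger review queue, which is the scale-versus-nuance trade-off described above.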

Collaboration and Public-Private Partnerships

Addressing this critical challenge necessitates collaboration between tech companies, governments, researchers, and civil society organizations.

- Successful Collaborations: Existing public-private partnerships that have tackled similar online challenges can serve as models.

- Interdisciplinary Approaches: The problem requires interdisciplinary expertise encompassing technology, social sciences, law, and ethics.

- Research and Data Sharing: Increased research and open data sharing are essential to fostering innovation and developing effective solutions.

Conclusion

Whether tech companies bear responsibility when algorithms radicalize mass shooters is a question that demands a nuanced response. Algorithms, while powerful tools, can inadvertently contribute to the spread of extremist ideologies through echo chambers, targeted advertising, and the limitations of content moderation.

Addressing this requires improved algorithm design, enhanced content moderation strategies, and greater transparency and accountability from tech companies. It also demands a serious examination of existing legal frameworks, such as Section 230, and the development of new regulations that address the unique challenges of online radicalization while upholding principles of free speech. Responsibility rests not solely with tech companies but also with governments, researchers, and society as a whole.

We must demand accountability from tech companies for their role in preventing algorithms from radicalizing mass shooters. Contact your lawmakers, support organizations dedicated to countering online extremism, and advocate for policy changes that hold tech companies responsible and help prevent future tragedies. The misuse of algorithms to radicalize mass shooters demands our urgent and collective attention.

Featured Posts

-

Kawasaki W175 Vs Honda St 125 Dax Mana Yang Lebih Baik

May 30, 2025

Kawasaki W175 Vs Honda St 125 Dax Mana Yang Lebih Baik

May 30, 2025 -

Mastering Dwi Defense The Edward Burke Jr Approach In The Hamptons

May 30, 2025

Mastering Dwi Defense The Edward Burke Jr Approach In The Hamptons

May 30, 2025 -

Gun Violence Claims Baylor Football Player Alex Foster City Implements Curfew

May 30, 2025

Gun Violence Claims Baylor Football Player Alex Foster City Implements Curfew

May 30, 2025 -

House Of Kong Exhibition Gorillaz Take Over Londons Copper Box Arena This Summer

May 30, 2025

House Of Kong Exhibition Gorillaz Take Over Londons Copper Box Arena This Summer

May 30, 2025 -

Bruno Fernandes Transfer Al Hilal Negotiations Update

May 30, 2025

Bruno Fernandes Transfer Al Hilal Negotiations Update

May 30, 2025

Latest Posts

-

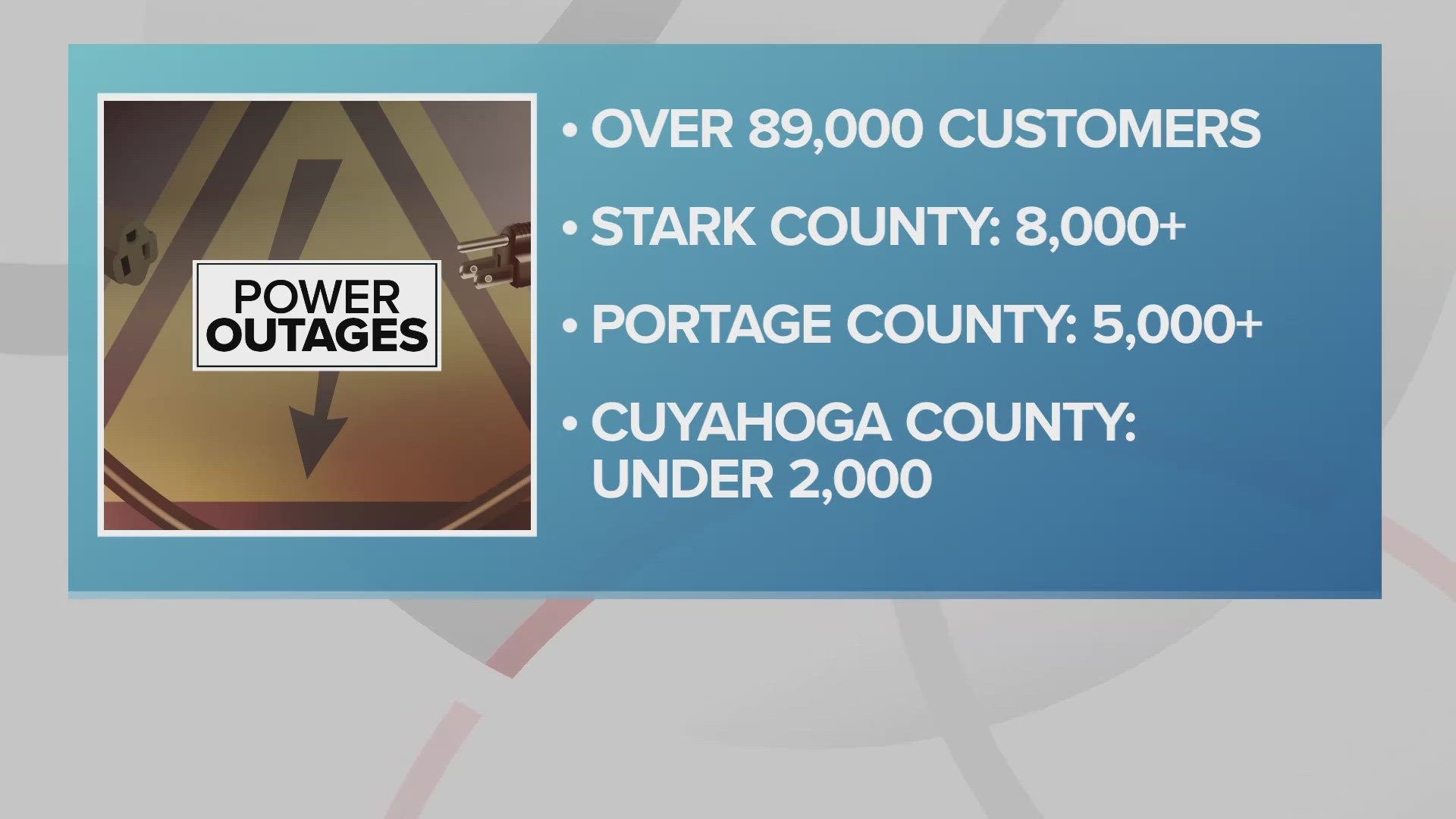

Northeast Ohio Faces Widespread Power Outages Amidst Severe Thunderstorm Warnings

May 31, 2025

Northeast Ohio Faces Widespread Power Outages Amidst Severe Thunderstorm Warnings

May 31, 2025 -

Severe Thunderstorms Bring Power Outages To Northeast Ohio Stay Safe And Informed

May 31, 2025

Severe Thunderstorms Bring Power Outages To Northeast Ohio Stay Safe And Informed

May 31, 2025 -

Power Outages And Weather Alerts Issued Across Northeast Ohio Due To Intense Thunderstorms

May 31, 2025

Power Outages And Weather Alerts Issued Across Northeast Ohio Due To Intense Thunderstorms

May 31, 2025 -

April 29th Twins Guardians Game Progressive Field Weather And Potential Delays

May 31, 2025

April 29th Twins Guardians Game Progressive Field Weather And Potential Delays

May 31, 2025 -

Rain Possible On Election Day In Northeast Ohio

May 31, 2025

Rain Possible On Election Day In Northeast Ohio

May 31, 2025