Building Voice Assistants Made Easy: OpenAI's New Tools

Simplifying Natural Language Understanding (NLU) with OpenAI's Models

Natural Language Understanding (NLU) is the core of any effective voice assistant. Traditionally, building robust NLU capabilities required extensive data annotation and complex model training. However, OpenAI's pre-trained models drastically reduce this complexity. These models handle the heavy lifting of language processing, allowing developers to focus on application logic rather than intricate linguistic details.

- Reduced development time through pre-trained models: OpenAI's models are pre-trained and, in many cases, usable through prompting alone, so you can skip most data collection and training work and reserve fine-tuning for specialized domains. This shortens the development cycle and gets your voice assistant to market faster.

- Improved accuracy in understanding user intent: Because these models are trained on very large text corpora, they tend to interpret user intent accurately even when a request is phrased loosely or ambiguously. This leads to more reliable and helpful voice assistant experiences.

- Support for multiple languages: Many OpenAI models offer multilingual support, expanding the potential reach of your voice assistant to a global audience. This opens doors for international markets and diverse user bases.

- Easy integration with existing voice recognition APIs: Because OpenAI's language models take plain text as input, they can consume transcripts from any speech recognition service, whether OpenAI's own Whisper or a third-party API. This keeps the overall architecture of your voice assistant simple and the development workflow smooth.

Specific OpenAI models relevant to NLU in voice assistants include GPT-3 and its successors, known for their contextual understanding and ability to generate human-like text. For example, you can use GPT-3 to interpret user requests, extract key information, and formulate appropriate responses. Whisper, OpenAI's speech-to-text model, provides accurate transcriptions, feeding clean data into the NLU pipeline.
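As an illustration of using a GPT-style model to interpret a request and extract key information, the sketch below builds a chat-style prompt that asks for JSON output and then validates the reply. It is a minimal sketch under stated assumptions: the intent names, slot format, and prompt wording are hypothetical, and the actual API call is left out so the prompt-building and parsing logic can run on its own.

```python
import json

# Hypothetical intent set for illustration; replace with your assistant's own intents.
KNOWN_INTENTS = {"set_timer", "get_weather", "play_music", "unknown"}

# Hypothetical prompt wording: asks the model for a JSON object only.
SYSTEM_PROMPT = (
    "You are the language-understanding step of a voice assistant. "
    "Reply with JSON only, shaped like "
    '{"intent": "<set_timer|get_weather|play_music|unknown>", '
    '"slots": {"<name>": "<value>"}}.'
)


def build_intent_messages(transcript: str) -> list:
    """Build a role/content message list for intent extraction."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": transcript},
    ]


def parse_intent_reply(reply_text: str) -> dict:
    """Parse the model's JSON reply; anything malformed becomes intent 'unknown'."""
    try:
        data = json.loads(reply_text)
    except json.JSONDecodeError:
        return {"intent": "unknown", "slots": {}}
    if not isinstance(data, dict):
        return {"intent": "unknown", "slots": {}}
    intent = data.get("intent")
    slots = data.get("slots")
    # Reject intents outside the known set rather than trusting the model blindly.
    if not isinstance(intent, str) or intent not in KNOWN_INTENTS:
        intent = "unknown"
    if not isinstance(slots, dict):
        slots = {}
    return {"intent": intent, "slots": slots}
```

With the official `openai` Python package (v1.x), the messages could be sent with `client.chat.completions.create(model=..., messages=build_intent_messages(text))` and the reply read from `response.choices[0].message.content`; the choice of model is up to you. Validating the reply matters because a model can occasionally return malformed or off-list output, and falling back to `unknown` gives the dialog layer something safe to act on.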

Streamlining Voice Recognition and Synthesis

Integrating robust voice recognition and text-to-speech (TTS) capabilities is crucial for a seamless user experience. OpenAI simplifies this process by providing access to high-quality APIs.

- Access to high-quality speech-to-text and text-to-speech APIs: OpenAI offers APIs for both speech-to-text and text-to-speech, eliminating the need to build these complex components from scratch. These APIs provide accurate transcription and natural-sounding synthesized speech.

- Reduced cost and complexity compared to building custom solutions: Developing custom voice recognition and synthesis engines is expensive and resource-intensive. OpenAI's APIs offer a cost-effective and readily available alternative.

- Support for various accents and dialects: OpenAI's speech models aim for broad accent and dialect coverage, which makes your voice assistant more inclusive and accessible to a wider range of users.

- Improved voice clarity and naturalness: The text-to-speech API produces clear, natural-sounding speech, which makes for a more engaging user experience.

OpenAI's Whisper API ([link to Whisper API documentation]) is a prime example of a powerful speech-to-text solution, while their text-to-speech capabilities are constantly evolving, offering increasingly natural and expressive synthetic voices.
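Putting the pieces together, a single voice turn runs speech-to-text, then the language model, then text-to-speech. The following is a minimal sketch of that orchestration with each stage injected as a plain function; `VoicePipeline`, its fields, and the fallback wording are hypothetical names chosen for illustration, not part of any OpenAI SDK.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class VoicePipeline:
    """One request/response turn: audio in -> transcript -> reply text -> audio out.

    Each stage is an injected callable, so real API clients (or test fakes)
    can be swapped in without changing the orchestration logic.
    """

    transcribe: Callable[[bytes], str]  # speech-to-text, e.g. a wrapper around Whisper
    respond: Callable[[str], str]  # language understanding + response generation
    synthesize: Callable[[str], bytes]  # text-to-speech
    fallback_text: str = "Sorry, I didn't catch that. Could you repeat it?"

    def handle_turn(self, audio: bytes) -> Tuple[str, bytes]:
        transcript = self.transcribe(audio).strip()
        # Skip the language model entirely when nothing was recognized.
        reply = self.respond(transcript) if transcript else self.fallback_text
        return reply, self.synthesize(reply)
```

In the `openai` Python package (v1.x), `transcribe` could wrap `client.audio.transcriptions.create(model="whisper-1", file=("speech.wav", audio)).text` and `synthesize` could wrap `client.audio.speech.create(model="tts-1", voice="alloy", input=text).content`; check the current API reference for the available models and voices. Injecting the stages keeps the turn logic testable with fakes and lets you swap in a third-party recognizer without touching it.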

Creating Engaging Conversational Flows

Building an engaging conversational experience is critical for user satisfaction. OpenAI's tools help you design intuitive and responsive interactions.

- Designing conversational dialogs: OpenAI doesn't offer a dedicated visual dialog designer, but its models can drive dynamic conversational flows. You design prompts that guide the conversation and control how user input is handled.

- Techniques for managing user input and generating appropriate responses: OpenAI's models are good at tracking context and generating relevant responses to user input, which helps keep interactions natural and informative.

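- Strategies for creating personalized and context-aware interactions: The Chat Completions API does not remember earlier requests, so personalization comes from what you include in each prompt, such as recent conversation turns, a summary of past interactions, or relevant user-profile fields. Supplying that context produces a more tailored and engaging experience.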

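- Methods for handling unexpected user input or errors: The models cope reasonably well with misspellings, disfluencies, and transcription errors, but they can still misread a request. Validating the model's output and falling back to a clarifying question when a request cannot be understood makes your voice assistant more robust and forgiving.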

By carefully crafting prompts and leveraging the model's contextual understanding, you can build dynamic and responsive conversations that feel natural and intuitive.
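Because each request to a chat-style API is independent, the application has to resend the relevant context on every turn. Here is a minimal sketch of one way to do that, assuming the role/content message format used by OpenAI's chat API: a hypothetical `Conversation` class that personalizes the system prompt from profile fields and keeps only the most recent exchanges so prompts stay bounded.

```python
from collections import deque
from typing import Optional


class Conversation:
    """Holds the context that must be resent with every request to a stateless chat API."""

    def __init__(self, base_prompt: str, profile: Optional[dict] = None, max_turns: int = 5):
        if profile:
            # Personalization: surface selected profile fields in the system prompt.
            details = "; ".join(f"{k}: {v}" for k, v in sorted(profile.items()))
            base_prompt = f"{base_prompt}\nKnown user details: {details}"
        self.system_prompt = base_prompt
        # Each entry is one (user_text, assistant_text) exchange; the oldest drop off.
        self.turns = deque(maxlen=max_turns)

    def messages_for(self, user_text: str) -> list:
        """Build the full message list to send for a new user utterance."""
        messages = [{"role": "system", "content": self.system_prompt}]
        for user, assistant in self.turns:
            messages.append({"role": "user", "content": user})
            messages.append({"role": "assistant", "content": assistant})
        messages.append({"role": "user", "content": user_text})
        return messages

    def record(self, user_text: str, assistant_text: str) -> None:
        """Store a completed exchange once the assistant's reply is known."""
        self.turns.append((user_text, assistant_text))
```

Dropping the oldest turns is the simplest policy; longer-running assistants often summarize older turns instead, at the cost of an extra model call.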

Deploying and Scaling Your Voice Assistant

OpenAI's infrastructure simplifies deployment and scaling, ensuring your voice assistant can handle increasing user demand.

- Cloud-based infrastructure for easy deployment and scaling: OpenAI's models run on OpenAI's hosted infrastructure, so you don't provision or operate GPU servers yourself. Your voice assistant can absorb growing user traffic with little infrastructure management, within the rate limits that apply to your account.

- Cost-effective solutions for handling large volumes of requests: OpenAI's pricing is usage-based, so you pay for what your assistant actually processes rather than for idle capacity. Costs therefore grow in proportion to traffic, which keeps scaling your voice assistant financially predictable.

- Integration with popular cloud platforms: Because the APIs are called over HTTPS, they work from any cloud platform, container, or serverless function, and OpenAI models are also offered through Microsoft's Azure OpenAI Service. This makes it straightforward to fit them into your existing infrastructure.

- Monitoring and management tools for optimizing performance: The OpenAI platform dashboard reports usage and spending, which helps you track consumption; for per-request latency and error rates, you will typically add your own client-side metrics.
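A voice assistant's responsiveness depends on the combined latency of transcription, response generation, and synthesis, so it helps to measure each stage separately. This minimal sketch, using only the Python standard library, records per-stage durations and reports nearest-rank percentiles; `LatencyTracker` and the stage names are hypothetical, and in production you would likely export these numbers to an existing metrics system instead.

```python
import math
import time
from collections import defaultdict
from contextlib import contextmanager


class LatencyTracker:
    """Collects per-stage latencies in seconds and reports nearest-rank percentiles."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock  # injectable so tests can use a fake clock
        self._samples = defaultdict(list)

    @contextmanager
    def measure(self, stage: str):
        start = self._clock()
        try:
            yield
        finally:
            # Record even if the wrapped call raised, so slow failures show up too.
            self._samples[stage].append(self._clock() - start)

    def percentile(self, stage: str, pct: float) -> float:
        samples = sorted(self._samples.get(stage, []))
        if not samples:
            raise ValueError(f"no samples for stage {stage!r}")
        # Nearest-rank method: smallest sample with at least pct% of samples <= it.
        rank = max(1, math.ceil(pct / 100 * len(samples)))
        return samples[rank - 1]
```

Wrap each API call in `with tracker.measure("stt"):` (or `"llm"`, `"tts"`) and compare `tracker.percentile(stage, 95)` across stages to see where a slow turn spends its time.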

Deploying your voice assistant with OpenAI usually means calling its APIs from your chosen backend framework. OpenAI scales the model serving itself, so your attention can go to the user experience rather than to GPU infrastructure, although your backend still has to handle its own concurrency and retry requests that fail because of rate limits or transient errors.
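Rate-limit (HTTP 429) and transient server errors are the main failures a backend must absorb as traffic grows. A common remedy is retrying with capped exponential backoff and random jitter, sketched here; `RetryableError` is a hypothetical stand-in for whichever exceptions you treat as retryable (with the `openai` v1.x package, candidates include `openai.RateLimitError` and `openai.APIConnectionError`).

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RetryableError(Exception):
    """Hypothetical marker for errors worth retrying (e.g. rate limits or 5xx responses)."""


def call_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying RetryableError with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        try:
            return fn()
        except RetryableError:
            attempt += 1
            if attempt >= max_attempts:
                raise  # give up and surface the last error
            # Full jitter: wait a random time in [0, min(max_delay, base_delay * 2**(attempt-1))].
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))
```

The official Python client already retries some failures on its own (configurable with the `max_retries` client option), so an outer loop like this is mainly useful for longer, application-level retry policies. The injectable `sleep` keeps the function testable without real waiting.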

Conclusion

OpenAI's suite of tools has significantly lowered the barrier to entry for building voice assistants. By simplifying NLU, voice recognition/synthesis, conversational design, and deployment, OpenAI empowers developers of all skill levels to create innovative and engaging voice-activated applications. The ease of use and powerful capabilities offered by these tools promise a future where building voice assistants is accessible to everyone. Start building your own voice assistant today and experience the ease and efficiency of OpenAI's innovative solutions! Learn more about OpenAI's offerings for voice assistant development at [link to relevant OpenAI documentation/website].

Featured Posts

-

Trump Administrations China Tariffs A 2025 Outlook

May 18, 2025

Trump Administrations China Tariffs A 2025 Outlook

May 18, 2025 -

Angels Pari Post Rain Game Winning Homer Against White Sox

May 18, 2025

Angels Pari Post Rain Game Winning Homer Against White Sox

May 18, 2025 -

Metropolis Japan Culture History And Modernity

May 18, 2025

Metropolis Japan Culture History And Modernity

May 18, 2025 -

Best Mlb Dfs Picks For May 8th Sleeper Plays And One To Avoid

May 18, 2025

Best Mlb Dfs Picks For May 8th Sleeper Plays And One To Avoid

May 18, 2025 -

Asamh Bn Ladn Ky Shkhsyt Alka Yagnk Ke Mtabq

May 18, 2025

Asamh Bn Ladn Ky Shkhsyt Alka Yagnk Ke Mtabq

May 18, 2025

Latest Posts

-

Taran Killam Discusses His Friendship With Amanda Bynes

May 18, 2025

Taran Killam Discusses His Friendship With Amanda Bynes

May 18, 2025 -

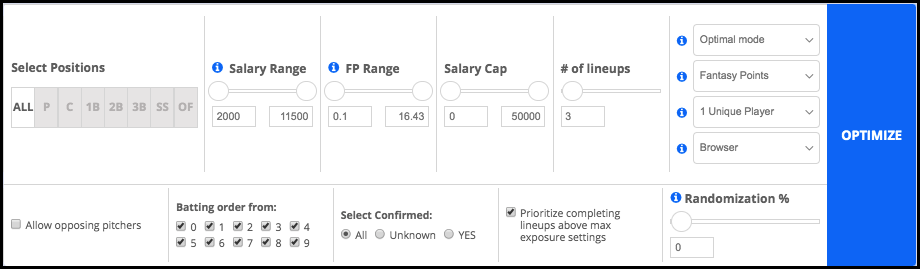

May 8th Mlb Dfs Lineup Optimizer Sleeper Picks And Value Plays

May 18, 2025

May 8th Mlb Dfs Lineup Optimizer Sleeper Picks And Value Plays

May 18, 2025 -

Mlb Dfs May 8th Sleeper Picks And Hitter To Target

May 18, 2025

Mlb Dfs May 8th Sleeper Picks And Hitter To Target

May 18, 2025 -

Amanda Bynes And Rachel Green The Unexpected Comparison Made By Drake Bell

May 18, 2025

Amanda Bynes And Rachel Green The Unexpected Comparison Made By Drake Bell

May 18, 2025 -

Best Mlb Dfs Picks For May 8th Sleeper Plays And One To Avoid

May 18, 2025

Best Mlb Dfs Picks For May 8th Sleeper Plays And One To Avoid

May 18, 2025