Debunking The Myth Of AI Learning: Towards Responsible AI Practices

The Reality of AI Training: Data Dependency and Human Oversight

Contrary to popular belief, AI systems do not learn on their own; people train them. Training relies on large amounts of data, so the quality and representativeness of that data largely determine how a model performs. That dependence is why human involvement matters throughout the entire AI lifecycle.

- Biased data leads to biased AI: If the data used to train an AI system reflects existing societal biases (e.g., gender, racial, or socioeconomic biases), the AI will likely perpetuate and even amplify those biases in its output. This can have serious consequences in areas like loan applications, hiring processes, and even criminal justice.

- Data cleaning and curation are vital steps: Before an AI system can be trained, the data must be carefully cleaned, curated, and pre-processed to remove errors, inconsistencies, and irrelevant information. This is a labor-intensive process requiring significant human expertise.

- The need for diverse and inclusive datasets: To mitigate bias and ensure fairness, AI systems must be trained on diverse and inclusive datasets that accurately reflect the real-world populations they will impact. This requires careful consideration of data representation and the proactive inclusion of underrepresented groups.
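The cleaning and representation checks described above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the field names (`group`, `income`), the reference shares, and the 0.1 tolerance are all assumptions made up for the example, and real projects typically need domain-specific rules on top of this.

```python
from collections import Counter


def clean_records(records, required_fields):
    """Drop records with missing required fields and exact duplicates.

    Assumes every record is a flat dict with hashable values.
    """
    seen = set()
    cleaned = []
    for record in records:
        # Skip records where a required field is absent or empty.
        if any(record.get(field) in (None, "") for field in required_fields):
            continue
        # Use the sorted items as a fingerprint to detect exact duplicates.
        key = tuple(sorted(record.items()))
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(record)
    return cleaned


def group_shares(records, group_field):
    """Return each group's share of the dataset (values sum to 1)."""
    counts = Counter(record[group_field] for record in records)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(shares, reference_shares, tolerance=0.1):
    """List groups whose dataset share trails the reference share by more than tolerance."""
    return sorted(
        group
        for group, reference in reference_shares.items()
        if reference - shares.get(group, 0.0) > tolerance
    )


# Hypothetical toy data: one duplicate and one record with a missing value.
records = [
    {"id": 1, "group": "A", "income": 40000},
    {"id": 1, "group": "A", "income": 40000},
    {"id": 2, "group": "A", "income": None},
    {"id": 3, "group": "A", "income": 52000},
    {"id": 4, "group": "A", "income": 61000},
    {"id": 5, "group": "B", "income": 45000},
]

cleaned = clean_records(records, ["group", "income"])
shares = group_shares(cleaned, "group")
# The reference shares are an assumption standing in for real population data.
gaps = underrepresented(shares, {"A": 0.5, "B": 0.5})
print(len(cleaned), shares, gaps)
```

In this toy dataset, group B ends up with a quarter of the cleaned records against an assumed reference share of one half, so it is reported as underrepresented. Deciding what the reference population should be is itself a human judgment call.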

Furthermore, human intervention remains essential throughout the AI development process.

- Human-in-the-loop systems are crucial for monitoring and control: Continuous human oversight is necessary to monitor AI systems’ performance, identify and correct errors, and ensure they operate within ethical guidelines.

- Addressing ethical considerations and potential biases: Ethical considerations must be integrated into every stage of AI development, from data collection to deployment. This includes proactively identifying and mitigating potential biases and ensuring fairness and accountability.

- Regular audits and evaluations of AI systems: AI systems should undergo regular audits and evaluations to assess their performance, identify areas for improvement, and ensure they continue to meet ethical standards and legal requirements.
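One common way to keep a human in the loop is to let the model decide only when it is confident and to route everything else to a reviewer. The sketch below assumes the model exposes a confidence score between 0 and 1; the function names, the 0.9 threshold, and the review-log format are hypothetical choices for illustration.

```python
def route_prediction(label, confidence, threshold=0.9):
    """Accept confident predictions automatically; send the rest to a human."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= threshold:
        return {"label": label, "decided_by": "model"}
    # Below the threshold the model's output is only a suggestion.
    return {"label": None, "decided_by": "human_review", "model_suggestion": label}


def override_rate(review_log):
    """Fraction of reviewed cases where the human disagreed with the model.

    review_log is a list of (model_suggestion, human_decision) pairs.
    """
    if not review_log:
        return 0.0
    disagreements = sum(1 for suggestion, final in review_log if suggestion != final)
    return disagreements / len(review_log)


confident = route_prediction("approve", 0.95)
uncertain = route_prediction("deny", 0.60)
rate = override_rate([("approve", "approve"), ("deny", "approve")])
print(confident, uncertain, rate)
```

Tracking the override rate over time gives auditors a simple signal: if reviewers disagree with the model more and more often, the system probably needs retraining or a closer look.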

The Limits of Current AI: Narrow vs. General AI

It's vital to distinguish between two types of AI: narrow (or weak) AI and general (or strong) AI. Current AI systems overwhelmingly fall into the category of narrow AI.

- Focus on specific tasks and lack of generalizability: Narrow AI systems excel at specific, pre-defined tasks, like playing chess or recognizing faces. However, they lack the generalizability and adaptability of human intelligence.

- Inability to transfer knowledge between different domains: A narrow AI trained to play chess cannot easily transfer its knowledge to play Go or solve mathematical problems. This limitation is a fundamental difference between current AI and human cognition.

- Limitations in understanding context and nuance: Current AI systems often struggle with understanding context, sarcasm, and other nuances inherent in human language and communication.

General AI, which would possess human-level intelligence and perform any intellectual task a human being can, remains largely hypothetical. It is equally important to debunk the myth of rapidly approaching superintelligence: current AI capabilities are far from that level.

Responsible AI Development: Mitigating Bias and Promoting Transparency

Responsible AI development necessitates a strong ethical foundation. Mitigating bias and promoting transparency are paramount.

- Careful data selection and preprocessing: The careful selection and preprocessing of data is fundamental to minimizing bias. This includes actively seeking diverse data sources and employing techniques to identify and mitigate biases within the data.

- Algorithmic fairness techniques: Researchers are developing algorithmic fairness techniques to mitigate bias in AI algorithms themselves. These techniques aim to ensure that AI systems make fair and equitable decisions across different groups.

- Regular bias audits and monitoring: Ongoing monitoring and regular bias audits are crucial for identifying and addressing biases that may emerge over time, as datasets evolve and AI systems are updated.
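A bias audit usually starts by comparing how often each group receives a favorable outcome. The sketch below computes two widely used summary numbers, the demographic parity difference and the disparate impact ratio, on made-up decisions. The data and group labels are hypothetical, and these metrics are only one of several competing definitions of fairness.

```python
def selection_rates(decisions):
    """Compute the favorable-outcome rate per group.

    decisions is an iterable of (group, outcome) pairs, where outcome is
    1 for a favorable decision and 0 otherwise.
    """
    totals, positives = {}, {}
    for group, outcome in decisions:
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + outcome
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(rates):
    """Gap between the highest and lowest selection rate (0 means parity)."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1 means parity)."""
    highest = max(rates.values())
    # If no group receives favorable outcomes, all rates are equal.
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical audit sample: group A is approved 3 times out of 4, group B once.
decisions = [
    ("A", 1), ("A", 1), ("A", 1), ("A", 0),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

rates = selection_rates(decisions)
gap = demographic_parity_difference(rates)
ratio = disparate_impact_ratio(rates)
print(rates, gap, ratio)
```

A ratio below 0.8 is often treated as a warning sign (the "four-fifths rule" used in US employment contexts), but the right threshold depends on the application and the applicable law. Re-running a check like this after every data or model update is what makes the audit "regular".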

Transparency and explainability are equally crucial.

- Understanding how AI systems make decisions: It's essential to understand how AI systems arrive at their decisions. This requires the development of interpretable AI models that allow us to understand the reasoning behind their outputs.

- The need for interpretable AI models: Interpretable AI models enable greater trust and accountability, allowing humans to identify and correct errors or biases more easily.

- Promoting trust and accountability: Transparency builds trust and promotes accountability, essential aspects of responsible AI development and deployment.
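To make "interpretable" concrete, consider a simple linear scoring model: because the score is a sum of weight times feature value, each feature's contribution can be read off directly. The weights, feature names, and applicant values below are invented for illustration and do not come from any real system.

```python
def explain_linear_score(weights, bias, features):
    """Return the score and per-feature contributions, largest effect first."""
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    # Rank by absolute size so strong negative effects are not hidden.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical credit-style model with integer weights for readability.
weights = {"income_k": 2, "late_payments": -30, "years_employed": 5}
applicant = {"income_k": 50, "late_payments": 2, "years_employed": 3}

score, ranked = explain_linear_score(weights, bias=10, features=applicant)
for name, contribution in ranked:
    print(f"{name}: {contribution:+d}")
print("score:", score)
```

Here a reviewer can see that two late payments cost the applicant 60 points. More complex models do not decompose this cleanly, which is why they usually need separate explanation techniques.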

The Future of AI: Collaboration and Continuous Improvement

The future of AI depends on collaboration and continuous improvement. Responsible AI development requires a multidisciplinary approach.

- Involving experts from diverse fields: Collaboration between computer scientists, ethicists, social scientists, and domain experts is crucial to address the complex ethical and societal implications of AI.

- Interdisciplinary research and collaboration: Interdisciplinary research initiatives are vital for advancing our understanding of AI ethics and developing effective strategies for responsible AI development.

- Open-source initiatives and community involvement: Open-source initiatives and community involvement can foster transparency and collaboration, accelerating the development of responsible AI practices.

Continuous learning and improvement are essential.

- Adapting to emerging challenges: The landscape of AI is constantly evolving, presenting new ethical and societal challenges that require continuous adaptation and learning.

- Regular updates and refinements of AI systems: AI systems require regular updates and refinements to address emerging issues and ensure they remain aligned with ethical principles.

- Ongoing monitoring and evaluation: Continuous monitoring and evaluation are crucial to identifying and addressing potential risks and biases as AI systems are deployed and used in real-world applications.
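Ongoing monitoring can be as simple as comparing recent accuracy against the accuracy measured at deployment time and flagging any period that falls too far below it. The sketch below assumes ground-truth labels eventually become available for deployed predictions; the window names, baseline value, and 0.05 allowed drop are hypothetical.

```python
def accuracy(pairs):
    """Share of (predicted, actual) pairs where the prediction was right."""
    return sum(1 for predicted, actual in pairs if predicted == actual) / len(pairs)


def flag_degraded_windows(windows, baseline_accuracy, max_drop=0.05):
    """Return (window_name, accuracy) for windows that fall too far below baseline.

    windows is a list of (name, pairs) tuples; empty windows are skipped.
    """
    flagged = []
    for name, pairs in windows:
        if not pairs:
            continue
        window_accuracy = accuracy(pairs)
        if baseline_accuracy - window_accuracy > max_drop:
            flagged.append((name, window_accuracy))
    return flagged


# Hypothetical weekly logs: 9 of 10 correct in week 1, 7 of 10 in week 2.
week_1 = [(1, 1)] * 9 + [(1, 0)]
week_2 = [(1, 1)] * 7 + [(1, 0)] * 3

alerts = flag_degraded_windows(
    [("week_1", week_1), ("week_2", week_2)],
    baseline_accuracy=0.9,
)
print(alerts)
```

A flagged window is a prompt for human investigation, not an automatic fix: the cause could be a shift in the incoming data, a labeling problem, or a genuine change in the real world.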

Embracing Responsible AI Practices

In conclusion, debunking the myth of self-learning AI is paramount. AI is a powerful tool trained by humans, not a self-evolving entity. Responsible development requires careful data handling, meticulous attention to ethical considerations, unwavering transparency, and ongoing monitoring. Let's move beyond the myth of self-learning AI and embrace responsible AI practices to build a more equitable and beneficial future. Learn more about responsible AI development through resources like [link to resource 1], [link to resource 2], and [link to resource 3].

Featured Posts

-

Deficit Foire Au Jambon 2025 Le Maire De Bayonne Questionne La Charge Financiere Sur La Ville

May 31, 2025

Deficit Foire Au Jambon 2025 Le Maire De Bayonne Questionne La Charge Financiere Sur La Ville

May 31, 2025 -

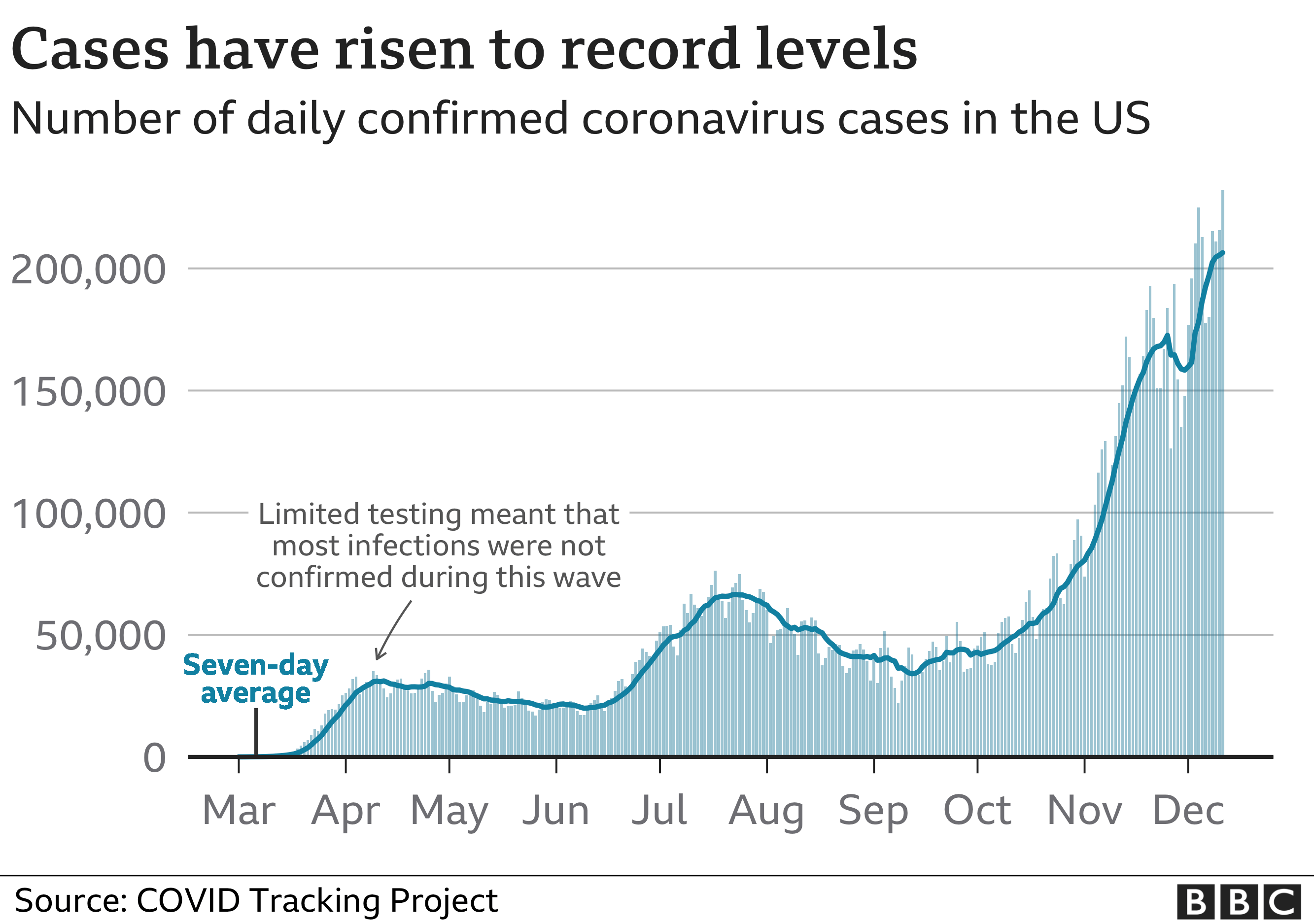

Rising Covid 19 Infections The Who Points To A New Variant

May 31, 2025

Rising Covid 19 Infections The Who Points To A New Variant

May 31, 2025 -

The Good Life Finding Purpose And Fulfillment

May 31, 2025

The Good Life Finding Purpose And Fulfillment

May 31, 2025 -

Munguias Adverse Vada Finding A Detailed Analysis

May 31, 2025

Munguias Adverse Vada Finding A Detailed Analysis

May 31, 2025 -

Rejets Toxiques De Sanofi Le Geant Pharmaceutique Face Aux Accusations

May 31, 2025

Rejets Toxiques De Sanofi Le Geant Pharmaceutique Face Aux Accusations

May 31, 2025