Deepfake Detection Foiled: Cybersecurity Expert's CNN Business Demonstration

The Cybersecurity Expert's CNN Business Demonstration: A Detailed Look

The demonstration, showcased on CNN Business, involved a leading cybersecurity expert, [Insert Expert's Name and credentials here], who crafted several deepfakes to highlight vulnerabilities in existing detection systems. The expert used advanced Generative Adversarial Networks (GANs) and neural rendering techniques to produce highly convincing results. These were not crude imitations; they were expertly produced video and audio clips designed to bypass current detection algorithms.

Deepfake Examples: The demonstration included:

- A video deepfake of a prominent politician delivering a fabricated speech endorsing a controversial policy.

- An audio deepfake of a CEO authorizing a large, fraudulent financial transaction.

- A manipulated video altering a person's appearance in security-camera footage.

Detection Tools Tested: The deepfakes were run through a range of commercially available and academic detection tools, including video-analysis software, audio-analysis software, and several AI-based detectors. The expert documented both how each deepfake was created and the specific techniques used to evade detection.

Weaknesses Exposed in Current Deepfake Detection Technology

The CNN Business demonstration revealed significant limitations in current deepfake detection. By bypassing many leading detection methods, the expert exposed the following weaknesses:

- Difficulty Detecting Subtle Manipulations: Current systems often struggle with subtle alterations, such as slight changes in facial expressions or lip movements, that are also hard for the human eye to catch.

- Vulnerability to Advanced Deepfake Techniques: Sophisticated techniques such as GANs and neural rendering produce highly realistic deepfakes that can fool current detection algorithms.

- Dependence on Specific Features: Many detection methods look for particular artifacts or inconsistencies that were common in older deepfakes. Newer generation techniques can suppress or remove these artifacts, leaving such detectors with little to find.

- Lack of Real-Time Detection Capabilities: Many existing tools require significant processing time, rendering them unsuitable for real-time applications where immediate detection is critical.

Implications of Deepfake Detection Failures for Businesses and Individuals

The consequences of failing to detect deepfakes are severe, impacting both businesses and individuals. For businesses, the implications include:

- Reputational Damage: A deepfake video or audio clip could severely damage a company's reputation, leading to loss of customer trust and potential financial losses.

- Financial Losses: Deepfakes can be used to commit fraud, leading to significant financial losses for businesses.

- Legal Liabilities: Companies could face legal repercussions for failing to adequately protect themselves against deepfake-related attacks.

Individuals also face significant risks:

- Risk of Identity Theft and Fraud: Deepfakes can be used to impersonate individuals, leading to identity theft and financial fraud.

- Spread of Misinformation: Deepfakes can be used to spread misinformation about individuals, straining personal relationships and damaging reputations.

- Potential for Blackmail and Extortion: Deepfakes can be used for blackmail and extortion, putting individuals at significant risk.

Future Directions in Deepfake Detection and Mitigation

The need for enhanced deepfake detection is undeniable. Future solutions will likely involve:

- More Robust AI Models: Developing more sophisticated AI models capable of detecting subtle manipulations and adapting to new deepfake techniques is crucial.

- Multimodal Analysis: Combining video and audio analysis with other data sources, such as metadata and social media context, can improve detection accuracy.

- Blockchain Technology: A blockchain could serve as a tamper-evident record of cryptographic hashes or digital signatures for authentic videos and audio, making altered copies easier to identify.
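The provenance idea in the last item rests on a simple mechanism: the publisher hashes the original media bytes and signs that hash, so any later copy can be checked against it. The sketch below illustrates only that mechanism, under stated assumptions. It uses a shared secret key and HMAC-SHA256 from Python's standard library as a stand-in for both a public-key signature scheme (such as Ed25519) and a ledger. `sign_media`, `verify_media`, and `SECRET_KEY` are hypothetical names, not part of any provenance standard.

```python
import hashlib
import hmac

# Assumption: a shared secret stands in for a real signing key pair.
# A production provenance system would use public-key signatures so that
# anyone can verify a clip without being able to forge a tag.
SECRET_KEY = b"hypothetical-publisher-key"


def media_digest(media: bytes) -> bytes:
    """Return the SHA-256 digest of the raw media bytes."""
    return hashlib.sha256(media).digest()


def sign_media(media: bytes, key: bytes = SECRET_KEY) -> str:
    """Hypothetical helper: produce a hex tag binding the key to this exact content."""
    return hmac.new(key, media_digest(media), hashlib.sha256).hexdigest()


def verify_media(media: bytes, tag: str, key: bytes = SECRET_KEY) -> bool:
    """Recompute the tag and compare in constant time; any byte change fails."""
    expected = sign_media(media, key)
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    original = b"\x00\x01frame-data-of-an-authentic-clip"
    tag = sign_media(original)

    tampered = bytearray(original)
    tampered[-1] ^= 0x01  # flip one bit to simulate an edit

    print(verify_media(original, tag))         # True
    print(verify_media(bytes(tampered), tag))  # False
```

A valid tag shows that the bytes are unchanged since signing. A missing or invalid tag only shows that the clip cannot be vouched for; on its own it does not prove the clip is a deepfake.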

Beyond technological advancements, education and awareness are vital.

Potential future mitigation strategies include:

- Enhanced AI algorithms using advanced machine learning techniques.

- Improved digital watermarking technologies to embed verifiable information within media.

- Government regulations and legislation to hold perpetrators accountable.

- Public education campaigns to increase awareness and critical thinking skills.
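The watermarking item can be illustrated with one of the simplest schemes, least-significant-bit (LSB) embedding, which hides a short payload in the lowest bit of each sample. This is a minimal sketch over a raw byte string standing in for 8-bit pixel or audio samples. `embed_watermark` and `extract_watermark` are hypothetical helper names. LSB marks are fragile (re-encoding or compression usually destroys them), so practical watermarking systems use considerably more robust techniques.

```python
def embed_watermark(samples: bytes, payload: bytes) -> bytes:
    """Hypothetical helper: hide payload bits (MSB first) in each sample's lowest bit."""
    bits = [(byte >> (7 - i)) & 1 for byte in payload for i in range(8)]
    if len(bits) > len(samples):
        raise ValueError("carrier too small for payload")
    out = bytearray(samples)
    for idx, bit in enumerate(bits):
        out[idx] = (out[idx] & 0xFE) | bit  # clear the LSB, then set it to the payload bit
    return bytes(out)


def extract_watermark(samples: bytes, length: int) -> bytes:
    """Hypothetical helper: read `length` payload bytes back out of the lowest bits."""
    if length * 8 > len(samples):
        raise ValueError("carrier too small for requested length")
    result = bytearray()
    for b in range(length):
        value = 0
        for i in range(8):
            value = (value << 1) | (samples[b * 8 + i] & 1)  # rebuild MSB first
        result.append(value)
    return bytes(result)


if __name__ == "__main__":
    carrier = bytes(range(256)) * 2  # 512 fake 8-bit samples
    marked = embed_watermark(carrier, b"ID:42")
    print(extract_watermark(marked, 5))  # b'ID:42'
```

Each sample changes by at most one, which keeps the mark imperceptible. The same property is also why it is fragile: any processing that touches low-order bits erases it.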

Collaboration between researchers, cybersecurity professionals, and policymakers is crucial to develop comprehensive solutions to counter this growing threat.

Conclusion: The Urgent Need for Enhanced Deepfake Detection Strategies

The CNN Business demonstration served as a wake-up call: current deepfake detection is not sufficient to counter the sophisticated techniques now being used. The implications reach businesses, individuals, and society as a whole. Meeting this threat will require more effective detection techniques, sustained investment in research, and greater public awareness. To learn more about the latest advancements in deepfake detection and to contribute to this effort, explore resources from [Insert Link to relevant research or expert's website here]. Let's work together to strengthen our defenses against the growing threat of AI-generated misinformation and fraud.

Featured Posts

-

Experience Uber One Free Deliveries And Discounts Now In Kenya

May 17, 2025

Experience Uber One Free Deliveries And Discounts Now In Kenya

May 17, 2025 -

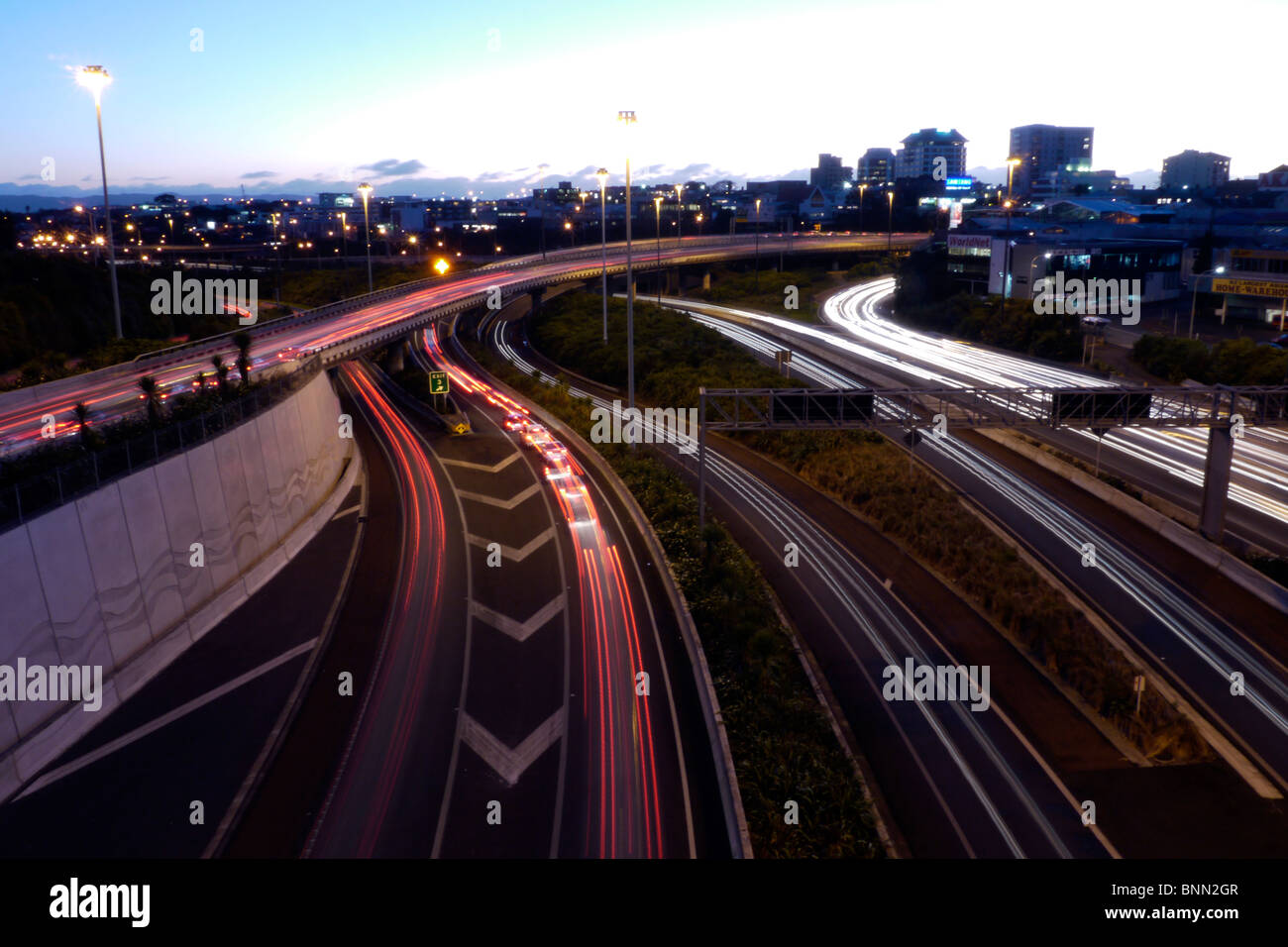

Auckland Southern Motorway E Scooter Riders Reckless Journey Dashcam

May 17, 2025

Auckland Southern Motorway E Scooter Riders Reckless Journey Dashcam

May 17, 2025 -

Ewdt Stylr Anbae Sart Lshtwtjart Qbl Mwajht Nhayy Alkas

May 17, 2025

Ewdt Stylr Anbae Sart Lshtwtjart Qbl Mwajht Nhayy Alkas

May 17, 2025 -

Victoria Del Palmeiras 2 0 Contra Bolivar Goles Y Resumen

May 17, 2025

Victoria Del Palmeiras 2 0 Contra Bolivar Goles Y Resumen

May 17, 2025 -

Jalen Brunsons Return Knicks Playoff Push Intensifies

May 17, 2025

Jalen Brunsons Return Knicks Playoff Push Intensifies

May 17, 2025