Is AI Therapy A Surveillance Tool In A Police State? A Critical Examination

Data Privacy and Security Concerns in AI Therapy

AI therapy platforms collect large amounts of sensitive personal data, which raises serious privacy and security concerns, particularly under a government inclined toward mass surveillance. Records of emotional states, thoughts, and behavioral patterns are highly sensitive, and a breach or unauthorized access could turn a therapy platform into a surveillance tool.

The Volume and Sensitivity of Data Collected

AI therapy platforms harvest a wealth of intimate personal information. The sheer volume and sensitivity of this data create significant risks.

- Data breaches can expose intimate details of a patient's life, leading to identity theft, blackmail, or social stigma. Imagine the devastating consequences of private anxieties and therapeutic vulnerabilities being publicly exposed.

- The lack of robust security measures in some AI platforms increases the risk of data exploitation. Platforms without strong encryption and security protocols leave such sensitive information exposed to cyberattacks.

- The distributed nature of data storage can make it difficult to track and regulate. Data may be stored across multiple servers and jurisdictions, making it challenging to enforce data protection laws and hold companies accountable.

Lack of Transparency and User Control

Users often lack clarity on how their data is collected, stored, used, and protected. This opacity is a major vulnerability, because people cannot guard against uses of their data that they do not know about.

- Lack of transparency fosters distrust and hinders informed consent. Users may unwittingly agree to terms that compromise their privacy.

- Limited user control over data deletion or modification poses significant risks. Users should have the right to access, correct, and delete their data, but this is not always guaranteed.

- Algorithmic bias in data handling can lead to unfair or discriminatory outcomes. AI algorithms trained on biased datasets may perpetuate and amplify existing societal inequalities.

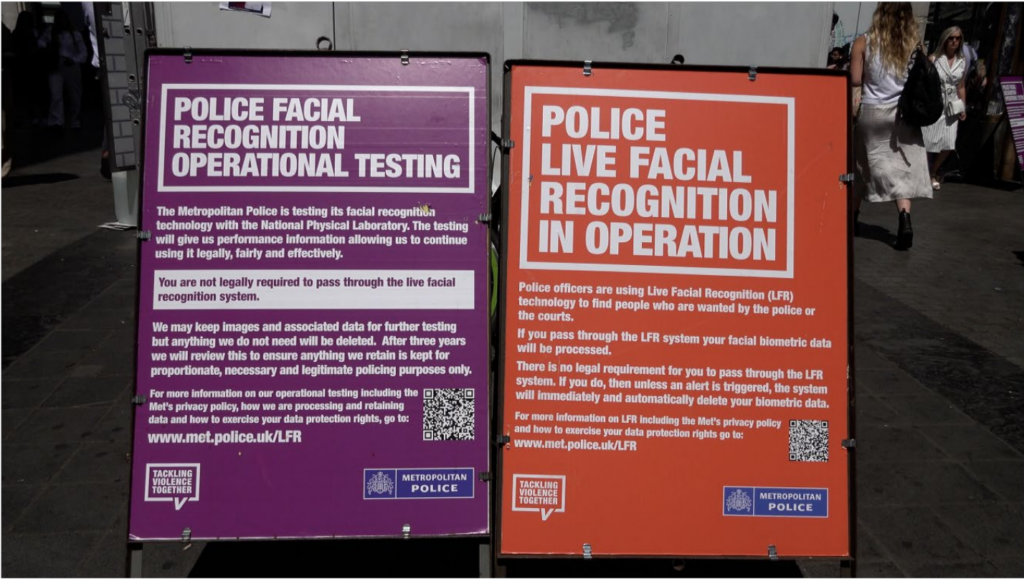

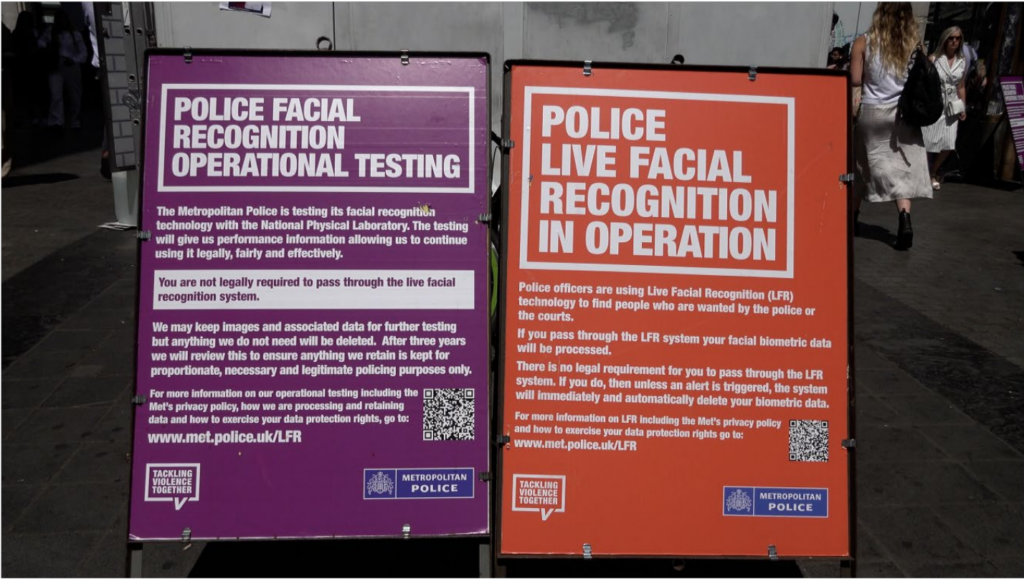

Potential for Government Surveillance and Abuse

Government surveillance and abuse of AI therapy data is the most alarming risk in a police-state scenario.

Government Access to Sensitive Data

AI therapy data could become a valuable intelligence resource for authoritarian governments. Legal loopholes or direct access could allow authorities to monitor individuals' mental health and political leanings.

- Governments could use this data to identify and target dissidents or those deemed a threat. This could lead to preemptive arrests, censorship, or other forms of repression.

- Individuals expressing unconventional views or struggling with mental health issues could be unfairly targeted.

- The lack of strong legal protections against government access is a critical vulnerability. Stronger regulations are needed to prevent unwarranted government intrusion.

Manipulation and Psychological Profiling

AI algorithms could analyze user data to identify patterns indicative of specific beliefs or behaviors, enabling manipulation and psychological profiling and turning a therapeutic tool into a means of surveillance.

- AI-driven manipulation could reinforce biases and influence individual decisions. Targeted propaganda or manipulative advertising could be used to sway public opinion.

- Psychological profiling based on AI therapy data could lead to discrimination and unfair treatment. Individuals could be unfairly judged based on their mental health history.

- The lack of safeguards against such manipulation poses significant ethical concerns. Ethical guidelines and regulations are urgently needed to prevent such abuse.

Mitigating the Risks: Towards Responsible AI Therapy Development

Reducing the surveillance potential of AI therapy requires a multi-pronged approach that combines technical and ethical solutions.

Strengthening Data Protection Regulations

Robust legal frameworks are essential to protect user privacy and prevent data misuse. Key measures include:

- Implementing strong encryption and anonymization techniques. This can help to protect data from unauthorized access and breaches.

- Developing clear data retention policies. Data should only be stored for as long as necessary, and securely deleted afterwards.

- Establishing independent oversight bodies to monitor compliance. Independent audits can help to ensure that companies are adhering to data protection regulations.
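
The first two measures in this list can be illustrated with a short Python sketch. It is not drawn from any particular platform: the `SessionRecord` type, the 90-day `RETENTION` window, and the function names are hypothetical, chosen only for illustration. It uses a keyed hash (HMAC-SHA256) to pseudonymize user identifiers and a simple filter to drop records older than the retention window. Pseudonymization of this kind is weaker than true anonymization, since anyone holding the key can re-link records to users, and it does not replace encrypting the stored data itself.

```python
import hashlib
import hmac
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical retention window; a real policy would be set by law and need.
RETENTION = timedelta(days=90)


@dataclass(frozen=True)
class SessionRecord:
    user_token: str       # pseudonym stored instead of the raw user ID
    created_at: datetime  # timezone-aware creation time
    note: str             # session content (would be encrypted at rest)


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a user ID with a keyed hash (HMAC-SHA256, hex-encoded)."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def purge_expired(records, now, retention=RETENTION):
    """Return only the records still inside the retention window."""
    return [r for r in records if now - r.created_at < retention]


if __name__ == "__main__":
    key = b"example-key-kept-outside-the-database"  # assumption: managed separately
    now = datetime.now(timezone.utc)
    token = pseudonymize("user-1234", key)
    records = [
        SessionRecord(token, now - timedelta(days=10), "recent session"),
        SessionRecord(token, now - timedelta(days=200), "old session"),
    ]
    for record in purge_expired(records, now):
        print(record.user_token[:12], record.note)
```

Keeping the key outside the database matters here: if an attacker or an authority obtains only the stored records, the tokens alone do not reveal who the users are.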

Promoting Ethical AI Development

Prioritizing ethical considerations in the design and deployment of AI therapy platforms is crucial.

- Regular audits to ensure fairness and accountability. These audits should identify and address any biases or vulnerabilities in AI algorithms.

- User education and awareness campaigns to inform users about data privacy risks. Users need to be aware of the risks associated with sharing their data and how to protect their privacy.

- Promoting the development of open-source and transparent AI systems. Open-source systems allow for greater scrutiny and can help to reduce the risk of bias and manipulation.

Conclusion

While AI therapy offers immense potential for improving access to mental healthcare, the risk of its misuse as a surveillance tool in a police state cannot be ignored. The potential for data breaches, government surveillance, and psychological manipulation calls for a critical and proactive approach. Strengthening data protection regulations, promoting ethical AI development, and fostering open dialogue are essential steps to ensure that AI therapy remains a tool for good and not an instrument of oppression. We must demand greater transparency and accountability from developers and governments to prevent AI therapy from becoming a threat to individual liberties. Let's work together to ensure that AI therapy is developed and deployed responsibly, with safeguards against its use for surveillance.
