Is There A BBC Agatha Christie Deepfake? Evidence And Analysis

What Constitutes a Deepfake?

Deepfakes are synthetic media, most often video or audio recordings, created with artificial intelligence (AI) and machine learning. The technology can convincingly superimpose one person's face or voice onto another's, producing realistic but entirely fabricated content. A model is typically trained on a large dataset of images, video, or audio of the target individual so that it can mimic their expressions, movements, and even vocal inflections.

Several indicators can help identify a deepfake:

- Unnatural blinking: Deepfakes often struggle to replicate the subtle nuances of natural blinking. Eyes may blink too infrequently, too frequently, or in an unnatural pattern.

- Inconsistent lighting: Discrepancies in lighting between the subject's face and the background can be a sign of manipulation.

- Subtle distortions in facial features: Close examination might reveal slight distortions around the edges of the face or inconsistencies in facial features, particularly around the mouth and eyes.

- Compression artifacts: Deepfakes may show blocking or smearing in the manipulated region that does not match how the rest of the frame, or the original source material, was compressed.
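
The blinking indicator can be sketched in code. The example below is a minimal illustration, not a validated detector: it assumes a separate (hypothetical) face-tracking step has already produced one eye-openness score per frame between 0.0 (closed) and 1.0 (open), and the `closed_below`, `low`, and `high` thresholds are illustrative assumptions rather than established values.

```python
from typing import List


def count_blinks(eye_openness: List[float], closed_below: float = 0.2) -> int:
    """Count blinks as open-to-closed transitions in a per-frame series."""
    blinks = 0
    was_closed = False
    for value in eye_openness:
        is_closed = value < closed_below
        if is_closed and not was_closed:
            blinks += 1  # a new blink starts when the eye first closes
        was_closed = is_closed
    return blinks


def blinks_per_minute(eye_openness: List[float], fps: float,
                      closed_below: float = 0.2) -> float:
    """Convert the blink count into a rate, given the video frame rate."""
    if not eye_openness or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")
    duration_minutes = len(eye_openness) / fps / 60.0
    return count_blinks(eye_openness, closed_below) / duration_minutes


def blink_rate_is_suspicious(rate: float, low: float = 5.0,
                             high: float = 40.0) -> bool:
    """Flag rates outside an assumed plausible range (illustrative bounds)."""
    return rate < low or rate > high
```

A rate outside the assumed range is only a prompt for closer inspection. Genuine footage of someone concentrating, or a very short clip, can fall outside it as well.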

Deepfakes have already circulated in both politics and entertainment, which illustrates the technology's potential for manipulation and misinformation and the need for vigilance when encountering online media.

Evidence of a Potential BBC Agatha Christie Deepfake (or Lack Thereof)

At the time of writing, there is no credible evidence to support the existence of a BBC Agatha Christie deepfake. However, let's examine potential lines of inquiry:

Analyzing Visual Evidence:

To date, no verifiable visual evidence of a BBC Agatha Christie deepfake has surfaced. Any alleged screenshots or videos claiming to show such a deepfake should be scrutinized for the following:

- Unnatural lip synchronization: Does the actor's lip movement perfectly match the dialogue? Slight inconsistencies are common in deepfakes.

- Inconsistencies in skin texture: Deepfakes may struggle to accurately replicate fine details like skin pores and wrinkles.

- Blurring or artifacts around the edges of the face: These artifacts can be a telltale sign of digital manipulation.
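
The lip-synchronization check can be approximated numerically. The sketch below assumes two per-frame series of equal length have already been extracted by other (hypothetical) tools: `mouth`, a mouth-opening measurement, and `loudness`, the audio energy for the same frames. It searches for the frame shift at which the two series correlate best.

```python
import math
from typing import Sequence, Tuple


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation of two equal-length series (0.0 if either is flat)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have equal length of at least 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def best_sync_offset(mouth: Sequence[float], loudness: Sequence[float],
                     max_shift: int = 5) -> Tuple[int, float]:
    """Return (shift, correlation) for the best-matching frame shift.

    A positive shift means the mouth movement lags the audio by that
    many frames; a negative shift means it leads the audio.
    """
    if len(mouth) != len(loudness):
        raise ValueError("series must have equal length")
    best = (0, -1.0)
    for shift in range(-max_shift, max_shift + 1):
        if shift >= 0:
            a = mouth[shift:]
            b = loudness[:len(loudness) - shift]
        else:
            a = mouth[:shift]
            b = loudness[-shift:]
        if len(a) < 2:
            continue
        r = pearson(a, b)
        if r > best[1]:
            best = (shift, r)
    return best
```

A low best correlation, or a best shift that drifts over the course of a clip, would be a reason to look more closely. Poorly dubbed or re-encoded genuine footage can produce the same pattern, so the result is not proof of manipulation.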

No published expert opinion or forensic analysis currently supports claims of a BBC Agatha Christie deepfake.

Examining Audio Evidence:

Similarly, no verifiable audio evidence exists to support claims of a deepfake featuring Agatha Christie's voice. Any purported audio should be analyzed for:

- Voice pitch inconsistencies: Does the voice maintain a consistent pitch and tone? Deepfakes may struggle to perfectly replicate subtle vocal variations.

- Artificial-sounding vocalizations: The voice may sound slightly robotic or unnatural.

- Lack of background noise consistency: If the audio is purported to come from a scene, does the background ambience match what should be present?
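
As a rough illustration of the pitch check, the sketch below flags abrupt pitch jumps between consecutive voiced frames. It assumes a pitch tracker (not shown, and hypothetical here) has already produced one fundamental-frequency estimate in Hz per frame, with `None` for unvoiced frames. The `max_jump_semitones` threshold is an illustrative assumption.

```python
import math
from typing import List, Optional


def semitone_jumps(pitch_hz: List[Optional[float]],
                   max_jump_semitones: float = 6.0) -> List[int]:
    """Return indices of voiced frames whose pitch jumps sharply
    relative to the immediately preceding voiced frame."""
    flagged = []
    previous = None
    for index, pitch in enumerate(pitch_hz):
        if pitch is None or pitch <= 0:
            previous = None  # unvoiced frame: do not compare across the gap
            continue
        if previous is not None:
            # Distance between two frequencies, measured in semitones.
            jump = abs(12.0 * math.log2(pitch / previous))
            if jump > max_jump_semitones:
                flagged.append(index)
        previous = pitch
    return flagged
```

Natural speech also contains large pitch movements, and pitch trackers make octave errors, so flagged frames are best treated as places to listen more carefully rather than as evidence on their own.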

No published expert audio analysis currently supports claims of a BBC Agatha Christie deepfake.

Contextual Clues and Source Analysis:

The origin and source of any purported deepfake are crucial. Tracing the alleged deepfake back to its source can reveal clues about its authenticity:

- Credibility of the source: Was the video or audio shared by a reputable news outlet or a known purveyor of misinformation?

- Metadata analysis: Examining metadata (date created, location data, etc.) can reveal discrepancies or inconsistencies.

- Social media analysis: Checking for patterns of distribution and engagement can help determine the nature and purpose of the content.
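
The metadata check can be sketched as a simple consistency test. The example below assumes the timestamps have already been read from the file by some other tool and placed in a dictionary; the keys `created` and `modified`, and the `claimed_recorded` parameter (the date the sharer says the footage was recorded), are hypothetical names chosen for illustration.

```python
from datetime import datetime
from typing import Dict, List


def metadata_inconsistencies(meta: Dict[str, datetime],
                             claimed_recorded: datetime) -> List[str]:
    """List timestamp problems that would justify closer scrutiny."""
    problems = []
    created = meta.get("created")
    modified = meta.get("modified")
    if created is None:
        problems.append("creation timestamp is missing")
    if created is not None and created < claimed_recorded:
        # The file existed before the event it is said to show.
        problems.append("file predates the claimed recording date")
    if created is not None and modified is not None and modified < created:
        problems.append("file was modified before it was created")
    return problems
```

Metadata is easy to strip or edit, and many platforms remove it on upload, so an empty result does not establish authenticity and a missing timestamp does not establish manipulation.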

No credible source has yet presented verifiable evidence of a BBC Agatha Christie deepfake.

Debunking Common Misconceptions about the Alleged BBC Agatha Christie Deepfake

Rumors surrounding a BBC Agatha Christie deepfake are unsubstantiated:

- Myth: A BBC spokesperson confirmed a deepfake's existence. Fact: No such confirmation has been made by the BBC.

- Myth: Leaked footage from a future production shows a deepfake. Fact: No credible evidence of leaked footage exists.

- Myth: A prominent AI expert verified the deepfake. Fact: No such expert verification has been presented.

The Implications of Deepfakes in the Media Landscape

The potential implications of deepfakes are far-reaching. In the entertainment industry, they threaten the authenticity of media and could damage the reputations of actors and production companies. More broadly, deepfakes can spread misinformation and could be used to influence elections or incite social unrest. Developing critical thinking skills and media literacy is therefore essential to detecting and combating them.

Conclusion

Currently, there is no credible evidence to support the existence of a BBC Agatha Christie deepfake. Our analysis found no verifiable visual or audio evidence, and the rumor has not been traced to any credible source. However, the very possibility underscores the growing threat of deepfakes and the importance of media literacy. Remain vigilant. Critically evaluate any online content before sharing it, learn more about deepfake detection techniques, and report suspicious content. Let's work together to combat the spread of misinformation and fraudulent content of this kind.

Featured Posts

-

Nyt Mini Crossword Answers For March 16 2025 Your Daily Puzzle Help

May 20, 2025

Nyt Mini Crossword Answers For March 16 2025 Your Daily Puzzle Help

May 20, 2025 -

Gina Maria Schumacher Kci Michaela Schumachera

May 20, 2025

Gina Maria Schumacher Kci Michaela Schumachera

May 20, 2025 -

Going Solo Tips And Advice For First Time Solo Travelers

May 20, 2025

Going Solo Tips And Advice For First Time Solo Travelers

May 20, 2025 -

Innovation Spatiale En Afrique Le Mass Lance Sa Premiere Edition A Abidjan

May 20, 2025

Innovation Spatiale En Afrique Le Mass Lance Sa Premiere Edition A Abidjan

May 20, 2025 -

Cote D Ivoire Le Port D Abidjan Accueille Le Plus Grand Navire De Son Histoire

May 20, 2025

Cote D Ivoire Le Port D Abidjan Accueille Le Plus Grand Navire De Son Histoire

May 20, 2025

Latest Posts

-

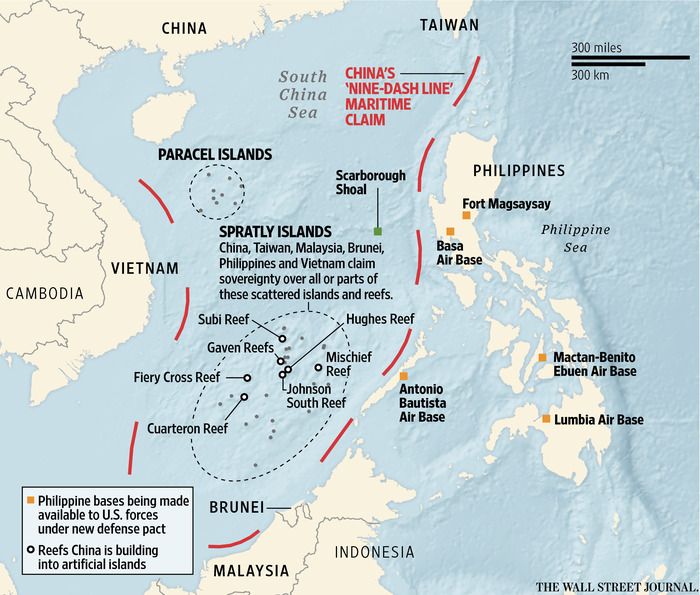

Us Typhon Missile System In Philippines A Counter To Chinese Aggression

May 20, 2025

Us Typhon Missile System In Philippines A Counter To Chinese Aggression

May 20, 2025 -

South China Sea Tensions China Pressures Philippines On Missile Deployment

May 20, 2025

South China Sea Tensions China Pressures Philippines On Missile Deployment

May 20, 2025 -

Drone Truck For Tomahawk Missiles A Usmc Army Collaboration

May 20, 2025

Drone Truck For Tomahawk Missiles A Usmc Army Collaboration

May 20, 2025 -

Usmc Tomahawk Missile Launch Army Eyes Drone Truck Technology

May 20, 2025

Usmc Tomahawk Missile Launch Army Eyes Drone Truck Technology

May 20, 2025 -

Philippines Should Withdraw Missile System Chinas Demand In South China Sea

May 20, 2025

Philippines Should Withdraw Missile System Chinas Demand In South China Sea

May 20, 2025