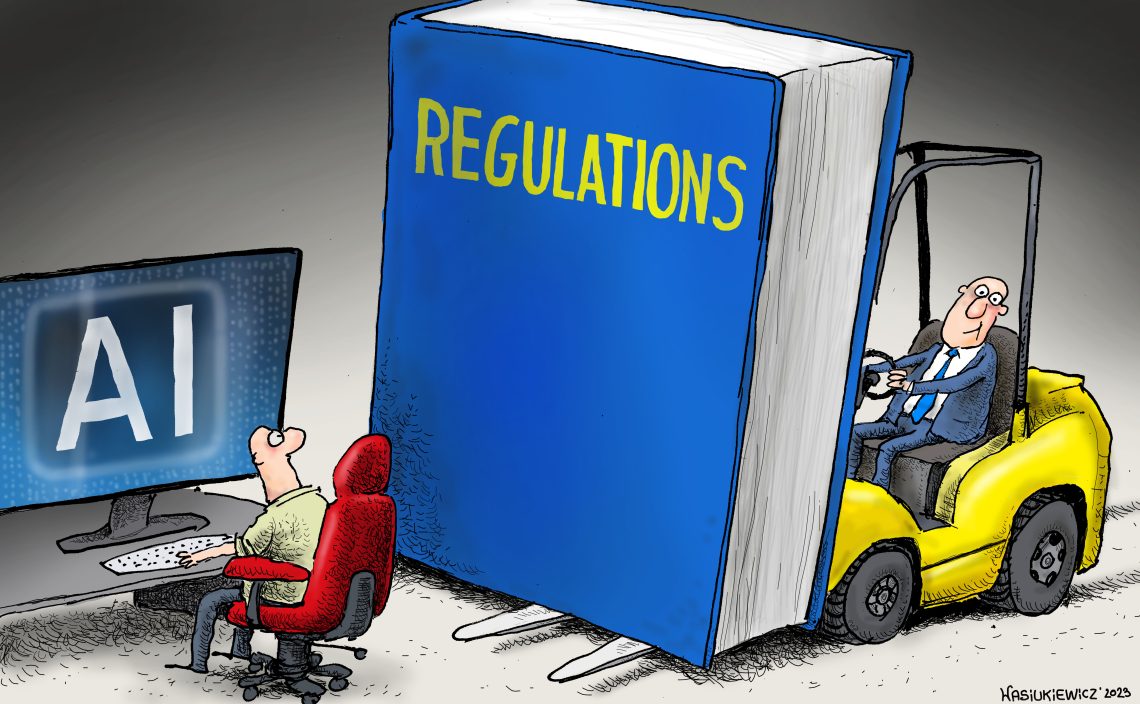

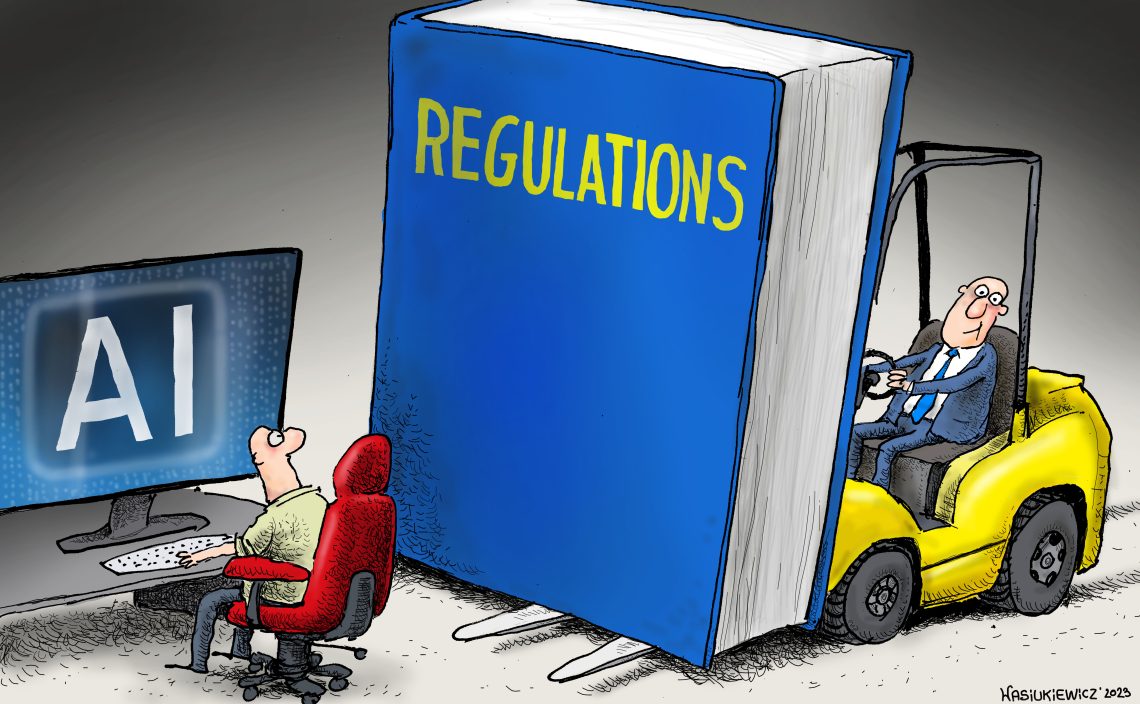

Negotiating AI Regulations: The Trump Administration And Europe's Response

The Trump Administration's Approach to AI Regulation

The Trump administration's approach to AI regulation can best be described as laissez-faire, prioritizing innovation through minimal government intervention. This "light-touch regulation" philosophy aimed to foster a vibrant AI ecosystem in the US, largely leaving the development and deployment of AI to the private sector.

A Laissez-Faire Approach:

- Limited Federal Regulations: Unlike the EU, the Trump administration largely avoided comprehensive federal AI regulations. There was a notable absence of overarching legislation specifically addressing AI ethics or safety.

- Emphasis on Industry Self-Regulation: The administration largely relied on industry self-regulation and voluntary guidelines, encouraging companies to adopt ethical AI practices independently.

- Potential Drawbacks: This approach may have boosted AI innovation in the short term, but it left several concerns largely unaddressed: algorithmic bias, data privacy violations, and the lack of consumer protection mechanisms. Critics also worried that unchecked AI development could lead to job displacement and societal disruption.

Focus on Specific Applications:

While largely avoiding broad AI regulation, the Trump administration did engage in targeted AI regulation in specific areas deemed crucial for national security.

- National Security AI: Efforts focused on using AI to enhance national defense capabilities and counter emerging threats, leading to investments in AI research and development for military applications.

- Targeted Regulation in Specific Sectors: Rules were introduced in certain sectors with AI-related risks, but they remained sector-specific and lacked the holistic approach adopted by the EU.

- Limitations: This targeted approach, while addressing some concerns, failed to provide a cohesive framework for addressing the broader ethical and societal implications of AI development across various sectors.

The European Union's Approach to AI Regulation

In stark contrast to the US approach, the European Union has adopted a proactive and comprehensive strategy for AI regulation, prioritizing ethical considerations and data protection.

The EU's Emphasis on Ethical Considerations:

The EU's approach to AI regulation is deeply rooted in its commitment to fundamental rights and data protection, significantly influenced by the General Data Protection Regulation (GDPR).

- GDPR's Influence: The GDPR's emphasis on data privacy and individual consent has significantly shaped the EU's approach to AI regulation, demanding transparency and accountability in the use of personal data.

- Development of the AI Act: The EU's AI Act, adopted in 2024 with obligations phasing in over the following years, takes a risk-based approach to regulating AI systems, categorizing them by their potential for harm and imposing increasingly stringent requirements on higher-risk applications.

- Focus on Transparency, Accountability, and Human Oversight: The EU emphasizes transparency in AI algorithms, mechanisms for accountability when errors occur, and meaningful human oversight of AI systems. Together these priorities put responsible, ethical AI at the center of its strategy.

A Risk-Based Approach:

The EU's AI Act employs a risk-based approach, sorting AI systems into tiers according to their potential for harm:

- High-Risk AI Systems: AI systems used in critical infrastructure, healthcare, law enforcement, and other areas with significant potential for harm are subject to the strictest regulatory requirements. This includes stringent testing procedures, rigorous documentation, and human oversight.

- Limited-Risk AI Systems: AI systems that pose lower risks face lighter obligations, chiefly transparency requirements such as telling users that they are interacting with an AI system.

- Unacceptable-Risk AI Systems: Certain AI applications, such as those deemed manipulative or discriminatory, are banned outright. The prohibition reflects the EU's commitment to AI ethics and safeguards.

Comparing and Contrasting Approaches: A Transatlantic Divide

The contrasting approaches of the US and EU to AI regulation reveal fundamental differences in their philosophical underpinnings.

Differences in Philosophical Approaches:

The US prioritizes innovation and a free market approach, while the EU champions ethical considerations and data protection.

- Innovation vs. Ethics: This fundamental difference shapes their respective regulatory strategies. The US approach risks harm from unchecked AI development, while the EU's approach risks stifling innovation through overly stringent rules.

- Implications for International AI Cooperation: These differing approaches pose a challenge to international AI cooperation and the development of global AI standards.

Implications for Global AI Governance:

The divergence between US and EU approaches highlights the challenges in achieving international consensus on AI regulation.

- Challenges of Achieving International Consensus: Harmonizing regulations across different jurisdictions with varying priorities and approaches presents a major obstacle to global AI governance.

- The Need for Harmonization of Regulations: A lack of harmonization can lead to fragmented markets, regulatory arbitrage, and uneven protection of rights.

- The Role of International Organizations: International organizations like the OECD and the UN play a crucial role in fostering dialogue and collaboration, facilitating the development of global AI standards and best practices.

Conclusion: Negotiating AI Regulations: A Path Forward

The Trump administration's laissez-faire approach contrasted sharply with the EU's proactive, risk-based, and ethically focused regulatory strategy. The two approaches reflect distinct priorities (innovation on one side, ethical considerations on the other) and pose significant challenges for global AI governance. The key takeaways are the need for a balanced approach that fosters innovation while protecting fundamental rights, and the need for international collaboration to establish global AI standards. Negotiating AI regulations successfully will require understanding both perspectives and sustaining dialogue between nations, so that innovation and ethical considerations are addressed together.
