OpenAI's 2024 Developer Event: Simplifying Voice Assistant Creation

Streamlined Development Tools and APIs

OpenAI's push to simplify voice assistant creation centers on better development tools and APIs. If the expected improvements arrive, they should cut the time and resources required to bring voice-enabled applications to market.

Simplified API Access

OpenAI is expected to unveil significantly improved APIs for natural language processing (NLP) and speech processing. Expected improvements include:

- Reduced latency: Faster response times mean a more fluid and responsive user experience.

- Enhanced documentation: Clearer and more comprehensive documentation will make API integration significantly easier for developers of all skill levels.

- Improved error handling: More robust error messages and handling will expedite debugging and troubleshooting.

- Easier authentication: Simpler authentication will streamline onboarding for developers.

Easier API access translates directly into reduced development time and cost. Developers can focus on building unique features instead of grappling with complex API integrations, which leaves more room for innovation.
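To make the error handling and authentication points concrete, here is a minimal sketch of what client code typically does today: read the API key from an environment variable and retry transient failures with exponential backoff. Everything named here (`VOICE_API_KEY`, `TransientAPIError`, `call_with_retries`) is a hypothetical placeholder for illustration, not part of any OpenAI SDK.

```python
import os
import time


class TransientAPIError(Exception):
    """Hypothetical stand-in for a retryable failure (timeout, rate limit)."""


def load_api_key(env_var="VOICE_API_KEY"):
    """Read the key from the environment so it never lives in source code."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return key


def call_with_retries(request, max_attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call request() and retry transient failures with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return request()
        except TransientAPIError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error to the caller
            sleep(base_delay * 2 ** (attempt - 1))  # waits 0.5s, 1s, 2s, ...
```

Clearer error messages and simpler authentication would shrink exactly this kind of boilerplate, since the client needs to tell retryable failures apart from permanent ones.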

Pre-trained Models for Faster Prototyping

Access to a library of pre-trained models for speech recognition, speech synthesis, and natural language understanding can shorten the development cycle considerably. Developers can build on these components and concentrate on the unique aspects of their applications rather than training foundational models from scratch. The expected offering includes:

- Pre-trained models for various accents, languages, and domains: Support for a wide range of linguistic variations allows developers to create voice assistants accessible to a global audience.

- Customizable models: The ability to fine-tune pre-trained models ensures that developers can tailor voice assistants to their specific needs and requirements.

- Easy model fine-tuning options: Simple and intuitive tools for fine-tuning pre-trained models further reduce development time and complexity.

Pre-trained models offer three main benefits: faster prototyping, lower development costs, and a shorter time to market. For example, a developer building a voice-controlled smart home assistant can rely on pre-trained models for speech recognition and natural language understanding, and spend their effort on integrating with existing smart home devices and designing a distinctive user interface.
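The smart home example can be sketched as a split between a model layer and an application layer. In this illustrative snippet, `understand` is a toy keyword matcher standing in for a pre-trained language-understanding model, and `SmartHome` is the application-specific part a developer would write. All names are assumptions made for the sketch.

```python
from dataclasses import dataclass


@dataclass
class Intent:
    action: str  # e.g. "turn_on"
    device: str  # e.g. "lights"


def understand(transcript):
    """Toy stand-in for a pre-trained language-understanding model."""
    text = transcript.lower()
    for action, phrase in (("turn_on", "turn on"), ("turn_off", "turn off")):
        if phrase in text:
            # Everything after the command phrase is treated as the device name.
            device = text.split(phrase, 1)[1].replace("the ", "", 1).strip(" .!?")
            return Intent(action, device)
    return None


class SmartHome:
    """Application-specific layer: maps intents onto device state."""

    def __init__(self):
        self.state = {"lights": False, "heater": False}

    def handle(self, transcript):
        intent = understand(transcript)
        if intent is None or intent.device not in self.state:
            return "Sorry, I didn't catch that."
        self.state[intent.device] = intent.action == "turn_on"
        status = "on" if self.state[intent.device] else "off"
        return f"OK, {intent.device} {status}."
```

Because `handle` only depends on the `Intent` it receives, the toy matcher could be swapped for a real model without touching the device logic.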

Enhanced Capabilities for Natural and Intuitive Interactions

The next stage of voice assistant development focuses on more natural and engaging interactions, and OpenAI's advancements are expected to play a key part in it.

Improved Contextual Understanding

OpenAI's advancements in NLP are expected to give voice assistants a much better understanding of context, which leads to more natural and engaging conversations. Key improvements include:

- Better handling of complex queries: Voice assistants will be better equipped to handle nuanced and multi-part queries.

- Improved dialogue management: More sophisticated dialogue management will enable more natural-flowing conversations.

- Enhanced intent recognition: Accurate intent recognition is crucial for providing relevant and helpful responses.

Contextual understanding is paramount for creating a realistic and user-friendly experience. Imagine a voice assistant that remembers previous interactions, understands the user's preferences, and anticipates their needs. This level of sophistication significantly improves user satisfaction and engagement.
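As a rough illustration of "remembering previous interactions", the sketch below keeps a small amount of state between turns so that a follow-up such as "there" or "it" resolves to the topic of the previous turn. The class and method names are hypothetical, and a production system would rely on a language model rather than keyword matching.

```python
class DialogueContext:
    """Keeps the last mentioned entity and user preferences across turns."""

    def __init__(self):
        self.last_entity = None
        self.preferences = {}

    def remember_preference(self, key, value):
        self.preferences[key] = value

    def resolve(self, utterance, known_entities):
        """Return the entity an utterance refers to, using history for pronouns."""
        words = utterance.lower().replace("?", "").replace(".", "").split()
        for entity in known_entities:
            if entity in words:
                self.last_entity = entity  # update the conversation topic
                return entity
        if any(pronoun in words for pronoun in ("it", "that", "there")):
            return self.last_entity  # fall back to the previous turn's topic
        return None
```

For instance, after "What's the weather in Paris?", a follow-up of "And how about tomorrow there?" resolves to the same city, while a pronoun with no prior history resolves to nothing.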

Lifelike Speech Synthesis

Improved speech synthesis technology is crucial for creating voice assistants that sound natural and engaging. OpenAI's advancements promise:

- Wider range of voices and accents: A broader selection of voices will allow developers to customize the personality of their voice assistants.

- Improved emotional expression: The ability to convey emotion in speech synthesis creates a more engaging and human-like interaction.

- Customization options for voice tone and personality: Developers will have greater control over the voice characteristics of their voice assistants, allowing for better brand alignment and user experience tailoring.

Lifelike speech synthesis greatly impacts user experience. Natural-sounding voices increase user engagement and satisfaction, making interactions feel more intuitive and less robotic.

Accessibility and Democratization of Voice Assistant Technology

OpenAI's efforts are focused on making voice assistant creation accessible to a broader range of developers.

Lower Barrier to Entry

The simplified tools and APIs are designed to open voice assistant creation to developers at any level of AI expertise. This is achieved through:

- Easier learning curve: Intuitive tools and simplified processes make it easier for developers to get started.

- Comprehensive tutorials and documentation: Detailed tutorials and documentation provide the support developers need to succeed.

- Robust community support: A vibrant community of developers fosters collaboration and knowledge sharing.

OpenAI is actively reducing the technical hurdles that previously hindered broader adoption. This will encourage greater innovation and a more diverse range of voice assistant applications.

Focus on Ethical Considerations

OpenAI recognizes the ethical implications of AI development and is incorporating safeguards to mitigate potential risks:

- Tools to mitigate bias in training data: OpenAI is actively working to address bias in training data to ensure fair and unbiased voice assistants.

- Improved data privacy measures: Enhanced privacy measures protect user data and maintain trust.

- Enhanced security protocols: Robust security protocols protect voice assistants from malicious attacks.

Addressing ethical concerns is crucial for responsible AI development. OpenAI's proactive approach aims to ensure that voice assistant technology is developed and used responsibly.

Conclusion

OpenAI's 2024 Developer Event is expected to reshape voice assistant creation. The streamlined tools, enhanced capabilities, and focus on accessibility promise to accelerate the adoption of conversational AI across industries. By lowering the barrier to entry and addressing ethical considerations, OpenAI is widening access to this technology. Learn more about these advancements at the OpenAI 2024 Developer Event and start building your next voice-enabled application.

Featured Posts

-

Amber Heards Twins The Elon Musk Fatherhood Question

May 16, 2025

Amber Heards Twins The Elon Musk Fatherhood Question

May 16, 2025 -

Auction Of Kid Cudis Personal Items Nets Unexpectedly High Prices

May 16, 2025

Auction Of Kid Cudis Personal Items Nets Unexpectedly High Prices

May 16, 2025 -

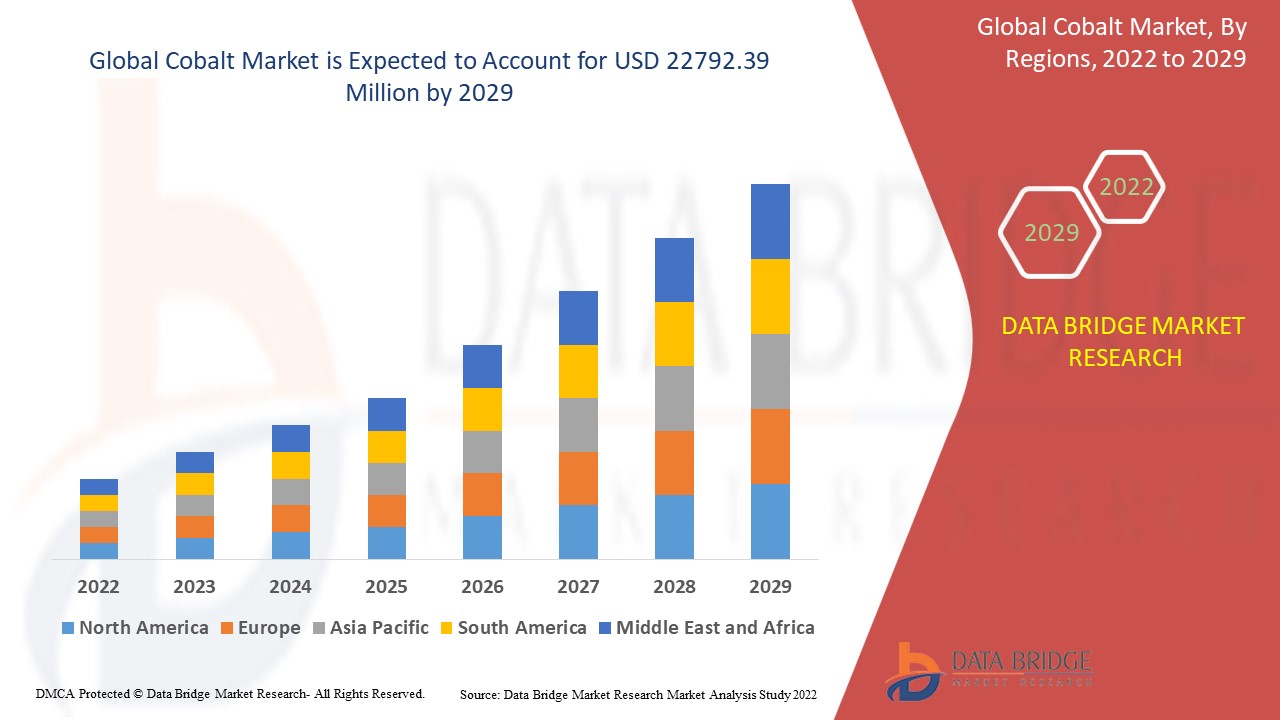

Congos Cobalt Export Restrictions Implications For The Global Cobalt Market

May 16, 2025

Congos Cobalt Export Restrictions Implications For The Global Cobalt Market

May 16, 2025 -

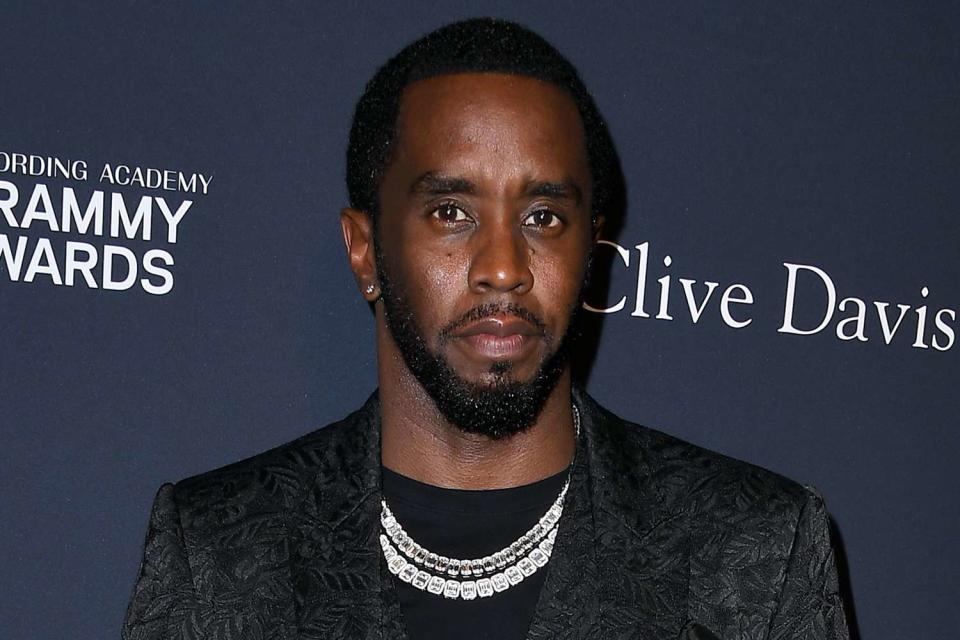

Diddy Sex Trafficking Trial Cassie Venturas Explosive Testimony

May 16, 2025

Diddy Sex Trafficking Trial Cassie Venturas Explosive Testimony

May 16, 2025 -

Athletic Club De Bilbao Vavel Usas Coverage Of The Lions

May 16, 2025

Athletic Club De Bilbao Vavel Usas Coverage Of The Lions

May 16, 2025