Tech Companies And Mass Violence: The Algorithmic Connection

The Role of Social Media Algorithms in Spreading Extremist Ideologies

Social media algorithms, designed to maximize user engagement, often create environments ripe for the spread of extremist viewpoints. This occurs through two primary mechanisms: echo chambers and targeted advertising.

Echo Chambers and Filter Bubbles

Algorithms prioritize content that keeps users engaged, often leading to echo chambers and filter bubbles. These reinforce existing biases and limit exposure to diverse perspectives.

- Engagement over Accuracy: Ranking algorithms are typically optimized for engagement metrics (likes, shares, comments) rather than factual accuracy, which they do not directly measure. Because sensationalist, misleading, and outright false content often provokes strong reactions, it tends to be amplified; conspiracy theories and hate speech are prominent examples.

- Personalized Content Feeds: Feeds tailored to individual preferences can inadvertently isolate users within echo chambers, exposing them to increasingly extreme viewpoints without counterbalancing perspectives. Over time, this can contribute to online radicalization by making users more receptive to extremist propaganda.

- Because engagement determines what users see, and what they see shapes their future engagement, this bias toward engagement creates a feedback loop that strengthens extremist narratives and makes diverse, balanced information harder to find. Effective content moderation strategies are crucial to mitigate these effects.
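The feedback loop described above can be illustrated with a toy simulation. This is a minimal sketch under loudly stated assumptions, not a model of any real platform's ranking system: each post has a hypothetical "extremity" score, predicted engagement is assumed to peak near the user's current views with a small bonus for more sensational content, and each post the user consumes is assumed to pull their preference partway toward it. `Item`, `predicted_engagement`, `top_item`, and `simulate` are illustrative names only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    name: str
    extremity: float  # hypothetical scale: 0.0 = mild, 1.0 = most extreme


def predicted_engagement(item: Item, user_pref: float, bonus: float) -> float:
    # Assumption: users engage most with content near their current views
    # (squared-distance penalty), plus a bonus for more sensational content.
    return -((item.extremity - user_pref) ** 2) + bonus * item.extremity


def top_item(items: list[Item], user_pref: float, bonus: float) -> Item:
    # Pure engagement ranking: show whatever scores highest; accuracy is ignored.
    return max(items, key=lambda it: predicted_engagement(it, user_pref, bonus))


def simulate(items: list[Item], user_pref: float, rounds: int,
             bonus: float = 0.2, drift: float = 0.5) -> list[float]:
    """Return the user's preference after each round of engagement ranking."""
    history = []
    for _ in range(rounds):
        shown = top_item(items, user_pref, bonus)
        # Assumption: consuming an item nudges the user's preference toward it.
        user_pref += drift * (shown.extremity - user_pref)
        history.append(round(user_pref, 3))
    return history


items = [Item(f"post-{i}", i / 10) for i in range(11)]
print(simulate(items, user_pref=0.2, rounds=10))             # with sensational bonus
print(simulate(items, user_pref=0.2, rounds=10, bonus=0.0))  # no bonus: stays at 0.2
```

With the bonus set to zero, the ranker simply shows the post closest to the user's existing views and the preference never moves. With even a small sensational bonus, each round surfaces something slightly more extreme than the last, and the preference ratchets upward.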

Targeted Advertising and Recruitment

Extremist groups exploit targeted advertising to reach individuals susceptible to their ideologies. Algorithms enable precise targeting based on user data, allowing propaganda and recruitment materials to reach specific demographics.

- Precision Targeting: Extremist groups use social media platforms to run targeted ad campaigns aimed at individuals who have previously engaged with similar content or expressed interests that align with extremist viewpoints.

- Recruitment Campaigns: These targeted ads can range from seemingly innocuous content to direct recruitment calls, effectively reaching potential recruits within their existing online spaces.

- The challenge is detecting and preventing such campaigns while respecting users' privacy and freedom of speech.

The Spread of Misinformation and Hate Speech

The speed and scale at which algorithms facilitate the spread of misinformation and hate speech are alarming. This creates a toxic online environment that can incite real-world violence.

The Speed and Scale of Online Disinformation

Algorithmic amplification can dramatically accelerate the spread of false or misleading narratives, making their influence very difficult to counter.

- Viral Content: Misinformation spreads rapidly through viral content, often outpacing fact-checking efforts. This speed can lend false narratives a sense of urgency and legitimacy, making them more credible to susceptible individuals.

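- Real-World Consequences: Viral misinformation has been linked to real-world violence, ranging from attacks on specific individuals or groups to larger-scale events. In 2018, for example, rumors spread on WhatsApp in India were linked to a series of mob lynchings, and UN investigators said Facebook had played a significant role in spreading hatred against Rohingya Muslims in Myanmar.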

- Combating this requires a multi-pronged approach involving fact-checking organizations, media literacy initiatives, and improved algorithmic design to limit the reach of false information.

The Lack of Effective Content Moderation

Tech companies struggle to effectively moderate content and remove hate speech at the scale required. Automated systems have limitations, and human oversight is costly and time-consuming.

- Scale of the Problem: The sheer volume of content uploaded to social media platforms overwhelms even sophisticated content moderation systems.

- Limitations of Automation: Automated systems often struggle to identify nuanced forms of hate speech or sarcasm, leading to both false positives and false negatives.

- Ethical Considerations: The balance between freedom of speech and the prevention of hate speech is a complex ethical challenge with no easy answers. Overly aggressive censorship can be counterproductive, while inadequate moderation can have dangerous consequences.
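The trade-off between false positives and false negatives can be made concrete with a small example. This is a minimal sketch assuming an imaginary classifier that assigns each post a harm score between 0 and 1; the scores, the ground-truth labels, and the function name `confusion_at_threshold` are all hypothetical. Every post scoring at or above the threshold is flagged.

```python
def confusion_at_threshold(scored_posts, threshold):
    """Count errors when every post scoring >= threshold is flagged."""
    false_pos = sum(1 for score, harmful in scored_posts
                    if score >= threshold and not harmful)  # benign post flagged
    false_neg = sum(1 for score, harmful in scored_posts
                    if score < threshold and harmful)       # harmful post missed
    return false_pos, false_neg


# Hypothetical (score, is_harmful) pairs from an imaginary classifier.
posts = [
    (0.95, True), (0.85, True),
    (0.70, False),  # sarcastic but benign: the model over-scores it
    (0.60, True),   # coded hate speech: the model under-scores it
    (0.40, False), (0.30, True), (0.10, False),
]

for t in (0.9, 0.5, 0.2):
    fp, fn = confusion_at_threshold(posts, t)
    print(f"threshold={t}: false positives={fp}, false negatives={fn}")
```

On this made-up data, a strict threshold (0.9) wrongly flags nothing but misses three harmful posts, while a loose one (0.2) misses nothing but flags two benign posts. When a model misreads sarcasm or coded language, no single threshold removes both kinds of error, which is why the balance described above has no easy answer.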

The Ethical and Legal Implications for Tech Companies

How far tech companies are ethically and legally responsible for the spread of extremism and violence on their platforms has become a central question.

Corporate Responsibility and Accountability

Tech companies face increasing pressure to take responsibility for the misuse of their platforms. This includes calls for greater transparency and accountability.

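- Legal Ramifications: Tech companies could face legal repercussions for failing to adequately address the spread of harmful content on their platforms, though their exposure varies by jurisdiction. In the United States, Section 230 of the Communications Decency Act largely shields platforms from liability for user-posted content, while the EU's Digital Services Act imposes risk-mitigation obligations backed by fines of up to 6% of global annual turnover.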

- Balancing Free Speech and Public Safety: The challenge lies in striking a balance between protecting free speech and ensuring public safety. This necessitates thoughtful regulation and robust content moderation strategies.

- Voluntary corporate social responsibility initiatives alone are no longer sufficient; proactive measures are needed to prevent the spread of extremism and violence online.

The Need for Improved Algorithmic Transparency and Design

Greater transparency in algorithmic decision-making and the development of more ethical AI systems are crucial.

- Algorithmic Transparency: Users and regulators need greater insight into how algorithms prioritize and distribute content to effectively address bias and promote fairness.

- Ethical AI Development: AI can play a significant role in identifying and flagging harmful content, but it must be developed and deployed responsibly to avoid bias and unintended consequences.

- Human-in-the-loop systems, which combine the strengths of both human judgment and AI-powered analysis, are a promising approach to improving content moderation.
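One common way to structure a human-in-the-loop system is confidence-based routing: the model acts on its own only when it is very confident, and sends the uncertain middle band to human moderators. The following is a minimal sketch assuming a classifier that outputs a harm score between 0 and 1. The thresholds (0.95 and 0.6) and the names `Action`, `route`, and `triage` are hypothetical and do not reflect any platform's actual values or API.

```python
from enum import Enum


class Action(Enum):
    AUTO_REMOVE = "auto_remove"
    HUMAN_REVIEW = "human_review"
    ALLOW = "allow"


def route(score: float, remove_above: float = 0.95, review_above: float = 0.6) -> Action:
    # Assumption: score is a probability-like estimate that a post is harmful.
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be in [0, 1]")
    if score >= remove_above:
        return Action.AUTO_REMOVE   # model is confident: act automatically
    if score >= review_above:
        return Action.HUMAN_REVIEW  # uncertain band: a human moderator decides
    return Action.ALLOW             # low risk: leave the post up


def triage(scores: dict[str, float], **thresholds: float) -> dict[Action, list[str]]:
    """Group post IDs by the action each one is routed to."""
    queue: dict[Action, list[str]] = {action: [] for action in Action}
    for post_id, score in scores.items():
        queue[route(score, **thresholds)].append(post_id)
    return queue


queue = triage({"a": 0.99, "b": 0.75, "c": 0.20, "d": 0.61})
for action, post_ids in queue.items():
    print(action.value, post_ids)
```

The width of the review band is the key design choice. Widening it sends more borderline posts, such as sarcasm or coded language, to people and reduces automated mistakes, but raises moderation cost; narrowing it does the reverse.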

Conclusion

The relationship between tech companies' algorithms and mass violence is complex and multifaceted. While algorithms are not the sole cause of such tragedies, evidence suggests they can significantly contribute to the spread of extremism and violence by creating echo chambers, facilitating targeted advertising, and accelerating the dissemination of misinformation and hate speech. The lack of effective content moderation and the ethical challenges associated with algorithmic decision-making further complicate the issue. We must critically examine our own online consumption habits and demand greater transparency and accountability from tech companies regarding their algorithms. Supporting policies that promote ethical AI development and effective content moderation is crucial to addressing the dangerous connection between tech companies' algorithms and mass violence, and to preventing future tragedies. Let's work together to build a safer and more responsible digital world.

Featured Posts

-

Canadas Measles Elimination A Status Report And The Risks Ahead

May 30, 2025

Canadas Measles Elimination A Status Report And The Risks Ahead

May 30, 2025 -

Tileoptikes Metadoseis M Savvatoy 19 Aprilioy Odigos Programmatos

May 30, 2025

Tileoptikes Metadoseis M Savvatoy 19 Aprilioy Odigos Programmatos

May 30, 2025 -

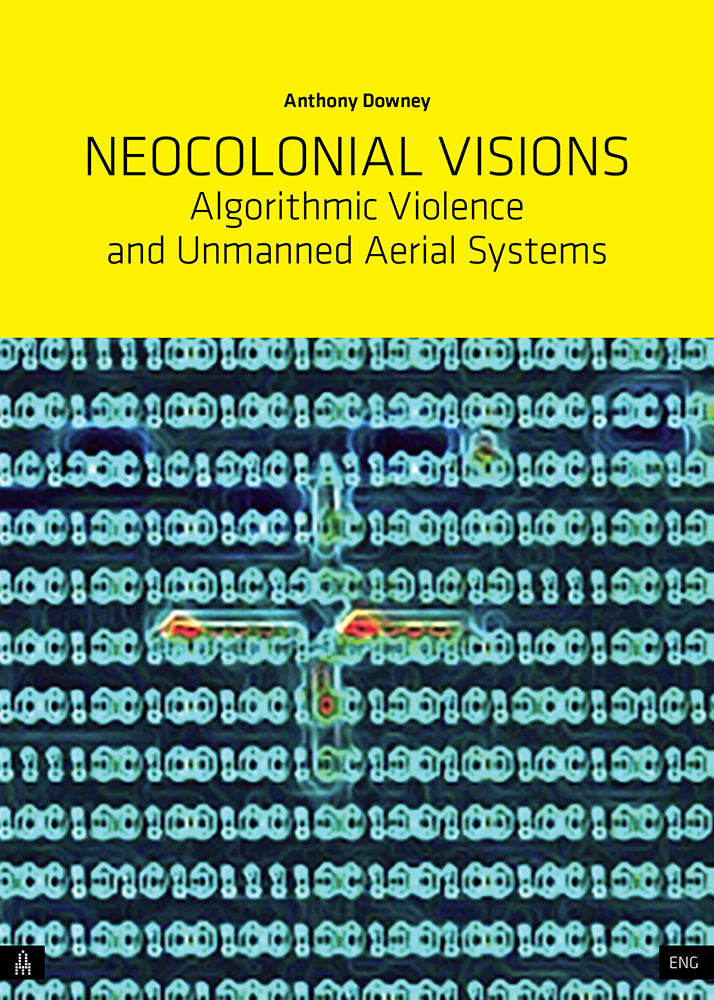

Wybory Prezydenckie 2025 Wplyw Mentzena Na Polska Polityke

May 30, 2025

Wybory Prezydenckie 2025 Wplyw Mentzena Na Polska Polityke

May 30, 2025 -

Le Pen Les Lois Et L Interpretation De Jacobelli Decryptage

May 30, 2025

Le Pen Les Lois Et L Interpretation De Jacobelli Decryptage

May 30, 2025 -

2025 Kawasaki Ninja 650 Krt Everything We Know About The Launch

May 30, 2025

2025 Kawasaki Ninja 650 Krt Everything We Know About The Launch

May 30, 2025

Latest Posts

-

Power Outages And Weather Alerts Issued Across Northeast Ohio Due To Intense Thunderstorms

May 31, 2025

Power Outages And Weather Alerts Issued Across Northeast Ohio Due To Intense Thunderstorms

May 31, 2025 -

April 29th Twins Guardians Game Progressive Field Weather And Potential Delays

May 31, 2025

April 29th Twins Guardians Game Progressive Field Weather And Potential Delays

May 31, 2025 -

Rain Possible On Election Day In Northeast Ohio

May 31, 2025

Rain Possible On Election Day In Northeast Ohio

May 31, 2025 -

Cleveland Fire Station Temporary Closure After Significant Water Leaks

May 31, 2025

Cleveland Fire Station Temporary Closure After Significant Water Leaks

May 31, 2025 -

Northeast Ohio Weather Alert Severe Thunderstorms Cause Widespread Power Outages

May 31, 2025

Northeast Ohio Weather Alert Severe Thunderstorms Cause Widespread Power Outages

May 31, 2025