The Algorithmic Influence On Mass Shooters: A Call For Corporate Responsibility

H2: The Role of Social Media Algorithms in Radicalization

Social media algorithms, designed to maximize user engagement, inadvertently create environments conducive to radicalization. Their influence on potential mass shooters is a critical concern.

H3: Echo Chambers and Filter Bubbles

Algorithms create echo chambers by prioritizing content aligning with a user's existing beliefs, regardless of its veracity. This reinforcement of extremist views isolates individuals, making them more susceptible to violent ideologies.

- Algorithms often promote extremist content through personalized recommendations.

- Lack of robust content moderation allows hate speech and misinformation to flourish.

- The spread of conspiracy theories and false narratives further fuels radicalization.

The psychological impact is profound. Constant exposure to extreme viewpoints within a closed online community can distort reality, leading to a sense of validation and justification for violence. Individuals feel increasingly alienated from mainstream society, strengthening their commitment to extremist groups and ideologies.

H3: Targeted Advertising and Recruitment

Sophisticated algorithms enable the targeted delivery of extremist propaganda and recruitment materials to vulnerable individuals. This personalized approach significantly increases the effectiveness of these malicious campaigns.

- Targeted ads promoting hate groups or violence can slip past content moderation systems.

- Tracking and regulating such ads across numerous platforms remains a significant challenge.

- The hyper-personalization of online experiences makes radical content more appealing and accessible.

Algorithms essentially personalize the radicalization process, making extremist content feel relevant and tailored to individual needs and anxieties. This targeted approach is a crucial element in the online recruitment of individuals prone to violence.

H2: The Spread of Misinformation and Conspiracy Theories

The pursuit of engagement, a core metric for social media platforms, often leads to the algorithmic amplification of false narratives and conspiracy theories. This, in turn, fuels violence by distorting perceptions of reality and normalizing extremism.

H3: Algorithmic Amplification of False Narratives

Algorithms prioritize content that generates high engagement, regardless of its truthfulness. This inadvertently elevates misinformation and conspiracy theories linked to violence, creating a breeding ground for radicalization.

- False narratives surrounding mass shootings are rapidly spread through social media.

- The speed at which misinformation proliferates online overwhelms efforts at debunking.

- The lack of fact-checking and verification contributes to the normalization of false beliefs.

"Fake news" plays a significant role in desensitizing individuals to violence, making extremist actions seem more acceptable and even justifiable within certain online communities.
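The engagement-first ranking described above can be illustrated with a minimal sketch. It is not any platform's actual ranking code: the `Post` fields, the weights in `engagement_score`, and the sample numbers are all hypothetical, chosen only to show that a ranker which never reads an accuracy label will place a high-engagement false post above an accurate one.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    likes: int
    shares: int
    comments: int
    accurate: bool  # hypothetical fact-check label; the ranker never reads it


def engagement_score(post: Post) -> float:
    # Hypothetical weights: shares and comments count for more than likes.
    return post.likes + 3.0 * post.shares + 2.0 * post.comments


def rank_feed(posts: list[Post]) -> list[Post]:
    # Sort purely by engagement, highest first. Accuracy plays no part.
    return sorted(posts, key=engagement_score, reverse=True)


# Invented sample data for illustration only.
posts = [
    Post("Verified report", likes=120, shares=10, comments=15, accurate=True),
    Post("Sensational false claim", likes=300, shares=90, comments=140, accurate=False),
]

feed = rank_feed(posts)
for post in feed:
    print(f"{engagement_score(post):7.1f}  accurate={post.accurate}  {post.title}")
```

In this toy setup the false post ranks first simply because it draws more interaction. Any fix would have to bring a signal such as `accurate` into the score, which is the kind of design choice the sections below argue platforms should be accountable for.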

H3: The Difficulty of Content Moderation

Moderating harmful content at scale is a significant challenge for tech companies. Volume, the limits of automated systems, and biases built into algorithms all get in the way.

- The sheer volume of online content makes manual moderation impractical.

- Automated systems often fail to identify subtle forms of hate speech or incitement.

- Algorithmic biases can lead to the disproportionate removal of certain types of content.

Content moderators face ethical and practical dilemmas daily, grappling with the impossible task of policing vast amounts of online content while balancing free speech concerns.

H2: The Call for Corporate Responsibility

Addressing the algorithmic influence on mass shooters requires a fundamental shift in corporate responsibility. Tech companies must move beyond reactive measures to proactive strategies that prevent radicalization and violence.

H3: Enhanced Content Moderation and Transparency

Stricter content moderation policies, greater algorithm transparency, and improved accountability mechanisms are crucial.

- AI-based content moderation systems need more sophisticated detection capabilities.

- Human oversight is essential to address the limitations of automated systems.

- Independent audits of algorithms can help ensure fairness and transparency.

Proactive measures, rather than reactive responses, are essential to prevent the spread of extremist content and the radicalization of vulnerable individuals.

H3: Investing in Mental Health and Early Intervention

Tech companies have a responsibility to invest in research and initiatives to identify and support individuals at risk of violence.

- Collaborations with mental health organizations can provide crucial resources and support.

- Early warning systems can identify individuals exhibiting warning signs of violent tendencies.

- The promotion of responsible online behavior through educational campaigns is critical.

Technology can play a positive role in mental health and violence prevention. Investing in research and developing innovative solutions is a crucial part of addressing the algorithmic influence on mass shooters.

H2: Conclusion

The algorithmic influence on mass shooters is a complex and multifaceted issue. Tech companies bear significant responsibility for mitigating the risk by addressing how their algorithms contribute to online radicalization and the spread of misinformation. Enhanced content moderation, algorithmic transparency, and investment in mental health initiatives are crucial steps toward preventing future tragedies.

We must demand greater algorithmic responsibility from tech corporations and advocate for stricter regulations to curb the spread of violent content online. Sign our petition at [link to petition] and contact your representatives to demand stronger laws on corporate accountability for online violence and better mass shooting prevention strategies. Let's work together to hold tech companies accountable and build a safer online environment.

Featured Posts

-

Bts Comeback Speculation Soars After Reunion Teaser

May 30, 2025

Bts Comeback Speculation Soars After Reunion Teaser

May 30, 2025 -

Glastonbury Festival 2024 Fans Devastated By Line Up Absence

May 30, 2025

Glastonbury Festival 2024 Fans Devastated By Line Up Absence

May 30, 2025 -

Upset In Madrid Potapova Defeats Zheng Qinwen

May 30, 2025

Upset In Madrid Potapova Defeats Zheng Qinwen

May 30, 2025 -

Manitoba Mineral Development Fund Invests 300 000 In Canadian Gold Corps Tartan Mine Project

May 30, 2025

Manitoba Mineral Development Fund Invests 300 000 In Canadian Gold Corps Tartan Mine Project

May 30, 2025 -

Charleston Open Keys Falls To Kalinskaya In Quarterfinal Clash

May 30, 2025

Charleston Open Keys Falls To Kalinskaya In Quarterfinal Clash

May 30, 2025

Latest Posts

-

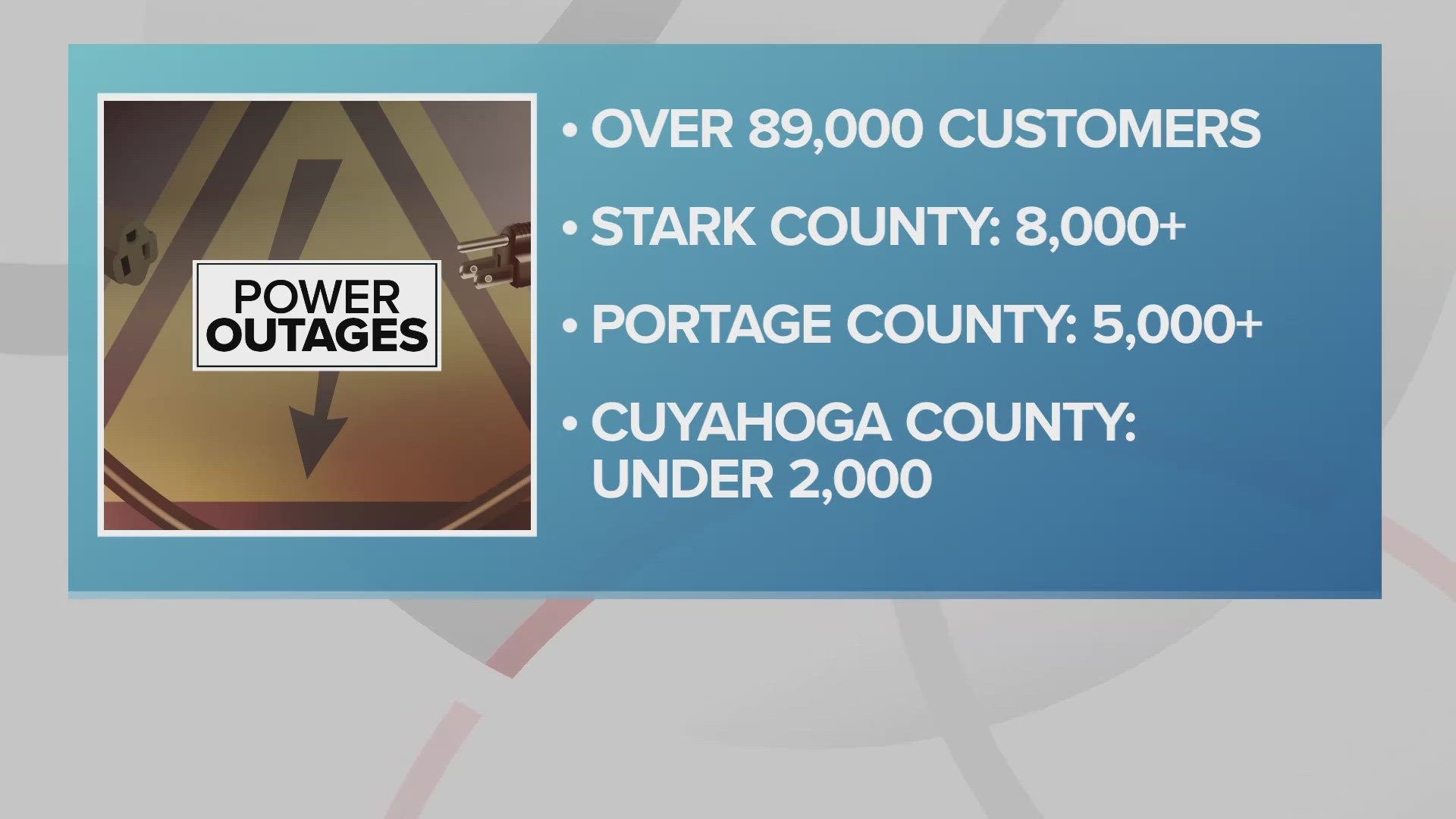

Northeast Ohio Faces Widespread Power Outages Amidst Severe Thunderstorm Warnings

May 31, 2025

Northeast Ohio Faces Widespread Power Outages Amidst Severe Thunderstorm Warnings

May 31, 2025 -

Severe Thunderstorms Bring Power Outages To Northeast Ohio Stay Safe And Informed

May 31, 2025

Severe Thunderstorms Bring Power Outages To Northeast Ohio Stay Safe And Informed

May 31, 2025 -

Power Outages And Weather Alerts Issued Across Northeast Ohio Due To Intense Thunderstorms

May 31, 2025

Power Outages And Weather Alerts Issued Across Northeast Ohio Due To Intense Thunderstorms

May 31, 2025 -

April 29th Twins Guardians Game Progressive Field Weather And Potential Delays

May 31, 2025

April 29th Twins Guardians Game Progressive Field Weather And Potential Delays

May 31, 2025 -

Rain Possible On Election Day In Northeast Ohio

May 31, 2025

Rain Possible On Election Day In Northeast Ohio

May 31, 2025