The Dark Side of AI Therapy: Surveillance and the Police State


AI therapy generally refers to the use of artificial intelligence in mental healthcare. It includes chatbot-based therapy apps that offer conversational support, AI-powered diagnostic tools that analyze symptoms, and personalized treatment recommendations generated by algorithms. Well-known examples include Woebot and Youper, and apps like these typically collect significant amounts of user data.

Data Collection and Privacy Violations in AI Therapy

AI therapy apps can collect vast amounts of personal data, raising serious concerns about privacy and data security. This data often includes detailed conversation transcripts, emotional responses, location data, and other sensitive information that reveals intimate details of a user's mental state. A lack of transparency about how this data is used and shared makes these concerns worse.

- Examples of sensitive data collected: Personal health information (PHI), including diagnoses, treatment plans, and medication details; intimate details from therapy sessions; location data revealing user movements; social media activity linked to accounts.

- Potential for data breaches and misuse: Data breaches could expose highly sensitive personal information to malicious actors, leading to identity theft, blackmail, and other serious harms. Misuse of this data by companies or even government entities is also a significant threat.

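- Lack of robust data protection regulations specifically for AI therapy: Existing regulations such as the GDPR and HIPAA may not adequately address the extensive data collection and processing that AI therapy involves. HIPAA, for example, applies to covered entities (such as healthcare providers and health plans) and their business associates. As a result, many direct-to-consumer mental health apps fall outside its scope. Specialized regulations tailored to this emerging field are urgently needed. The specific security challenges of AI systems that store and process such sensitive data are also often overlooked.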

The Potential for Algorithmic Bias and Discrimination in AI Therapy

Algorithms used in AI therapy are trained on datasets, and if these datasets are not diverse and representative, the resulting AI systems can exhibit significant biases. This algorithmic bias can lead to discriminatory outcomes in diagnoses and treatment recommendations.

- Examples of potential biases: Racial bias might lead to misdiagnosis or inadequate treatment for certain ethnic groups; gender bias could result in different treatment plans for men and women experiencing similar symptoms; socioeconomic bias might lead to overlooking mental health needs in low-income populations.

- Consequences of biased diagnoses and treatment plans: Biased AI systems can perpetuate and amplify existing societal inequalities, denying individuals access to appropriate and effective mental healthcare. This can exacerbate mental health disparities and lead to worsening outcomes.

- The need for diverse and representative datasets: To mitigate algorithmic bias, it is crucial to develop AI models trained on diverse and representative datasets that accurately reflect the population they serve. Fairness in AI is not just an ethical ideal, but a necessity for equitable access to care.

AI Therapy as a Tool for Predictive Policing and Social Control

The potential for misuse of AI therapy data extends beyond individual privacy violations. The data collected could be leveraged for predictive policing and social control, raising serious ethical questions.

- Examples of how data could be misused for surveillance: Identifying individuals deemed "at risk" based on their conversations or emotional responses, tracking their movements and interactions, and using this information for preemptive interventions. This mass surveillance could erode trust in mental healthcare.

- Potential for preemptive interventions based on flawed predictions: Predictions made by AI systems are prone to errors and biases. Preemptive interventions based on inaccurate predictions can lead to undue harassment, stigmatization, and unnecessary intrusion into people’s lives.

- Erosion of civil liberties and due process: The use of AI therapy data for predictive policing undermines fundamental principles of due process and civil liberties. It allows for surveillance and potential intervention without probable cause or a fair hearing.

The Role of Government Regulation and Corporate Responsibility

Addressing the potential harms requires a multi-pronged approach involving stricter government regulations and increased corporate responsibility.

- Suggestions for government regulations: Mandatory data anonymization, transparent data usage policies, independent audits of AI systems, and clear guidelines on the permissible uses of AI therapy data. AI regulation needs to be proactive, not reactive.

- Best practices for companies to ensure ethical AI development: Prioritizing data privacy, using diverse and representative datasets, conducting thorough bias assessments, and implementing robust data security measures. Ethical AI development must be a core principle.

- Importance of independent audits and accountability mechanisms: Regular audits of AI therapy apps are needed to ensure compliance with data protection regulations and ethical guidelines. Strong accountability mechanisms are essential to address instances of misuse or unethical practices. Corporate social responsibility should be a driving force in this space.

Conclusion: Addressing the Dark Side of AI Therapy

The potential for AI therapy to be misused for surveillance and social control is a serious concern. The unchecked collection of sensitive mental health data, coupled with the potential for algorithmic bias and a lack of robust regulation, poses a significant threat to individual privacy and civil liberties. Robust data protection, ethical AI development, and responsible government regulation are essential to mitigate these risks.

Let's work together to ensure that AI therapy remains a tool for healing, not a mechanism for surveillance and the erosion of our fundamental rights. Demand transparency and accountability in the development and deployment of AI in mental healthcare. Learn more about the risks and advocate for responsible AI therapy today!

Featured Posts

-

Padres Opening Series Details Unveiled Sycuan Casino Resort Sponsorship Announced

May 15, 2025

Padres Opening Series Details Unveiled Sycuan Casino Resort Sponsorship Announced

May 15, 2025 -

Ufc 314 Paddy Pimbletts Top Three Hit List Includes Ilia Topuria

May 15, 2025

Ufc 314 Paddy Pimbletts Top Three Hit List Includes Ilia Topuria

May 15, 2025 -

The Nhl Draft Lottery Why Fans Are Upset

May 15, 2025

The Nhl Draft Lottery Why Fans Are Upset

May 15, 2025 -

Major League Soccer Injury Report Saturdays Lineup Changes

May 15, 2025

Major League Soccer Injury Report Saturdays Lineup Changes

May 15, 2025 -

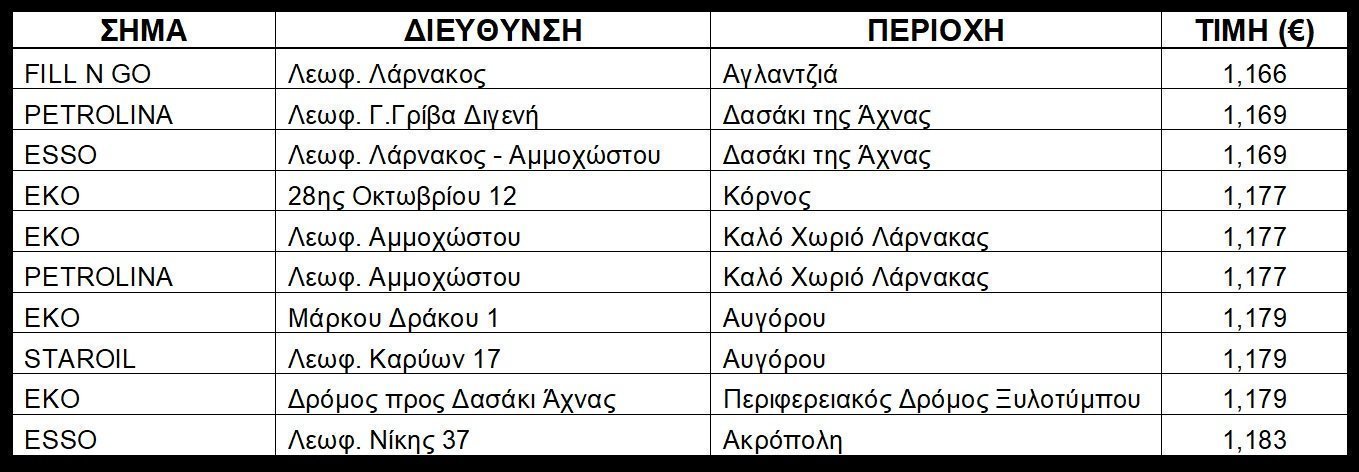

Times Kaysimon Kypros Breite Ta Fthinotera Pratiria

May 15, 2025

Times Kaysimon Kypros Breite Ta Fthinotera Pratiria

May 15, 2025

Latest Posts

-

Le Potentiel De Lane Hutson Decryptage Du Jeune Defenseur Pour La Lnh

May 15, 2025

Le Potentiel De Lane Hutson Decryptage Du Jeune Defenseur Pour La Lnh

May 15, 2025 -

Nhl Minority Owner Faces Suspension Following Online Attack And Comments On Terrorism

May 15, 2025

Nhl Minority Owner Faces Suspension Following Online Attack And Comments On Terrorism

May 15, 2025 -

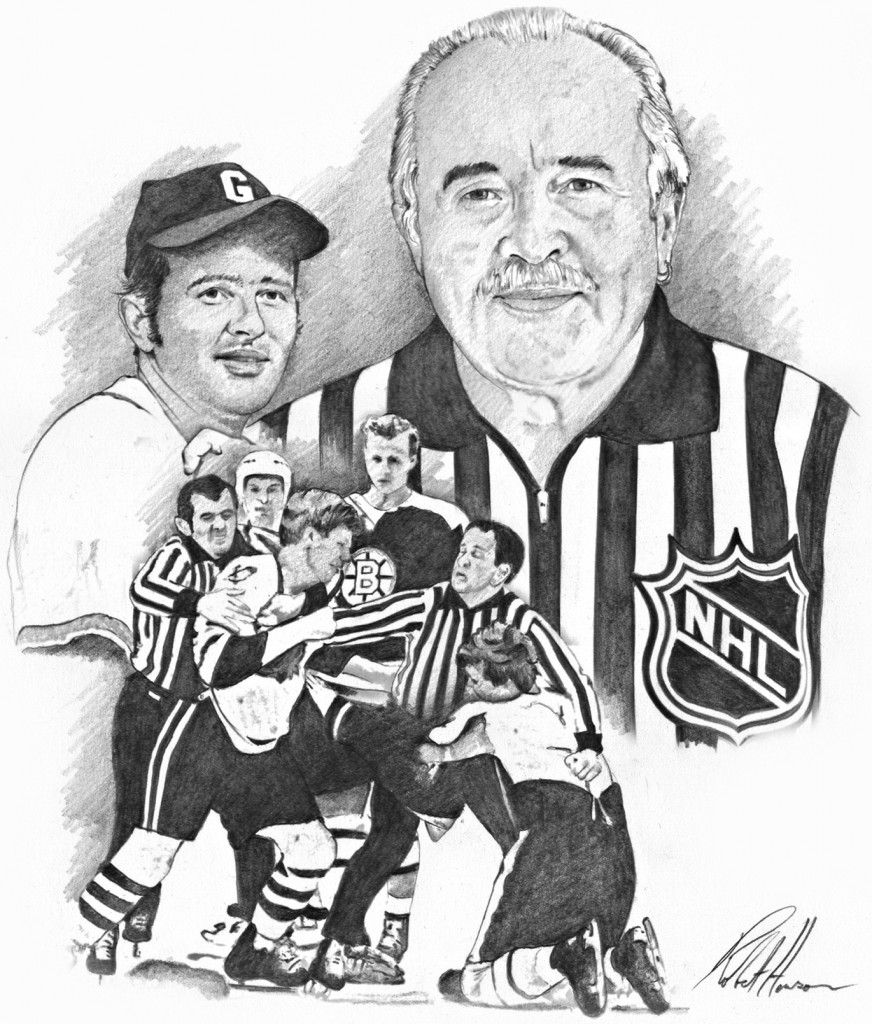

Apple Watches And Nhl Officials A New Era In Officiating

May 15, 2025

Apple Watches And Nhl Officials A New Era In Officiating

May 15, 2025 -

The Nhl Draft Lottery Why Fans Are Upset

May 15, 2025

The Nhl Draft Lottery Why Fans Are Upset

May 15, 2025 -

Peut On S Attendre A Voir Lane Hutson Comme Un Defenseur Numero 1 Dans La Lnh

May 15, 2025

Peut On S Attendre A Voir Lane Hutson Comme Un Defenseur Numero 1 Dans La Lnh

May 15, 2025