The First Amendment And AI Chatbots: Examining Character AI's Legal Position

Character AI and the Scope of Free Speech Protection

The First Amendment to the US Constitution guarantees freedom of speech, a cornerstone of American democracy. This protection extends to online platforms, though how it applies keeps evolving, particularly as new technologies emerge. A central question for AI chatbots like Character AI is whether they are themselves "speakers" under the First Amendment. The answer is far from straightforward, because determining authorship and intent in AI-generated content raises significant legal complexities.

- Definition of "speech" in the context of AI-generated text: Does AI-generated text constitute "speech" in the same way human-generated text does? The legal definition may need to be adapted to account for the unique characteristics of AI communication.

- Legal precedents concerning online platforms and content moderation: Existing case law regarding online platforms and their responsibility for user-generated content will likely influence how courts address AI-generated content. Section 230 of the Communications Decency Act, for instance, plays a significant role in this context.

- The role of algorithms and training data in shaping chatbot responses: The algorithms and training data used to develop Character AI significantly influence its responses. This raises questions about the extent to which Character AI, as a platform, is responsible for the content it generates.

Content Moderation and the First Amendment Challenges for Character AI

Character AI faces significant hurdles in moderating user-generated content and prompts. Balancing the protection of free speech against the prevention of harmful or illegal content is a major challenge. The platform must also grapple with potential liability for content generated by its chatbot, even when Character AI did not directly create or endorse that content. This calls for a robust content moderation strategy.

- Examples of problematic content generated by AI chatbots: AI chatbots can be used to generate hate speech, misinformation, and instructions for illegal activity, creating significant legal and ethical dilemmas.

- Different content moderation strategies and their legal implications: Various content moderation strategies, from reactive measures to proactive filtering, carry different legal implications concerning free speech and due process.

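- The impact of Section 230 of the Communications Decency Act on Character AI's liability: Section 230 shields online platforms from being treated as the publisher or speaker of content provided by their users, but its applicability to AI-generated content remains the subject of ongoing debate, since a chatbot's output is arguably produced by the platform itself rather than by a third party.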

The Role of User Intent and Responsibility in Character AI Interactions

The legal landscape surrounding user intent and responsibility when interacting with Character AI is murky. Can users be held accountable for illegal or harmful content generated using the platform? This question highlights the complex interplay between platform responsibility and user responsibility.

- Examples of user misuse of Character AI and potential legal consequences: Users could exploit Character AI to generate illegal content, potentially leading to legal action against both the user and the platform.

- The debate on platform responsibility versus user responsibility for generated content: The allocation of responsibility between Character AI and its users is a key area of legal uncertainty.

- Potential legal frameworks for addressing user-generated harmful content within AI chatbot platforms: New legal frameworks may be needed to address the unique challenges posed by AI-generated content and user interactions.

Comparing Character AI to Other AI Platforms and their Legal Battles

A comparison with other AI platforms facing similar legal challenges provides valuable context. For example, examining the legal battles faced by companies developing large language models can shed light on potential future challenges for Character AI. Each platform takes its own approach to content moderation and legal strategy, which can lead to different legal outcomes.

Navigating the Legal Landscape of AI Chatbots Like Character AI

Character AI, and AI chatbots in general, operate in a complex legal environment. Balancing free speech against the need for responsible content moderation is a critical and ongoing challenge. The legal landscape will continue to evolve as AI technology advances, which calls for proactive approaches to mitigating legal risk. The future legal treatment of AI will be shaped by ongoing public debate and by the precedents set in cases involving platforms like Character AI.

We urge readers to continue researching the intersection of the First Amendment, AI chatbots, and Character AI's legal position. Further exploration of relevant case law and technological developments is crucial. Share your thoughts on the evolving legal implications of AI chatbots and free speech in the comments below!

Featured Posts

-

Historic Win Zimbabwe Conquers Sylhet In Thrilling Test Match

May 23, 2025

Historic Win Zimbabwe Conquers Sylhet In Thrilling Test Match

May 23, 2025 -

Iste En Tasarruflu 3 Burc Ve Oezellikleri

May 23, 2025

Iste En Tasarruflu 3 Burc Ve Oezellikleri

May 23, 2025 -

Deciphering The Big Rig Rock Report 3 12 97 1 Double Q Results

May 23, 2025

Deciphering The Big Rig Rock Report 3 12 97 1 Double Q Results

May 23, 2025 -

Hydrogen Engine Breakthrough Cummins And Partners Celebrate Success

May 23, 2025

Hydrogen Engine Breakthrough Cummins And Partners Celebrate Success

May 23, 2025 -

Karate Kid Legends Trailer Family Honor Tradition And Legacy

May 23, 2025

Karate Kid Legends Trailer Family Honor Tradition And Legacy

May 23, 2025

Latest Posts

-

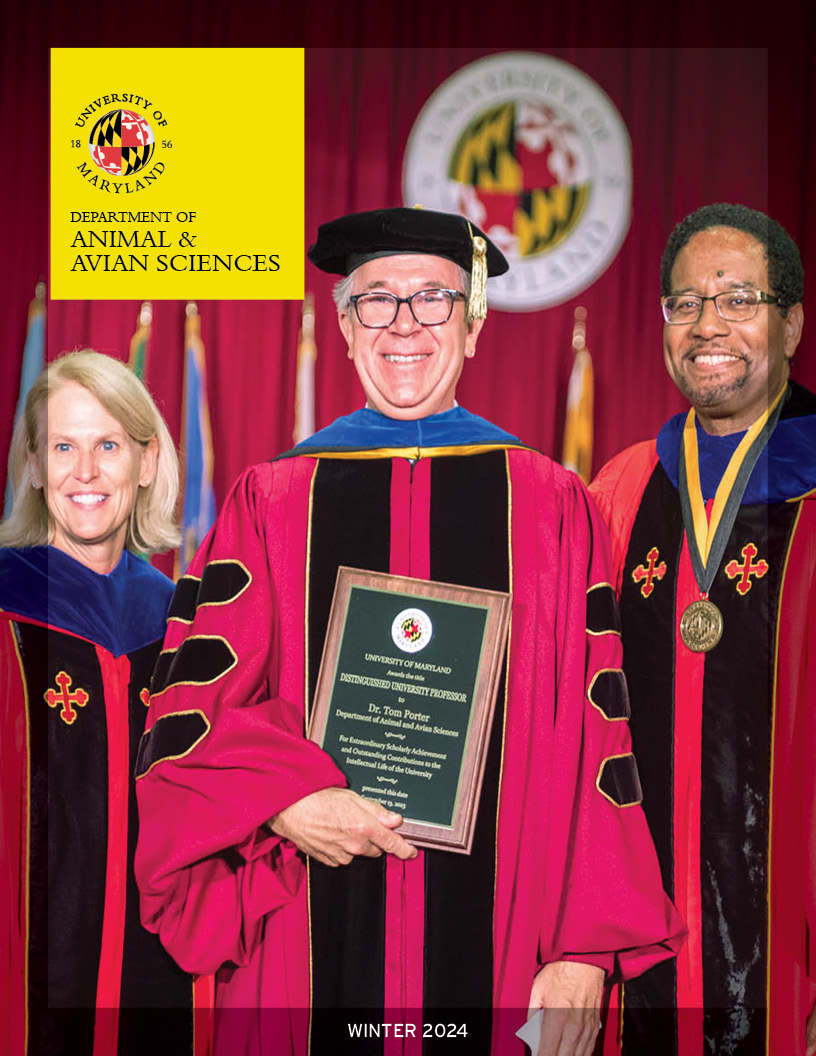

University Of Maryland Commencement Famous Amphibian To Deliver Speech

May 23, 2025

University Of Maryland Commencement Famous Amphibian To Deliver Speech

May 23, 2025 -

2025 Umd Graduation The Unexpected Kermit The Frog Appearance

May 23, 2025

2025 Umd Graduation The Unexpected Kermit The Frog Appearance

May 23, 2025 -

Umd Commencement 2025 Kermit The Frogs Unexpected Announcement

May 23, 2025

Umd Commencement 2025 Kermit The Frogs Unexpected Announcement

May 23, 2025 -

Internet Reacts Kermit The Frog As Umds 2025 Commencement Speaker

May 23, 2025

Internet Reacts Kermit The Frog As Umds 2025 Commencement Speaker

May 23, 2025 -

Umd Commencement 2025 Kermit The Frog To Address Graduates

May 23, 2025

Umd Commencement 2025 Kermit The Frog To Address Graduates

May 23, 2025