The Reality Of AI Learning: Guiding Principles For Responsible Use

Understanding the Limitations of Current AI Learning Systems

AI learning is powerful but has real limitations, and understanding them is a precondition for responsible development and deployment.

Data Bias and its Impact

AI systems learn from data, so biased data tends to produce biased outcomes. Models trained on such data can perpetuate and amplify existing societal inequalities, producing unfair or discriminatory results. This is a significant concern in high-stakes uses of AI learning, from loan approvals to criminal justice.

- Example: Some facial recognition systems have shown significantly higher error rates for people of color, which can lead to misidentification and, in turn, wrongful accusations.

- Mitigation: Addressing data bias requires a multi-pronged approach:

  - Carefully curating and auditing datasets for bias, and correcting or removing skewed data once it is found.

  - Employing diverse and representative development teams to identify and challenge biases embedded in algorithms.

  - Developing techniques to detect and mitigate bias during the training process itself.
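
One simple way to start auditing for bias is to compare a model's error rate across demographic groups. The sketch below is illustrative only: the function names, the group labels "A" and "B", and the hand-made records are assumptions, not data from any real system.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return {group: fraction of wrong predictions}.

    Each record is a (group, true_label, predicted_label) tuple.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, truth, predicted in records:
        totals[group] += 1
        if truth != predicted:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def max_error_gap(rates):
    """Largest difference in error rate between any two groups."""
    values = list(rates.values())
    return max(values) - min(values)


# Hypothetical, hand-made records: (group, true_label, predicted_label)
sample = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 1),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 0), ("B", 0, 1),
]

rates = error_rate_by_group(sample)  # {'A': 0.25, 'B': 0.75}
gap = max_error_gap(rates)           # 0.5
```

A large gap does not prove discrimination on its own, but it flags where the dataset or model deserves a closer look before deployment.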

Lack of Explainability ("Black Box" Problem)

Many AI models, particularly deep learning systems, are notoriously opaque. Their decision-making processes are often difficult or impossible to understand, leading to the "black box" problem. This lack of transparency raises concerns about accountability and trust. How can we trust a system if we don't understand how it reaches its conclusions?

- Challenge: When a model's internal workings are obscure, debugging and improving it becomes significantly harder, and identifying and correcting errors turns into a complex, time-consuming process.

- Solution: Explainable AI (XAI) is an active research field that develops methods to make AI models more transparent, for example by showing which inputs most influenced a prediction. These techniques aim to provide insight into the decision-making process, improving understanding and trust.
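
To make the idea of explainability concrete, here is a minimal sketch of permutation importance, one widely used model-agnostic technique: shuffle a single input feature and measure how much the model's accuracy drops. The `black_box` model, the feature values, and the helper names are hypothetical and chosen only for illustration.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows for which the model's output matches the label."""
    correct = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return correct / len(rows)


def permutation_importance(model, rows, labels, n_features, repeats=5, seed=0):
    """Mean accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by a shuffled value.
            shuffled = [
                row[:j] + (value,) + row[j + 1:]
                for row, value in zip(rows, column)
            ]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances


def black_box(row):
    """Hypothetical opaque model: uses feature 0 only and ignores feature 1."""
    return 1 if row[0] > 50 else 0


# Hypothetical data: feature 0 drives the decision, feature 1 is noise.
rows = list(zip([10, 90, 20, 80, 30, 70, 40, 60, 45, 55],
                [3, 1, 4, 1, 5, 9, 2, 6, 5, 3]))
labels = [black_box(row) for row in rows]

importances = permutation_importance(black_box, rows, labels, n_features=2)
```

Because the model ignores feature 1, shuffling it never changes a prediction, so its importance is zero. Shuffling feature 0 is expected to lower accuracy, which shows that the model depends on it.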

The Potential for Unintended Consequences

AI systems, even those trained on vast amounts of data, can behave in unexpected ways, leading to unforeseen negative consequences. These unintended consequences can range from minor inconveniences to significant harms.

- Example: AI-powered recruitment tools have been shown to exhibit gender bias, favoring male candidates over equally qualified female candidates.

- Mitigation: Reducing the risk of unintended consequences calls for safeguards throughout the AI lifecycle:

  - Thorough testing and rigorous evaluation before deployment are crucial.

  - Ongoing monitoring and adaptation are necessary to identify and address unexpected behaviors.

  - Human oversight and intervention should be integrated into AI systems to provide a safety net.
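
Human oversight and ongoing monitoring can be sketched as a simple routing rule: predictions below a confidence threshold go to a person, and the share of routed cases is tracked over time. The `Decision` type, the 0.9 threshold, and the sample values are assumptions for illustration. A real system would need to calibrate both the threshold and the confidence scores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    label: str
    confidence: float
    needs_human_review: bool


def route(label: str, confidence: float, threshold: float = 0.9) -> Decision:
    """Flag any prediction whose confidence falls below the threshold."""
    return Decision(label, confidence, needs_human_review=confidence < threshold)


def review_rate(decisions) -> float:
    """Share of decisions sent to a human; a rising value may signal drift."""
    if not decisions:
        return 0.0
    return sum(d.needs_human_review for d in decisions) / len(decisions)


# Hypothetical model outputs: (label, confidence)
decisions = [
    route("approve", 0.97),
    route("deny", 0.62),
    route("approve", 0.91),
    route("deny", 0.88),
]
flagged = [d for d in decisions if d.needs_human_review]
rate = review_rate(decisions)  # 0.5: two of the four decisions are flagged
```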

Ethical Considerations in AI Learning Development and Deployment

Beyond technical limitations, significant ethical considerations must be addressed in the development and deployment of AI learning systems.

Privacy and Data Security

AI learning often relies on vast amounts of personal data. This raises significant concerns about privacy violations and data breaches. Protecting sensitive information is paramount.

- Best Practices: Encrypting data, applying anonymization techniques, and complying with relevant data protection regulations (GDPR, CCPA, etc.) are essential.

- Consideration: Data minimization, meaning collecting only the data needed for a specific purpose, should be a guiding principle.
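
The two practices above can be combined in a small preprocessing step: keep only the fields a task needs and replace the direct identifier with a keyed hash. This is a sketch under stated assumptions. The field names, `ALLOWED_FIELDS`, and the demo key are hypothetical. Keyed hashing is pseudonymization rather than full anonymization, so the output may still count as personal data under regulations such as GDPR.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only attributes this task is assumed to need.
ALLOWED_FIELDS = {"age_band", "region"}


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, secret_key: bytes) -> dict:
    """Drop every field not on the allow-list and pseudonymize the email."""
    reduced = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    reduced["subject_id"] = pseudonymize(record["email"], secret_key)
    return reduced


# Hypothetical record and key; a real key would come from a secrets manager.
record = {
    "name": "Ada Example",
    "email": "ada@example.com",
    "age_band": "30-39",
    "region": "EU",
    "browsing_history": ["page-1", "page-2"],
}
key = b"demo-key-not-for-production"

reduced = minimize(record, key)
# reduced contains only age_band, region, and a 64-character subject_id
```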

Accountability and Responsibility

Determining who is responsible when an AI system makes a mistake is a crucial ethical challenge. Clear lines of accountability must be established.

- Challenge: Establishing legal frameworks for AI accountability is complex, in part because responsibility can be spread across those who supply the data, build the model, and deploy it.

- Solution: Developing robust auditing mechanisms and ethical guidelines for AI development and deployment is crucial to ensure responsibility.
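
One possible building block for an auditing mechanism is a tamper-evident log of model decisions, where each entry stores a hash of the previous one so that later edits can be detected. The sketch below is one illustrative design, not an established standard. The entry fields and function names are assumptions.

```python
import hashlib
import json


def _digest(body: dict, previous_hash: str) -> str:
    """Hash an entry body together with the hash of the preceding entry."""
    payload = json.dumps(body, sort_keys=True) + previous_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: list, model_version: str, inputs: dict, output: str) -> None:
    """Append a decision record that is chained to the previous record."""
    previous_hash = log[-1]["hash"] if log else ""
    body = {"model_version": model_version, "inputs": inputs, "output": output}
    log.append({
        "body": body,
        "previous_hash": previous_hash,
        "hash": _digest(body, previous_hash),
    })


def verify(log: list) -> bool:
    """Return True only if no entry has been altered, removed, or reordered."""
    previous_hash = ""
    for entry in log:
        if entry["previous_hash"] != previous_hash:
            return False
        if entry["hash"] != _digest(entry["body"], previous_hash):
            return False
        previous_hash = entry["hash"]
    return True


# Hypothetical decisions recorded by a hypothetical model version.
audit_log = []
append_entry(audit_log, "v1.0", {"income_band": "low"}, "deny")
append_entry(audit_log, "v1.0", {"income_band": "high"}, "approve")
intact = verify(audit_log)  # True while the log is unmodified
```

A log like this records what the system decided and with which model version. Deciding who is accountable for those decisions remains a legal and organizational question.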

Job Displacement and Economic Impact

The automation potential of AI learning raises concerns about job displacement and the need for workforce retraining and adaptation. The transition to an AI-driven economy requires careful planning and proactive measures.

- Mitigation: Investing in education and reskilling programs to prepare the workforce for the changing job market is essential.

- Consideration: Focusing on human-AI collaboration rather than complete replacement of human workers can mitigate job losses and create new opportunities.

Promoting Responsible AI Learning Through Collaboration and Regulation

Responsible AI learning requires a collaborative effort across various stakeholders.

Industry Collaboration and Best Practices

Sharing knowledge and best practices among researchers, developers, and policymakers is crucial for establishing ethical guidelines and standards.

- Benefits: Shared learnings and collaborative problem-solving foster innovation while mitigating risks.

- Example: Ethical AI frameworks developed by organizations such as the OECD provide valuable guidance for responsible AI development.

The Role of Government Regulation

Clear and effective regulations are needed to govern the development and use of AI systems, ensuring responsible innovation.

- Important aspects: Data privacy regulations, algorithmic transparency requirements, and liability frameworks are all essential components of a comprehensive regulatory approach.

- Consideration: Policymakers must strike a delicate balance between encouraging innovation, protecting consumers, and preventing the misuse of AI technologies.

Public Education and Awareness

Educating the public about the capabilities and limitations of AI learning is crucial for fostering informed discussions and responsible adoption.

- Methods: Public awareness campaigns, educational programs, and open dialogues can help foster understanding and responsible use of AI technologies.

- Goal: A public that thinks critically about AI technologies is better placed to maximize their benefits and mitigate their risks.

Conclusion

The reality of AI learning is a complex interplay of immense potential and significant challenges. By acknowledging the limitations of current systems, prioritizing ethical considerations, and fostering collaboration and regulation, we can guide the development and use of AI learning toward a future that benefits all of humanity. That requires a concerted effort from researchers, developers, policymakers, and the public. Let's work together to embrace the opportunities and mitigate the risks, so that AI learning serves humanity responsibly and ethically.

Featured Posts

-

The Doubt That Doomed Trumps Relationship With Elon Musk

May 31, 2025

The Doubt That Doomed Trumps Relationship With Elon Musk

May 31, 2025 -

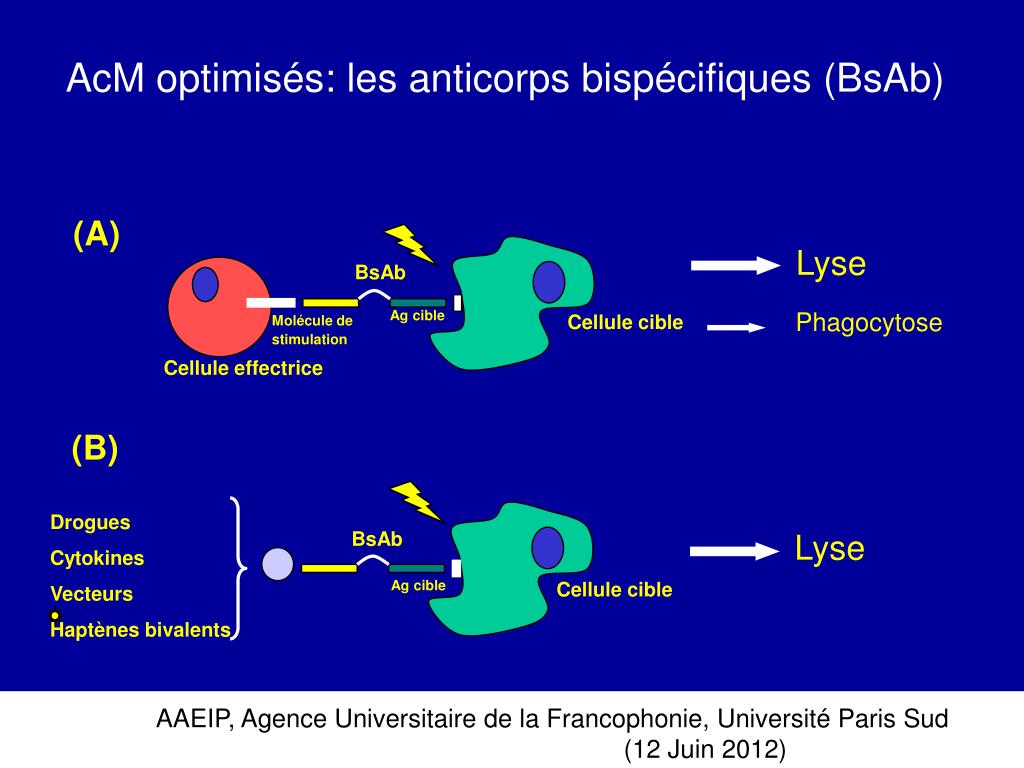

Sanofi Acquiert L Anticorps Bispecifique De Dren Bio Un Portefeuille Immunologie Renforce

May 31, 2025

Sanofi Acquiert L Anticorps Bispecifique De Dren Bio Un Portefeuille Immunologie Renforce

May 31, 2025 -

Arnarulunguaq Contribution D Une Femme Inuit A Sa Communaute

May 31, 2025

Arnarulunguaq Contribution D Une Femme Inuit A Sa Communaute

May 31, 2025 -

Chase Lees Scoreless Mlb Return Roll Call May 12 2025

May 31, 2025

Chase Lees Scoreless Mlb Return Roll Call May 12 2025

May 31, 2025 -

Are Vets Being Forced To Compromise Care For Profit A Bbc Report

May 31, 2025

Are Vets Being Forced To Compromise Care For Profit A Bbc Report

May 31, 2025