Understanding AI's Learning Constraints For Ethical And Effective Use

Data Bias and its Impact on AI's Learning Capabilities

A major constraint on AI's learning capabilities stems from data bias. AI algorithms learn from the data they are trained on, and if that data reflects existing societal biases, the resulting system is likely to perpetuate, and can even amplify, those biases. This happens because AI models, however sophisticated, are mathematical functions fitted to statistical patterns in their training data; they have no inherent understanding of fairness or ethics.

For example, facial recognition systems trained primarily on images of white faces often perform poorly on individuals with darker skin tones, leading to misidentification and potentially discriminatory outcomes. Similarly, AI systems used to assess loan applications, if trained on historical data that reflects past lending biases, might unfairly deny loans to specific demographic groups.
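One way to surface the kind of disparity described above is to break evaluation metrics down by group instead of reporting a single overall score. The sketch below is a minimal, hedged illustration in plain Python: the function names (`accuracy_by_group`, `max_accuracy_gap`) and the toy labels are hypothetical, not taken from any particular fairness toolkit.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} for parallel lists of labels, predictions, and group tags."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Hypothetical toy data: group "A" dominates the dataset, group "B" is underrepresented.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1]
groups = ["A", "A", "A", "A", "A", "B", "B", "B"]

per_group = accuracy_by_group(y_true, y_pred, groups)
print(per_group)
print(max_accuracy_gap(per_group))
```

Here the overall accuracy (6 of 8 correct, 75%) hides the fact that group "B" gets only one of its three predictions right. The same per-group pattern applies to the loan example: replacing accuracy with the approval rate per group gives a rough check of whether approvals differ across groups.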

Mitigating data bias requires a multifaceted approach. Rebalancing the dataset, whether by collecting more representative data or by augmenting underrepresented groups, and fairness-aware training methods that constrain or penalize performance gaps between groups are both important. Careful data preprocessing and rigorous dataset selection are also vital steps toward building AI systems that are less biased.
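As a minimal sketch of dataset rebalancing, the code below uses simple random oversampling: records from smaller groups are duplicated at random until every group matches the size of the largest one. This is an assumption-laden stand-in, not a full data augmentation pipeline. Duplicates add no new information and can encourage overfitting, so collecting genuinely representative data is usually preferable. The helper name `oversample_to_balance` and the record layout are hypothetical.

```python
import random
from collections import Counter, defaultdict


def oversample_to_balance(records, group_key, seed=0):
    """Duplicate randomly chosen records from smaller groups until every group
    is as large as the largest one (simple random oversampling)."""
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for record in records:
        by_group[record[group_key]].append(record)
    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)
        # Draw extra copies with replacement to close the gap to the target size.
        balanced.extend(rng.choices(members, k=target - len(members)))
    rng.shuffle(balanced)
    return balanced


# Hypothetical dataset: 6 records from group "A", 2 from group "B".
data = [{"group": "A", "x": i} for i in range(6)] + [{"group": "B", "x": i} for i in range(2)]
balanced = oversample_to_balance(data, "group")
print(Counter(r["group"] for r in balanced))
```

Oversampling only changes how often each group is seen during training; it cannot fix labels that are themselves biased, which is why it is usually paired with the preprocessing and dataset review described above.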

- Biased data leads to biased predictions. Garbage in, garbage out – a simple but powerful principle.

- Algorithmic bias can perpetuate societal inequalities. AI systems can unintentionally reinforce harmful stereotypes.

- Data preprocessing and careful dataset selection are crucial. Addressing bias starts with the data itself.

The Limitations of Current AI Architectures

Current AI architectures, primarily based on deep learning, also have inherent limitations. Two significant challenges are the lack of common-sense reasoning and the lack of explainability. Many AI models operate as "black boxes": they can produce accurate outputs without revealing how they arrived at them. This lack of transparency makes it difficult to understand why an AI system made a specific decision, which undermines trust and accountability.

The "black box" problem is particularly concerning in high-stakes applications like healthcare and criminal justice. If an AI system makes an incorrect diagnosis or wrongly predicts recidivism, understanding the reasoning behind the error is essential for improving the system and ensuring fairness.

Research in explainable AI (XAI) is actively addressing these limitations. XAI aims to make AI systems more transparent and interpretable, both by designing models whose reasoning is easier to inspect and by developing post-hoc techniques, such as feature-importance methods, that explain the outputs of existing models.
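Permutation feature importance is one model-agnostic, post-hoc explanation technique: shuffle one input feature at a time and measure how much the model's accuracy drops. A large drop suggests the model relies on that feature. The sketch below is a minimal pure-Python illustration with a hypothetical `black_box` model and synthetic data; scikit-learn offers a production version as `sklearn.inspection.permutation_importance`.

```python
import random


def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(int(model(row) == label) for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_features, n_repeats=10, seed=0):
    """Average drop in accuracy when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild rows with only feature j permuted, breaking its link to the label.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical "black box": predicts 1 when feature 0 exceeds 0.5 and ignores feature 1.
def black_box(row):
    return int(row[0] > 0.5)


data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [int(row[0] > 0.5) for row in X]

print(permutation_importance(black_box, X, y, n_features=2))
```

Because the toy model ignores feature 1, its importance comes out as exactly zero, while shuffling feature 0 cuts accuracy roughly in half. Permutation importance describes what a model relies on, not why, and it can be misleading when features are strongly correlated.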

- Current AI models often lack generalizability. They may perform well on specific tasks but fail to adapt to new situations.

- Interpretability issues hinder the understanding of AI decision-making. This lack of transparency raises concerns about trust and accountability.

- Advancements in XAI aim to improve transparency. This is crucial for building responsible and trustworthy AI systems.
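A toy illustration of the generalization problem: the sketch below fits a straight line to a hypothetical quadratic relationship using only inputs between 0 and 1, then evaluates it on inputs between 2 and 3. It uses plain least-squares regression rather than a deep network, so it is only an analogy, but it shows the general failure mode of a model that fits its training distribution well and breaks down when the data shifts.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def mean_abs_error(slope, intercept, xs, ys):
    """Average absolute difference between the line's predictions and the targets."""
    return sum(abs(slope * x + intercept - y) for x, y in zip(xs, ys)) / len(xs)


def true_fn(x):
    # Hypothetical relationship the model is trying to learn.
    return x ** 2


train_x = [i / 100 for i in range(101)]        # training inputs cover [0, 1]
shifted_x = [2 + i / 100 for i in range(101)]  # deployment inputs cover [2, 3]

slope, intercept = fit_line(train_x, [true_fn(x) for x in train_x])
in_range_error = mean_abs_error(slope, intercept, train_x, [true_fn(x) for x in train_x])
shifted_error = mean_abs_error(slope, intercept, shifted_x, [true_fn(x) for x in shifted_x])
print(f"error on training range: {in_range_error:.3f}")
print(f"error after distribution shift: {shifted_error:.3f}")
```

The error on the shifted inputs is dozens of times larger than on the training range, even though nothing about the model changed; only the data it was asked about did.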

Computational Constraints and Resource Requirements

Training and deploying advanced AI models demand substantial computational resources. Large language models and other complex AI systems require massive datasets, powerful computing hardware (like GPUs and TPUs), and significant energy consumption. This high resource demand presents challenges related to cost, accessibility, and environmental impact.
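To make the resource question concrete, a back-of-envelope estimate can be sketched with the commonly cited rule of thumb that training a dense transformer costs roughly 6 × N × D floating-point operations, where N is the parameter count and D the number of training tokens. Every input below (model size, token count, accelerator throughput, utilization, power draw, grid carbon intensity) is a hypothetical placeholder, not a measurement of any real model or data center, and the energy figure counts accelerator power only.

```python
def training_footprint(params, tokens, num_accelerators, peak_flops_per_sec,
                       utilization, watts_per_accelerator, kg_co2_per_kwh):
    """Back-of-envelope training estimate using the ~6 * N * D FLOPs rule of thumb
    for dense transformer models (forward plus backward pass)."""
    total_flops = 6 * params * tokens
    # Real hardware rarely sustains its peak rate, so scale by an assumed utilization.
    effective_flops_per_sec = num_accelerators * peak_flops_per_sec * utilization
    hours = total_flops / effective_flops_per_sec / 3600
    # Accelerator power only; cooling, CPUs, and networking are ignored here.
    energy_kwh = num_accelerators * watts_per_accelerator * hours / 1000
    co2_kg = energy_kwh * kg_co2_per_kwh
    return {"flops": total_flops, "hours": hours, "energy_kwh": energy_kwh, "co2_kg": co2_kg}


# All numbers are hypothetical placeholders chosen for illustration.
estimate = training_footprint(
    params=1e9, tokens=20e9, num_accelerators=8,
    peak_flops_per_sec=1e14, utilization=0.4,
    watts_per_accelerator=400, kg_co2_per_kwh=0.4,
)
print(f"{estimate['hours']:.0f} h, {estimate['energy_kwh']:.0f} kWh, {estimate['co2_kg']:.0f} kg CO2")
# 104 h, 333 kWh, 133 kg CO2
```

Because every term scales linearly, doubling either the model size or the training data doubles the compute, time, energy, and emissions in this estimate, which is why cost and environmental impact grow so quickly for larger models.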

The high energy consumption associated with training large AI models raises serious environmental concerns. The carbon footprint of AI is a growing area of research and needs careful consideration. Furthermore, the high cost of training and deploying these models limits accessibility, potentially exacerbating existing inequalities in access to technology.

Researchers are exploring solutions, including more energy-efficient algorithms (for example, model compression techniques such as pruning, quantization, and knowledge distillation) and more efficient hardware.

- Training large AI models requires significant computing power. This translates to high costs and energy consumption.

- High energy consumption poses environmental concerns. The carbon footprint of AI training needs to be addressed.

- Resource limitations can hinder broader AI adoption. Making AI accessible to everyone is a key challenge.

Ethical Considerations and Responsible AI Development

Understanding AI's learning constraints is vital for addressing the ethical implications of AI development and deployment. The potential for misuse and unintended consequences necessitates a strong emphasis on responsible AI practices. AI systems should be designed for fairness, accountability, and transparency. Human oversight is essential to ensure that AI aligns with human values and avoids causing harm.

Several ethical guidelines and frameworks for AI have emerged, such as the OECD AI Principles and the NIST AI Risk Management Framework, providing principles for responsible AI development and deployment. These frameworks emphasize the need for transparency, accountability, and human oversight in AI systems.

- AI systems should be designed for fairness and accountability. Bias mitigation and error analysis are crucial.

- Transparency and explainability are vital for ethical AI. Users should understand how AI systems make decisions.

- Human oversight is essential for responsible AI development. Humans must remain in the loop to ensure ethical and safe AI deployment.
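One common way to keep humans in the loop is to route uncertain or high-stakes predictions to a human reviewer instead of acting on them automatically. The sketch below assumes the model outputs a confidence score between 0 and 1; the `route` function, the 0.9 threshold, and the loan-screening cases are hypothetical. Model confidence scores are not always well calibrated, so in practice a threshold like this would need to be validated rather than chosen by hand.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    case_id: str
    label: str
    confidence: float
    needs_human_review: bool


def route(case_id, label, confidence, threshold=0.9, high_stakes=False):
    """Flag a model prediction for human review when the model is not confident
    enough, or whenever the case is marked high-stakes."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    review = high_stakes or confidence < threshold
    return Decision(case_id, label, confidence, review)


# Hypothetical predictions from a loan-screening model: (id, label, confidence, high_stakes).
predictions = [
    ("app-001", "approve", 0.97, False),
    ("app-002", "deny", 0.62, False),
    ("app-003", "deny", 0.95, True),
]
for case_id, label, conf, stakes in predictions:
    decision = route(case_id, label, conf, high_stakes=stakes)
    print(decision.case_id, "-> human review" if decision.needs_human_review else "-> automated")
```

The design choice here is that a high-stakes flag always overrides confidence: even a confident denial goes to a person, which reflects the principle that humans should stay in the loop where errors are most costly.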

Navigating the Challenges of AI Learning for a Better Future

In conclusion, AI's learning constraints (data bias, the limitations of current architectures, computational resource demands, and the ethical questions they raise) present significant challenges. By acknowledging these limitations and actively working to mitigate them through ongoing research and development, we can build AI systems that are more ethical, effective, and beneficial, and that empower people rather than exacerbate existing inequalities. To continue exploring responsible AI development, look into the latest advances in bias mitigation and transparency.

Featured Posts

-

Kontuziyata Na Grigor Dimitrov V Rolan Garos Detayli I Posleditsi

May 31, 2025

Kontuziyata Na Grigor Dimitrov V Rolan Garos Detayli I Posleditsi

May 31, 2025 -

Foire Au Jambon Bayonne 2025 Crise Financiere Et Interrogation Sur La Responsabilite Municipale

May 31, 2025

Foire Au Jambon Bayonne 2025 Crise Financiere Et Interrogation Sur La Responsabilite Municipale

May 31, 2025 -

Djokovic In Yeni Rekoru Tenis Duenyasini Sarsan Basari

May 31, 2025

Djokovic In Yeni Rekoru Tenis Duenyasini Sarsan Basari

May 31, 2025 -

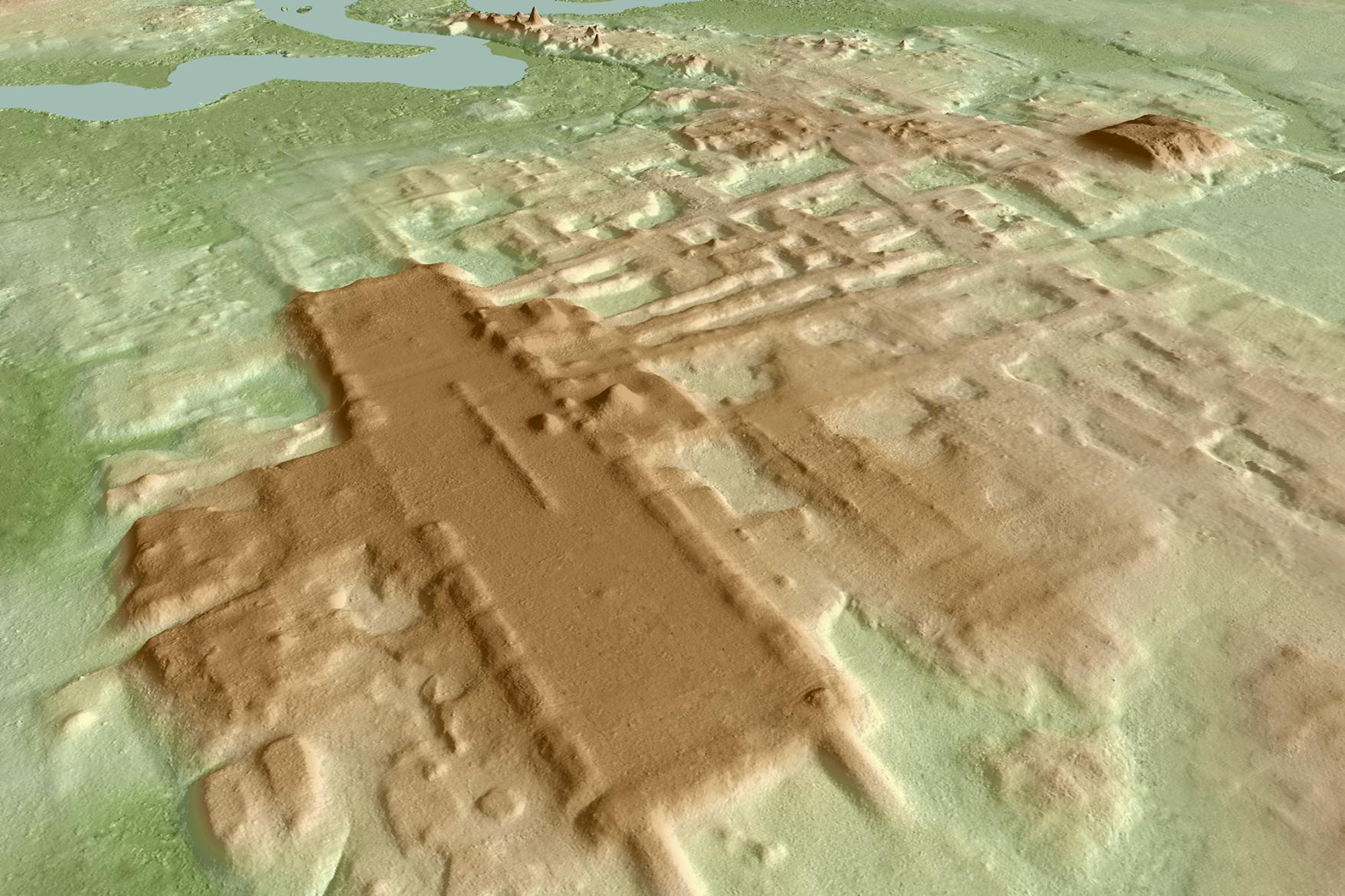

Major Archaeological Find 3 000 Year Old Mayan Pyramids And Canal System Discovered

May 31, 2025

Major Archaeological Find 3 000 Year Old Mayan Pyramids And Canal System Discovered

May 31, 2025 -

The Viral Image Separating Fact From Fiction In Donald Trumps Friendship Story

May 31, 2025

The Viral Image Separating Fact From Fiction In Donald Trumps Friendship Story

May 31, 2025