Understanding AI's Learning Limitations for Responsible Application

Data Bias and Its Impact on AI Learning

AI models learn from data, so biased data produces biased models. This is one of the most consequential limitations of how AI learns.

The Problem of Biased Datasets

AI models are trained on data, and if that data reflects existing societal biases (for example, by gender, race, or socioeconomic status), the resulting system tends to perpetuate those biases in its outputs and can even amplify them. The result can be unfair or discriminatory outcomes.

- Biased algorithms leading to unfair or discriminatory outcomes: For example, a loan-approval model trained on records that reflect historical lending bias against certain demographic groups is likely to reproduce that bias in its own decisions.

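- Difficulty in identifying and mitigating bias in complex datasets: Uncovering subtle biases embedded in large datasets is hard because many biases are implicit. A feature such as postal code, for instance, can act as a proxy for race or income even when those attributes are excluded from the data.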

- The need for diverse and representative datasets for fairer AI: Reducing bias requires carefully curated datasets that reflect the diversity of the population the system will serve.

- Examples of biased AI in loan applications, facial recognition, and recruitment: Biased outcomes have been documented in each of these areas. Facial recognition systems, for instance, have shown higher error rates for individuals with darker skin tones, which underscores the need to address data bias before such systems are deployed.
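To make the loan example concrete, here is a minimal sketch of one simple audit: measuring how often each group was approved in a set of historical records and comparing the two rates. The records, the group labels "A" and "B", and the helper names are hypothetical, and the ratio of selection rates (often called disparate impact) is only one of many possible fairness metrics, not a complete analysis.

```python
from collections import defaultdict


def approval_rates(records):
    """Fraction of approved applications per group.

    `records` is an iterable of (group, approved) pairs with `approved` a bool.
    """
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact(rates, unprivileged, privileged):
    """Ratio of selection rates; values well below 1.0 mean `privileged` is favored."""
    return rates[unprivileged] / rates[privileged]


# Hypothetical historical lending records (illustrative, not real data):
# group A was approved 60 times out of 100, group B 30 times out of 100.
history = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)

rates = approval_rates(history)
ratio = disparate_impact(rates, unprivileged="B", privileged="A")
print(rates)   # {'A': 0.6, 'B': 0.3}
print(ratio)   # 0.5
```

A model trained to imitate these labels would tend to reproduce the gap. One widely cited heuristic, the "four-fifths rule" from US employment guidelines, treats ratios below 0.8 as a warning sign, although a single ratio cannot tell you whether a gap is justified.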

Mitigating Bias in AI Training

Addressing data bias requires proactive measures throughout the AI lifecycle. This involves techniques to identify and reduce bias in both data and algorithms.

- Careful data curation and preprocessing techniques: This includes identifying and removing biased or mislabeled data points and correcting skewed representations, for example by rebalancing or reweighting under-represented groups.

- Algorithmic fairness techniques to counteract biases: Fairness constraints can be added during training, or adjustments applied to model outputs, so that outcomes such as approval rates or error rates are more comparable across groups.

- Regular auditing and monitoring of AI systems for bias detection: Ongoing monitoring is crucial to catch and address emerging biases over time.

- Transparency and explainability in AI models to understand bias origins: Understanding why an AI model makes a particular decision is vital in identifying and fixing biases.
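As one example of a data-level correction, the sketch below implements a preprocessing technique often called reweighing: each (group, label) combination receives the weight P(group) · P(label) / P(group, label), so that in the weighted data the label is statistically independent of group membership. The lending records (group A approved 60 of 100 times, group B 30 of 100) and the function names are hypothetical, chosen only to show the arithmetic.

```python
from collections import Counter, defaultdict


def reweigh(records):
    """Weight each (group, label) pair by P(group) * P(label) / P(group, label).

    With these weights, the label is statistically independent of the group
    in the weighted data.
    """
    n = len(records)
    group_counts = Counter(group for group, _ in records)
    label_counts = Counter(label for _, label in records)
    joint_counts = Counter(records)
    # Count expected under independence divided by the observed count.
    return {
        (group, label): group_counts[group] * label_counts[label] / (n * count)
        for (group, label), count in joint_counts.items()
    }


def weighted_approval_rates(records, weights):
    """Approval rate per group when each record counts with its weight."""
    approved = defaultdict(float)
    total = defaultdict(float)
    for group, label in records:
        w = weights[(group, label)]
        total[group] += w
        if label:
            approved[group] += w
    return {group: approved[group] / total[group] for group in total}


# Hypothetical lending records: (group, approved). A: 60/100 approved, B: 30/100.
history = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)

weights = reweigh(history)
balanced = weighted_approval_rates(history, weights)
print(weights)   # approved A records get 0.75, approved B records get 1.5
print(balanced)  # both groups come out at (approximately) 0.45
```

The weights would then be passed to a training routine that accepts per-example weights. Reweighing only balances the label rates recorded in the data; it does not fix mislabeled examples or proxy features, so it complements rather than replaces regular auditing and monitoring.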

Limited Generalizability and Transfer Learning Challenges

Another significant AI learning limitation is the challenge of generalizability. AI models often struggle to adapt their knowledge to new situations.

The Narrow Scope of AI Expertise

Most AI systems excel at specific, narrowly defined tasks, but generalizing what they have learned to new, unseen situations remains a significant challenge.

- Overfitting to training data, leading to poor performance on new data: Models can become overly specialized to the training data and fail to generalize to new, slightly different data.

- Difficulty in transferring knowledge learned in one context to another: Knowledge acquired in one domain often does not easily transfer to another, limiting the versatility of the AI.

- The need for robust and adaptable AI models capable of handling novel situations: Future AI development requires models that can learn and adapt to changing conditions.

- Examples of AI systems failing when presented with data outside their training distribution: Self-driving cars, for instance, might struggle with unexpected weather conditions or unusual traffic scenarios.
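A toy version of this failure: fit a straight line to data generated by y = x², observed only for x between 0 and 1, then evaluate it on new points inside that range and on points between 2 and 3. The quadratic ground truth and all names below are illustrative assumptions; the point is that a model can look accurate on data resembling its training set and still fail badly outside it.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def mse(model, xs, ys):
    """Mean squared error of a (slope, intercept) model on the given points."""
    slope, intercept = model
    return sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def true_relationship(x):
    # Hypothetical ground truth that a straight line cannot represent exactly.
    return x * x


# Training data covers only the interval [0, 1].
train_x = [i / 10 for i in range(11)]
model = fit_line(train_x, [true_relationship(x) for x in train_x])

# Unseen points inside the training range vs. points well outside it.
in_range_x = [0.05 + i / 10 for i in range(10)]
out_of_range_x = [2.0, 2.5, 3.0]

in_range_mse = mse(model, in_range_x, [true_relationship(x) for x in in_range_x])
out_of_range_mse = mse(model, out_of_range_x, [true_relationship(x) for x in out_of_range_x])
print(f"in-range MSE:     {in_range_mse:.4f}")
print(f"out-of-range MSE: {out_of_range_mse:.2f}")
```

Here the in-range error is roughly 0.006 while the out-of-range error is above 19: nothing in the training data signaled that the straight-line approximation would break down once inputs moved outside the range it was trained on.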

Improving Generalization Through Advanced Techniques

Researchers are actively exploring several ways to improve how well AI models generalize.

- Transfer learning to leverage knowledge from related tasks: Applying knowledge learned in one task to a similar task can improve performance and efficiency.

- Domain adaptation to adjust models for new domains: Adapting models to new domains with minimal retraining improves their versatility.

- Meta-learning to learn how to learn more effectively: This approach focuses on teaching AI systems how to learn more efficiently and adapt more quickly to new tasks.

- Reinforcement learning to enable AI to learn through trial and error in dynamic environments: The system learns from the rewards or penalties that follow its own actions, which lets it adapt in complex settings, whether simulated or real.
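Transfer learning can be illustrated at toy scale by warm-starting: pretrain a model on a related source task, then use its parameters as the starting point for a new target task. The sketch below assumes two hypothetical linear tasks that share a slope but differ in intercept, and plain gradient descent with a deliberately tiny budget on the target task. It illustrates the idea only; it is not how transfer learning is implemented for large neural networks.

```python
def make_task(slope, intercept):
    """Hypothetical regression task: y = slope * x + intercept on 11 points in [0, 1]."""
    xs = [i / 10 for i in range(11)]
    return xs, [slope * x + intercept for x in xs]


def mse(w, b, xs, ys):
    """Mean squared error of the linear model y = w * x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(xs, ys, w=0.0, b=0.0, lr=0.1, steps=100):
    """Plain gradient descent on mean squared error, starting from (w, b)."""
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


source_x, source_y = make_task(2.0, 1.0)  # hypothetical related task with ample training
target_x, target_y = make_task(2.0, 1.5)  # hypothetical new task with a tight budget

# Pretrain on the source task until (w, b) has essentially converged.
w_src, b_src = train(source_x, source_y, steps=2000)

budget = 5  # only a handful of updates allowed on the target task
cold_loss = mse(*train(target_x, target_y, steps=budget), target_x, target_y)
warm_loss = mse(*train(target_x, target_y, w=w_src, b=b_src, steps=budget), target_x, target_y)
print(f"from scratch: {cold_loss:.3f}")
print(f"warm-started: {warm_loss:.3f}")
```

With the same five updates, the warm-started model reaches a target error of about 0.017, compared with about 0.42 when starting from zero. The benefit depends on the tasks being related; pretraining on an unrelated source task could just as easily start the model in a worse place.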

The Explainability Gap and Lack of Transparency in AI Decision-Making

The lack of transparency in many AI systems is a major AI learning limitation.

The "Black Box" Problem

Many sophisticated AI models, particularly deep learning systems, function as black boxes, making it difficult to understand their decision-making processes.

- Difficulty in debugging and identifying errors in complex models: Understanding why an AI made a specific error is difficult in opaque models.

- Lack of trust and accountability when AI decisions have significant consequences: Without understanding the reasoning behind a decision, it is difficult to justify it, contest it, or assign responsibility when it causes harm.

- The need for explainable AI (XAI) techniques to improve transparency: XAI aims to open the "black box" and make the decision-making process more understandable.

- The importance of interpretability for building trust and ensuring responsible AI usage: Transparency is crucial for building public trust and acceptance of AI systems.

Striving for Explainable AI

Research in explainable AI aims to make AI models more transparent and understandable, addressing this crucial AI learning limitation.

- Development of methods to visualize and interpret model decisions: Visualizations, such as saliency maps that highlight the parts of an input that most influenced a prediction, can help reveal how complex models work internally.

- Use of simpler, more interpretable models where appropriate: Simpler models, while potentially less powerful, are often more transparent.

- Techniques for generating explanations in a human-understandable format: Explanations should be clear and concise, even for non-experts.

- The ongoing challenge of balancing explainability with model performance: There is often a trade-off between a model's accuracy and its explainability.
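Permutation feature importance is one model-agnostic way to interpret a black-box model: shuffle the values of one input feature across the dataset and measure how much the model's error grows. The sketch below is written from scratch under simple assumptions. `black_box_predict` is a hypothetical stand-in for an opaque model, and the data are synthetic. It is not the API of any particular library (scikit-learn, for instance, provides its own `permutation_importance` in `sklearn.inspection`).

```python
import random


def black_box_predict(row):
    # Hypothetical opaque model: callers can query it but not inspect it.
    # Internally it depends only on feature 0.
    return 3.0 * row[0] + 0.0 * row[1]


def mse(predict, rows, targets):
    return sum((predict(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(predict, rows, targets, n_repeats=5, seed=0):
    """Average increase in error when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = mse(predict, rows, targets)
    importances = []
    for j in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Replace feature j with its shuffled values, keeping other features intact.
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            increases.append(mse(predict, shuffled, targets) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


# Synthetic data: two uniform features; the target depends only on feature 0.
data_rng = random.Random(42)
rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
targets = [3.0 * r[0] for r in rows]

importances = permutation_importance(black_box_predict, rows, targets)
print(importances)
```

Because the hypothetical model ignores feature 1, shuffling it leaves every prediction unchanged and its importance is exactly 0.0, while shuffling feature 0 raises the error substantially (to roughly 1.5 here). Permutation importance summarizes a model's overall behavior rather than explaining an individual decision, and correlated features can share or mask each other's importance.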

Conclusion

Understanding AI's learning limitations – encompassing data bias, limited generalizability, and the explainability gap – is crucial for responsible AI application. By acknowledging these limitations and actively working to mitigate them through robust data handling, advanced learning techniques, and the development of explainable AI, we can harness the power of AI while minimizing potential risks and ensuring its ethical and beneficial deployment. Addressing these AI learning limitations is not merely a technical challenge; it's a shared responsibility for building a future where AI serves humanity responsibly. Let's collaborate to develop and deploy AI solutions that are both powerful and ethical.

Featured Posts

-

Essex Bannatyne Health Club Padel Court Proposal Unveiled

May 31, 2025

Essex Bannatyne Health Club Padel Court Proposal Unveiled

May 31, 2025 -

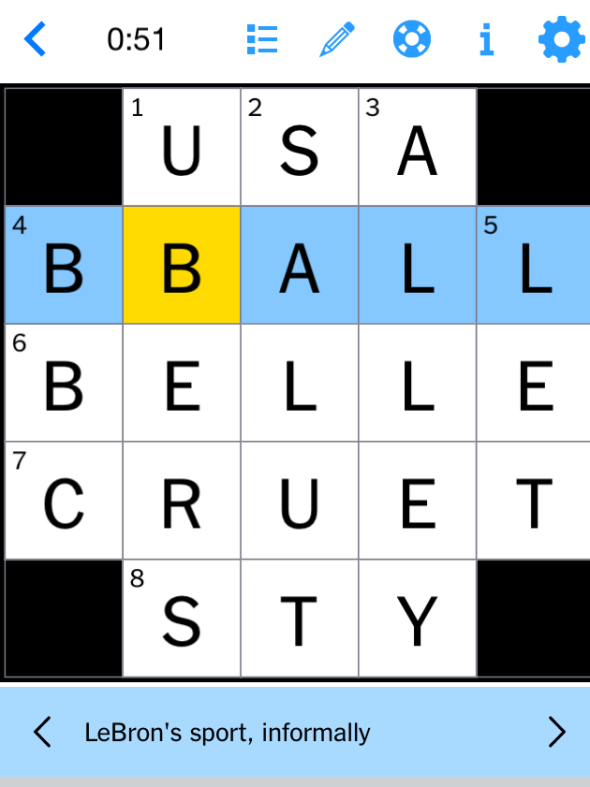

Nyt Mini Crossword Clues And Answers Wednesday April 9th

May 31, 2025

Nyt Mini Crossword Clues And Answers Wednesday April 9th

May 31, 2025 -

Rising Pet Vet Bills In The Uk The Role Of Corporate Veterinary Structures

May 31, 2025

Rising Pet Vet Bills In The Uk The Role Of Corporate Veterinary Structures

May 31, 2025 -

Nyt Mini Crossword Solutions May 13 2025

May 31, 2025

Nyt Mini Crossword Solutions May 13 2025

May 31, 2025 -

Receta De Lasana De Calabacin De Pablo Ojeda Facil Y Deliciosa Mas Vale Tarde

May 31, 2025

Receta De Lasana De Calabacin De Pablo Ojeda Facil Y Deliciosa Mas Vale Tarde

May 31, 2025