Character AI Chatbots And Free Speech: A Legal Grey Area

The First Amendment and AI-Generated Content

AI chatbots like Character AI pose a significant challenge to established legal frameworks around free speech. Whether the First Amendment, or equivalent protections in other jurisdictions, applies to AI-generated content is a critical question that remains largely unanswered.

Defining "Speech" in the Age of AI

Does the First Amendment protect AI-generated content? The legal framework surrounding free speech was primarily established long before the advent of AI chatbots, creating considerable uncertainty in this novel context.

- Authorship and Intent: A central question revolves around authorship and intent. Who is responsible for the content generated by a Character AI chatbot – the user prompting the AI, the developers who created the algorithm, or the AI itself? Current legal frameworks struggle to assign responsibility in this scenario.

- Adapting Legal Precedents: Existing legal precedents primarily focus on human expression, making their application to AI-generated content challenging. The absence of human intent and the algorithmic nature of AI responses raise significant questions about the applicability of existing laws.

- Circumventing Existing Laws: The potential for malicious actors to use AI chatbots to circumvent existing laws related to defamation, hate speech, or incitement to violence is a serious concern. The ability of AI to generate large volumes of harmful content quickly necessitates a reevaluation of legal frameworks.

Content Moderation and Censorship Concerns

Character AI platforms face a considerable challenge in balancing free expression with the need to prevent the generation and dissemination of harmful content. This balancing act raises significant concerns about potential censorship and the limitations placed on AI-generated speech.

The Dilemma of Balancing Free Expression and Harmful Content

How do platforms like Character AI determine what constitutes "harmful" content in the context of AI-generated responses? The subjective nature of this judgment, coupled with the potential for bias in algorithms, creates a difficult and potentially precarious situation.

- Subjectivity in Defining Harm: Defining what constitutes harmful content is inherently subjective and culturally influenced. Establishing clear guidelines for content moderation is crucial, yet exceedingly difficult to achieve in a universally acceptable manner.

- Algorithmic Bias and Censorship: Content moderation systems rely on algorithms that can inherit and amplify existing societal biases. This can lead to disproportionate censorship of certain viewpoints or groups, exacerbating existing inequalities.

- Over-Moderation and the Chilling Effect: Overly aggressive content moderation can create a "chilling effect," discouraging users from engaging with the platform for fear of their content being unfairly removed or their accounts being suspended. This undermines the very principles of free expression the platform aims to protect.

Legal Liability and Accountability for AI-Generated Harm

If a Character AI chatbot generates defamatory or otherwise harmful content, who is legally responsible? Liability remains a significant legal grey area.

Determining Responsibility for Defamation or Other Legal Wrongs

Establishing legal responsibility for AI-generated harm is fraught with complexity. The decentralized nature of AI development and deployment makes assigning accountability challenging.

- Accountability for Developers and Users: Legal avenues for holding Character AI developers, users, or both accountable for harmful content need to be clearly defined. This requires a careful consideration of negligence, intent, and the level of control each party exerts over the AI's output.

- Proving Causation: Establishing a clear causal link between the AI's output and any subsequent harm is crucial for legal action. This can be particularly challenging given the unpredictable nature of AI and the potential for multiple contributing factors.

- Legal Precedents from Other Areas: Examination of legal precedents from other areas of technology law, such as those governing online platforms and social media, may offer guidance, but significant adaptation will be necessary to address the unique aspects of AI-generated content.

The Role of Data Privacy in Character AI Conversations

The use of Character AI chatbots raises important data privacy considerations. The data used to train and operate these AI models, as well as the data generated through user interactions, must be handled responsibly and in compliance with relevant data protection laws.

- Data Used to Train AI Models: Examining the privacy implications of the data used to train Character AI chatbots is crucial. This data may include personal information obtained from various sources, and its use must comply with applicable privacy regulations.

- User Data Protection: Laws such as the GDPR and the CCPA protect users' personal data. How those protections apply when that data is used to power AI models must be carefully considered.

- Risks Associated with Data Collection: Potential risks associated with the collection and use of personal information through Character AI interactions, including the risk of data breaches and misuse, necessitate strong security measures and transparent data handling practices.

Conclusion

Character AI chatbots sit at the intersection of technological advancement and fundamental legal principles. The legal grey area surrounding free speech, content moderation, and liability calls for careful consideration and the proactive development of appropriate regulation. Navigating this landscape will require collaboration between lawmakers, technology developers, and users to find a balance that protects freedom of expression while mitigating the potential harms of AI-generated content. Understanding the legal implications of Character AI and similar technologies is essential for users and developers alike, and further exploration is needed to ensure responsible innovation and prevent misuse.

Featured Posts

-

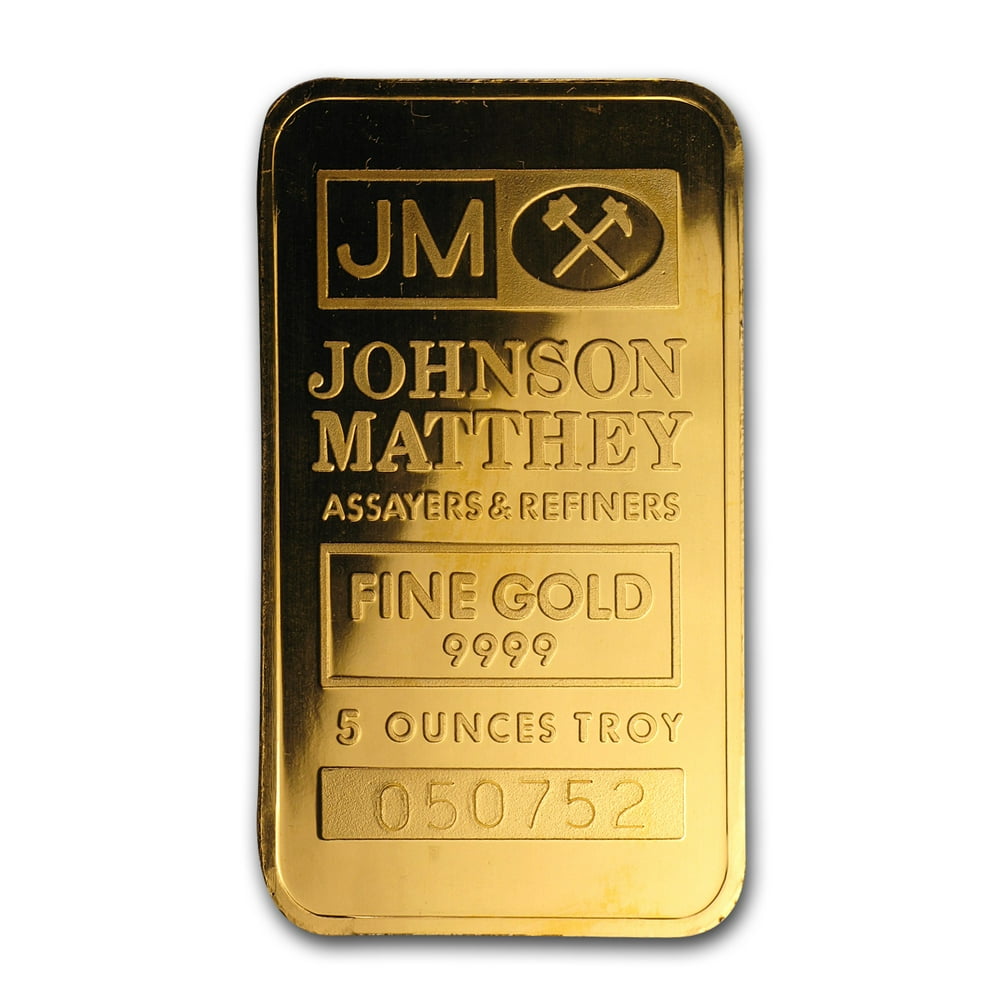

Honeywell Poised For Major Acquisition 1 8 Billion Johnson Matthey Deal

May 23, 2025

Honeywell Poised For Major Acquisition 1 8 Billion Johnson Matthey Deal

May 23, 2025 -

Post Revision House Passes Trump Tax Bill

May 23, 2025

Post Revision House Passes Trump Tax Bill

May 23, 2025 -

Freddie Flintoffs Post Crash Recovery One Month Off The Road At Home

May 23, 2025

Freddie Flintoffs Post Crash Recovery One Month Off The Road At Home

May 23, 2025 -

The Accessibility Crisis In The Video Game Industry

May 23, 2025

The Accessibility Crisis In The Video Game Industry

May 23, 2025 -

Memorial Day Gas Prices A Decade Low Predicted

May 23, 2025

Memorial Day Gas Prices A Decade Low Predicted

May 23, 2025

Latest Posts

-

Forbes Top Picks Memorial Day Appliance Sales 2025

May 23, 2025

Forbes Top Picks Memorial Day Appliance Sales 2025

May 23, 2025 -

The Last Rodeo Neal Mc Donoughs Unexpected Challenge

May 23, 2025

The Last Rodeo Neal Mc Donoughs Unexpected Challenge

May 23, 2025 -

Actor Neal Mc Donough Tackles Pro Bull Riding In New Film

May 23, 2025

Actor Neal Mc Donough Tackles Pro Bull Riding In New Film

May 23, 2025 -

Memorial Day 2025 The Ultimate Guide To The Best Sales And Deals

May 23, 2025

Memorial Day 2025 The Ultimate Guide To The Best Sales And Deals

May 23, 2025 -

Damien Darhks Power Could He Take Down Superman An Exclusive Interview With Neal Mc Donough

May 23, 2025

Damien Darhks Power Could He Take Down Superman An Exclusive Interview With Neal Mc Donough

May 23, 2025