Improving Siri With Large Language Models: Apple's Plan

The Current Limitations of Siri

Siri, while functional, lags behind competitors like Google Assistant and Amazon Alexa in several key areas. Its strengths lie primarily in its tight integration with the Apple ecosystem and its reliable handling of basic commands. However, four weaknesses hold it back:

- Limited contextual understanding: Siri often struggles to understand the context of a conversation, leading to inaccurate or irrelevant responses. A simple follow-up question can easily derail the interaction.

- Difficulty handling complex or nuanced requests: Multi-step instructions or requests requiring sophisticated reasoning often confuse Siri. Planning a complex trip or making a nuanced comparison between products can prove frustrating.

- Less sophisticated natural language processing (NLP) compared to LLMs: Siri's NLP is less advanced than that of LLM-powered assistants. This limits its ability to interpret ambiguous phrasing, slang, and colloquialisms accurately.

- Dependence on pre-programmed commands versus genuine understanding: Siri relies heavily on pre-programmed commands and keywords, rather than a true understanding of the user's intent. This limits its flexibility and adaptability.

These limitations significantly impact user experience, leading to frustration and a reliance on alternative methods for completing tasks. For example, trying to get Siri to understand a complex search query often results in a less satisfactory outcome than using a search engine directly. The need for a more intuitive and powerful virtual assistant is clear.
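To make the keyword-dependence problem concrete, here is a minimal sketch of a keyword-driven command matcher. It is purely illustrative and is not Siri's actual implementation: the `COMMANDS` table, the action names, and `match_command` are all hypothetical. It shows how a request that names the keyword succeeds while a paraphrase with the same intent falls through.

```python
from typing import Optional

# Hypothetical keyword -> action table (an assumption for illustration only).
COMMANDS = {
    "timer": "start_timer",
    "weather": "show_weather",
    "call": "place_call",
}


def match_command(utterance: str) -> Optional[str]:
    """Return the action for the first known keyword in the utterance, else None."""
    words = utterance.lower().split()
    for keyword, action in COMMANDS.items():
        if keyword in words:
            return action
    # No keyword found: the matcher has no notion of the user's intent.
    return None
```

With this sketch, "Set a timer for ten minutes" maps to `start_timer`, but "Remind me when ten minutes have passed" returns `None`, even though both express the same goal.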

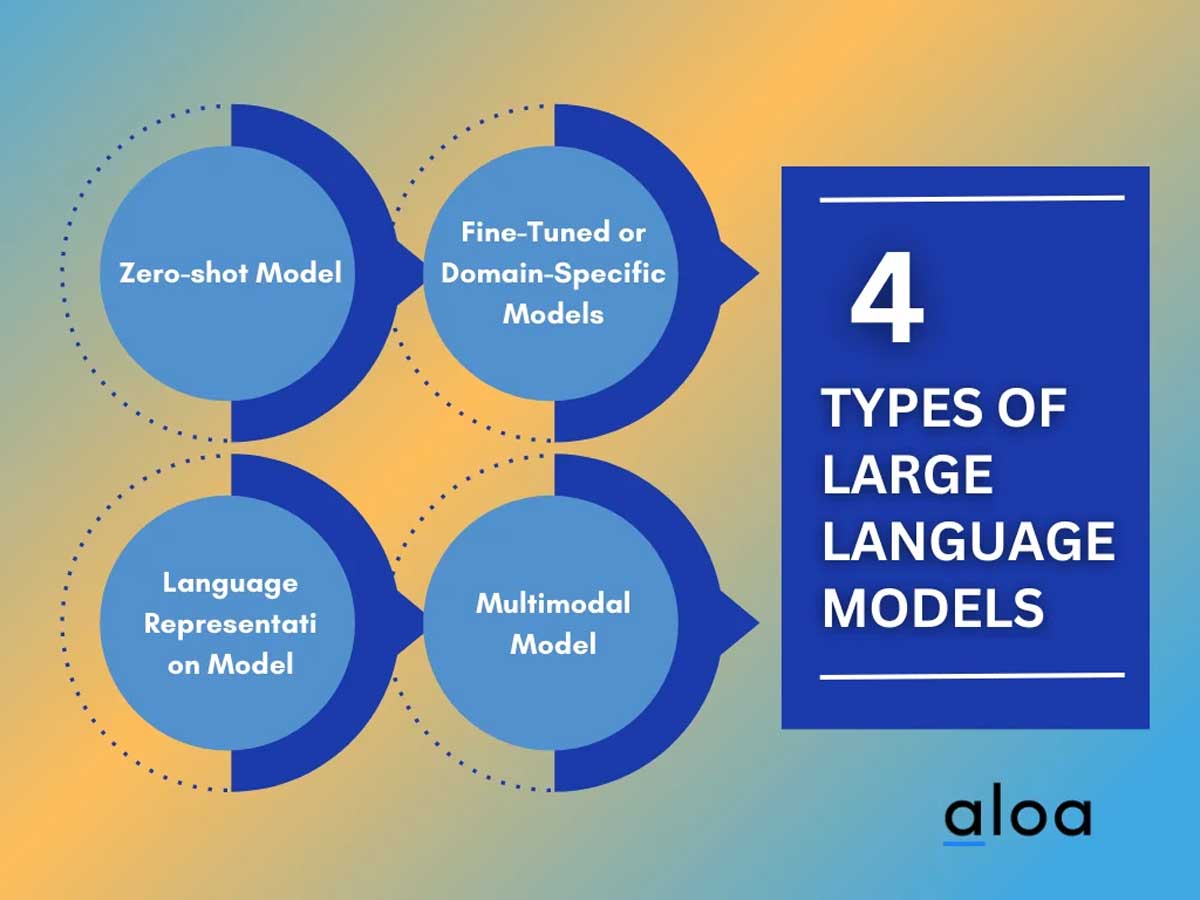

How LLMs Can Revolutionize Siri

Large language models offer a significant opportunity to revolutionize Siri's capabilities, addressing many of its current shortcomings.

Enhanced Natural Language Understanding

LLMs could dramatically improve Siri's NLP capabilities. Trained on massive datasets of text and code, they pick up the nuances of human language, which translates into:

- Improved accuracy in interpreting complex sentences and questions: LLMs can better parse complex grammatical structures and understand the underlying meaning, even with ambiguous phrasing.

- Better handling of slang, colloquialisms, and regional dialects: LLMs can be trained to understand a wider range of language variations, leading to more inclusive and accurate interpretations.

- More robust ability to understand context and maintain conversational flow: LLMs excel at maintaining context across multiple turns of a conversation, allowing for more natural and fluid interactions.

This improved understanding forms the foundation for a significantly more powerful and user-friendly Siri.

More Contextual and Personalized Responses

LLMs enable Siri to access and process vast amounts of data to deliver more relevant and personalized responses. This includes:

- Access to user data for tailored recommendations and information: Siri can leverage user data from various Apple services to provide personalized recommendations, reminders, and information.

- Integration with other Apple services (e.g., Calendar, Music) for richer context: Seamless integration with other Apple services provides richer context for Siri's responses, making them more relevant and helpful.

- Adaptive learning based on user interactions to improve accuracy over time: LLMs can learn from user interactions, continuously improving accuracy and personalization over time.

This personalized approach will transform Siri from a simple assistant into a proactive and intuitive partner.

Improved Multi-Turn Conversations

LLMs are key to facilitating more natural and fluid multi-turn conversations. This means:

- Improved memory of previous interactions: Siri will be able to remember previous interactions within a conversation, enabling more coherent and meaningful exchanges.

- Ability to handle follow-up questions and clarify ambiguous requests: Because an LLM keeps the earlier turns in view, it can resolve a follow-up such as "And tomorrow?" against the previous question, or ask for clarification when a request is ambiguous.

- More engaging and human-like conversational experience: The enhanced understanding and context awareness will lead to a more engaging and human-like conversational experience.

This ability to maintain context and engage in meaningful dialogue will significantly enhance user satisfaction.
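One common way to give a language model this kind of conversational memory is to keep a rolling window of recent turns and prepend it to each new request. The sketch below assumes that approach; `Turn`, `ConversationMemory`, and the prompt format are hypothetical names chosen for illustration, and nothing here reflects how Apple actually builds Siri.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str  # "user" or "assistant"
    text: str


class ConversationMemory:
    """Keeps only the most recent turns so the prompt stays bounded in size."""

    def __init__(self, max_turns: int = 6) -> None:
        # A deque with maxlen silently drops the oldest turn when full.
        self._turns = deque(maxlen=max_turns)

    def add(self, speaker: str, text: str) -> None:
        self._turns.append(Turn(speaker, text))

    def build_prompt(self, new_request: str) -> str:
        """Combine remembered turns with the new request into one prompt string."""
        lines = [f"{t.speaker}: {t.text}" for t in self._turns]
        lines.append(f"user: {new_request}")
        return "\n".join(lines)
```

A follow-up like "What about tomorrow?" is then sent together with the preceding weather question, so the model has what it needs to resolve the reference. The window size is a trade-off: a larger window preserves more context but makes every request longer and more expensive to process.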

Challenges and Considerations for Apple

While the potential benefits are immense, Apple faces several challenges in integrating LLMs into Siri:

Privacy Concerns

The use of LLMs raises significant privacy concerns. Apple must address these concerns proactively:

- Data security and encryption: Robust security measures are essential to protect user data from unauthorized access.

- Transparency regarding data collection and usage: Clear and transparent communication about data collection and usage practices is crucial to build user trust.

- Compliance with privacy regulations (e.g., GDPR, CCPA): Adherence to relevant privacy regulations is paramount.

Addressing these concerns is critical for maintaining user trust and ensuring responsible innovation.

Computational Resources

Training and deploying LLMs require significant computational resources:

- Need for powerful hardware and infrastructure: Both training LLMs and serving them to Siri's user base call for substantial compute capacity.

- Energy efficiency considerations for large-scale model deployment: Running a large model for every request consumes considerable power, so efficiency matters at deployment scale.

Apple's resources are considerable, but managing these demands effectively is a key challenge.

Integration with Existing Siri Infrastructure

Seamlessly integrating LLMs into the existing Siri architecture without disrupting functionality is a significant undertaking:

- Compatibility with current devices and operating systems: Ensuring compatibility with a wide range of devices and operating systems is essential.

- Maintaining responsiveness and speed: The integration must not compromise Siri's responsiveness and speed.

- Minimizing battery drain: Efficient resource management is crucial to minimize battery drain on user devices.

Conclusion

The integration of large language models promises to significantly improve Siri's capabilities, addressing its current limitations and creating a more natural, intuitive, and personalized user experience. By focusing on enhanced natural language understanding, contextual awareness, and fluid conversations, Apple can propel Siri to the forefront of voice assistant technology. However, addressing privacy concerns, the substantial computational demands, and integration with the existing Siri infrastructure will be crucial for successful implementation. The future of Siri, and perhaps the future of voice assistants, hinges on the successful integration of LLMs.

Featured Posts

-

Nyt Mini Crossword Solutions March 16th 2025

May 21, 2025

Nyt Mini Crossword Solutions March 16th 2025

May 21, 2025 -

Love Monster Exploring The Different Interpretations

May 21, 2025

Love Monster Exploring The Different Interpretations

May 21, 2025 -

Descubre 5 Podcasts Que Te Atraparan Si Te Gusta El Misterio Y El Terror

May 21, 2025

Descubre 5 Podcasts Que Te Atraparan Si Te Gusta El Misterio Y El Terror

May 21, 2025 -

Britons Epic Australian Run Pain Flies And Controversy

May 21, 2025

Britons Epic Australian Run Pain Flies And Controversy

May 21, 2025 -

Superalimentos Para La Salud Descubre Por Que Este Supera Al Arandano En Beneficios

May 21, 2025

Superalimentos Para La Salud Descubre Por Que Este Supera Al Arandano En Beneficios

May 21, 2025

Latest Posts

-

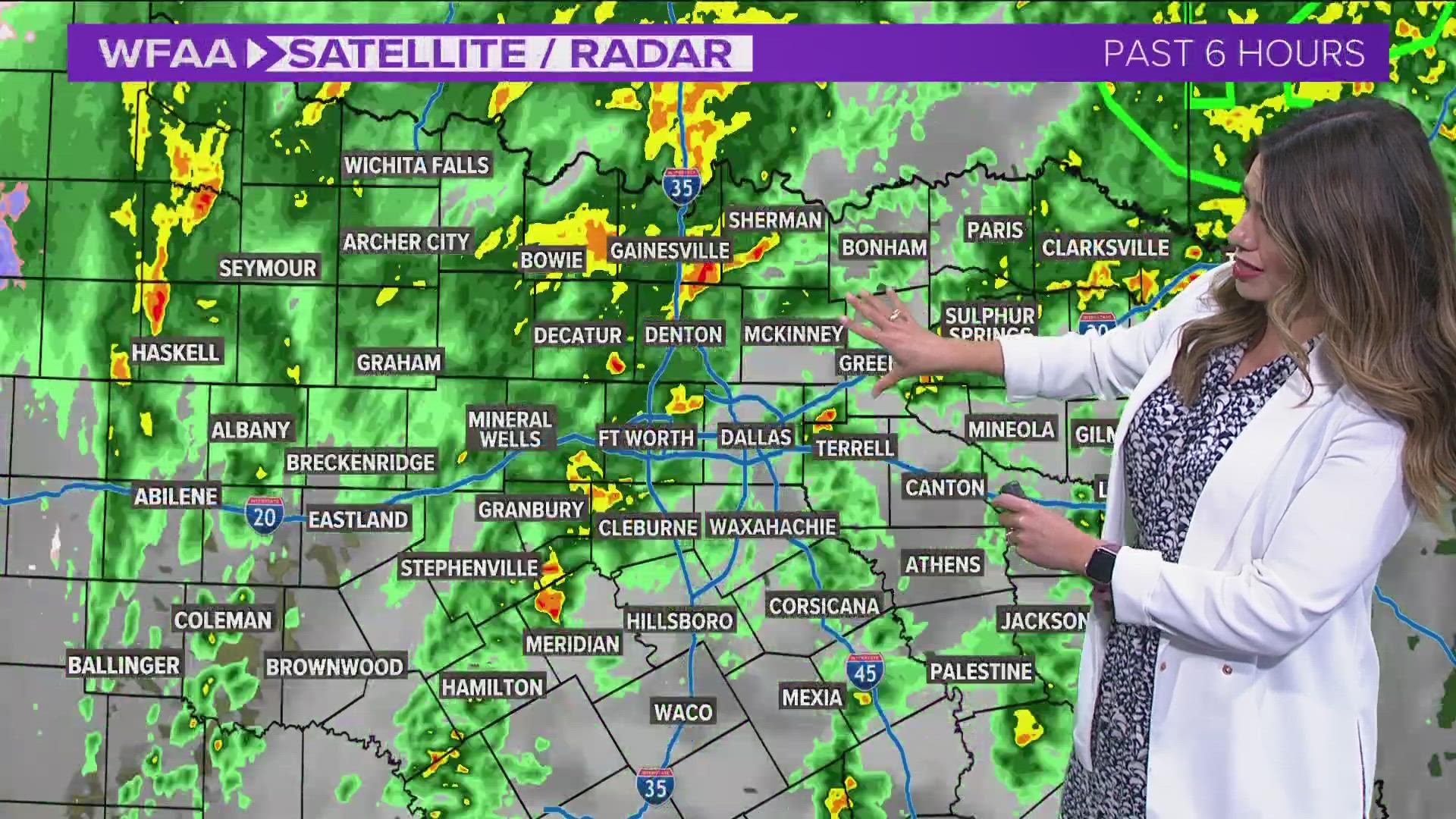

When Will It Rain Precise Timing And Chances Of Precipitation

May 21, 2025

When Will It Rain Precise Timing And Chances Of Precipitation

May 21, 2025 -

Checking For Rain Get The Latest Timing And Forecast Updates

May 21, 2025

Checking For Rain Get The Latest Timing And Forecast Updates

May 21, 2025 -

Current Rain Predictions Accurate Timing Of On And Off Showers

May 21, 2025

Current Rain Predictions Accurate Timing Of On And Off Showers

May 21, 2025 -

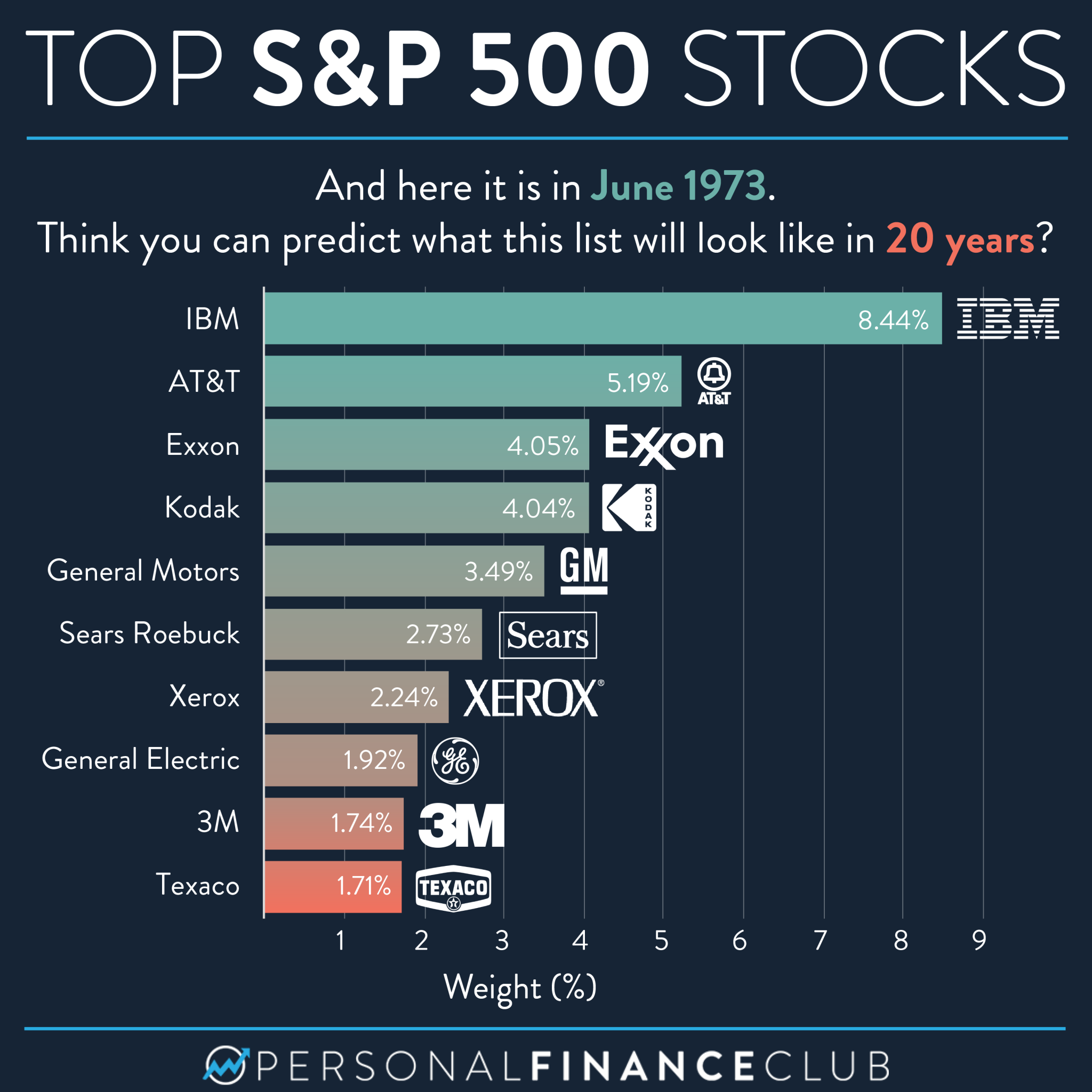

Reddits 12 Hottest Ai Stocks Should You Invest

May 21, 2025

Reddits 12 Hottest Ai Stocks Should You Invest

May 21, 2025 -

Big Bear Ai Stock Risks And Rewards For Investors

May 21, 2025

Big Bear Ai Stock Risks And Rewards For Investors

May 21, 2025