OpenAI Facing FTC Investigation: Understanding The Concerns

Data Privacy and Security Concerns in the OpenAI FTC Investigation

The OpenAI FTC investigation closely examines how OpenAI handles user data. Concerns center on the collection, storage, and potential misuse of that data. The FTC's focus is on whether OpenAI's practices comply with consumer protection and privacy law and respect user rights.

Unauthorized Data Collection and Use

OpenAI's data collection practices have come under fire. The company trains its AI models on vast datasets, including user inputs and interactions. This raises questions about:

- Consent: Was informed consent properly obtained for the use of this data in AI model training?

- Transparency: Is OpenAI transparent about what data is collected, how it's used, and with whom it's shared?

- Data Minimization: Does OpenAI collect only the data necessary for its stated purposes, or is excessive data being collected?

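The FTC's investigation will likely focus on whether OpenAI's data collection and consent practices are unfair or deceptive under Section 5 of the FTC Act, the statute the FTC enforces. State laws such as the California Consumer Privacy Act (CCPA) are enforced by California authorities rather than the FTC, but they set related expectations for transparency and user consent. A lack of transparency and potential misuse of data are key areas of concern within this OpenAI FTC investigation.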

Security Vulnerabilities and Data Breaches

The OpenAI FTC investigation also examines potential security vulnerabilities within OpenAI's systems. The massive datasets used to train AI models represent a significant target for cyberattacks. Potential vulnerabilities include:

- Data breaches: The risk of unauthorized access to sensitive user data is significant.

- System hacking: Compromised systems could lead to the manipulation of AI models or the theft of intellectual property.

- Lack of robust security measures: Insufficient security protocols could exacerbate the risks mentioned above.

The FTC's concern is whether OpenAI implements and maintains reasonable security measures to protect user data and prevent breaches. The investigation's outcome could have significant implications for data security standards across the AI industry.

Bias and Discrimination in AI Models: An OpenAI FTC Investigation Focus

Another significant aspect of the OpenAI FTC investigation is the potential for bias and discrimination in OpenAI's AI models. AI systems learn from their training data, and if that data reflects existing societal biases, the resulting models can perpetuate and even amplify those biases.

Algorithmic Bias and its Societal Impact

Bias in AI models can lead to unfair or discriminatory outcomes. Examples include:

- Racial bias: AI systems may unfairly target or disadvantage certain racial groups.

- Gender bias: AI models might perpetuate stereotypes and discriminate against individuals based on gender.

- Socioeconomic bias: AI systems could exacerbate existing socioeconomic inequalities.

The FTC is interested in ensuring fairness and equity in AI systems. The OpenAI FTC investigation aims to determine whether OpenAI is taking sufficient steps to mitigate bias in its AI models.

Mitigating Bias and Promoting Responsible AI Development

Mitigating bias in AI requires a multi-pronged approach, including:

- Data auditing: Carefully examining training data to identify and address biases.

- Algorithm transparency: Making the inner workings of AI models more transparent to allow for bias detection.

- Diverse development teams: Ensuring diverse perspectives are incorporated into the design and development process.

- Ongoing monitoring and evaluation: Continuously monitoring AI systems for bias and making adjustments as needed.

OpenAI's current efforts to address bias are under scrutiny within the OpenAI FTC investigation, and the outcome could influence how AI developers approach bias mitigation in the future.

The Potential for Misinformation and Malicious Use of OpenAI Technology

The OpenAI FTC investigation also considers the potential for OpenAI's technology to be misused for malicious purposes. The ability to generate realistic text, images, and other forms of media raises significant concerns.

Deepfakes and the Spread of Disinformation

OpenAI's technology can be used to create convincing deepfakes, leading to:

- Spread of misinformation: Deepfakes can be used to create fake news and propaganda.

- Damage to reputations: Individuals can be falsely implicated in events through manipulated media.

- Erosion of public trust: The proliferation of deepfakes can undermine trust in information sources.

The FTC is concerned about the potential harm caused by the malicious use of AI-generated content. The OpenAI FTC investigation is looking at measures OpenAI is taking to prevent this misuse.

Safeguards and Mitigation Strategies

Mitigating the risks associated with malicious use requires proactive measures, including:

- Detection technologies: Developing tools to identify and flag AI-generated content.

- Content moderation strategies: Implementing robust mechanisms to remove or flag harmful content.

- Collaboration with other organizations: Working with researchers, policymakers, and other stakeholders to address these challenges.

- Government regulation: Developing appropriate regulations to govern the development and use of AI technologies.

OpenAI's responsibility in developing robust safety mechanisms is a key focus of the OpenAI FTC investigation. The investigation underscores the importance of responsible innovation and ethical considerations in the development and deployment of AI technologies.

Conclusion

The OpenAI FTC investigation highlights the need for careful consideration of the societal impacts of powerful AI technologies. Data privacy, algorithmic bias, and the potential for malicious use are critical issues that require immediate attention. The investigation's outcome will likely shape the future of AI regulation and responsible development. Stay informed about ongoing developments in the investigation, and advocate for responsible AI development and regulation to help ensure a safer and more equitable AI future.

Featured Posts

-

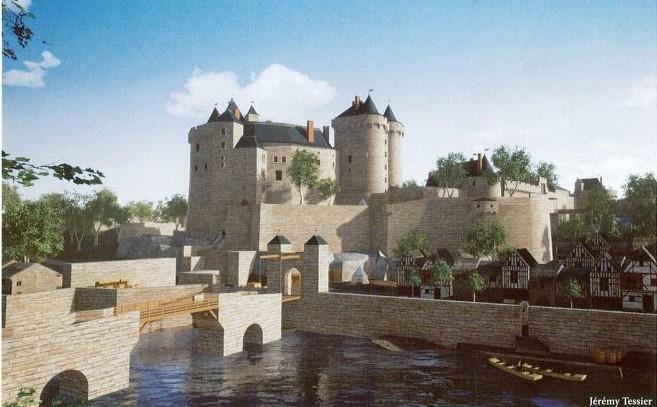

College De Clisson Le Port De La Croix Catholique Questionne

May 21, 2025

College De Clisson Le Port De La Croix Catholique Questionne

May 21, 2025 -

Colombian Models Murder And Mexican Influencers Killing A Wave Of Condemnation Against Femicide

May 21, 2025

Colombian Models Murder And Mexican Influencers Killing A Wave Of Condemnation Against Femicide

May 21, 2025 -

Resistance Grows Car Dealerships Oppose Mandatory Ev Sales

May 21, 2025

Resistance Grows Car Dealerships Oppose Mandatory Ev Sales

May 21, 2025 -

Fastest Crossing Of Australia On Foot A New Record

May 21, 2025

Fastest Crossing Of Australia On Foot A New Record

May 21, 2025 -

Its A Girl Peppa Pigs Family Grows

May 21, 2025

Its A Girl Peppa Pigs Family Grows

May 21, 2025

Latest Posts

-

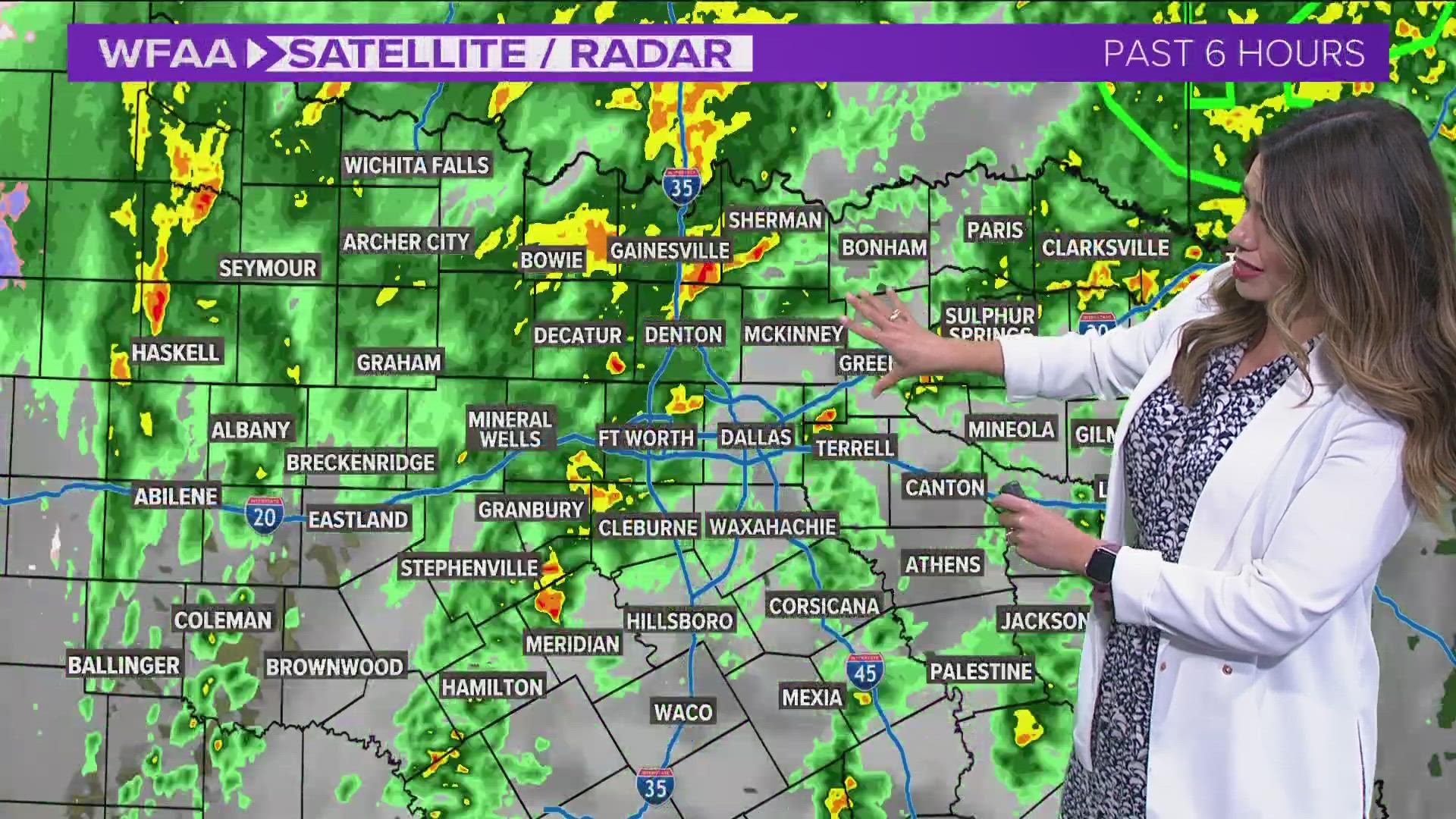

When Will It Rain Precise Timing And Chances Of Precipitation

May 21, 2025

When Will It Rain Precise Timing And Chances Of Precipitation

May 21, 2025 -

Checking For Rain Get The Latest Timing And Forecast Updates

May 21, 2025

Checking For Rain Get The Latest Timing And Forecast Updates

May 21, 2025 -

Current Rain Predictions Accurate Timing Of On And Off Showers

May 21, 2025

Current Rain Predictions Accurate Timing Of On And Off Showers

May 21, 2025 -

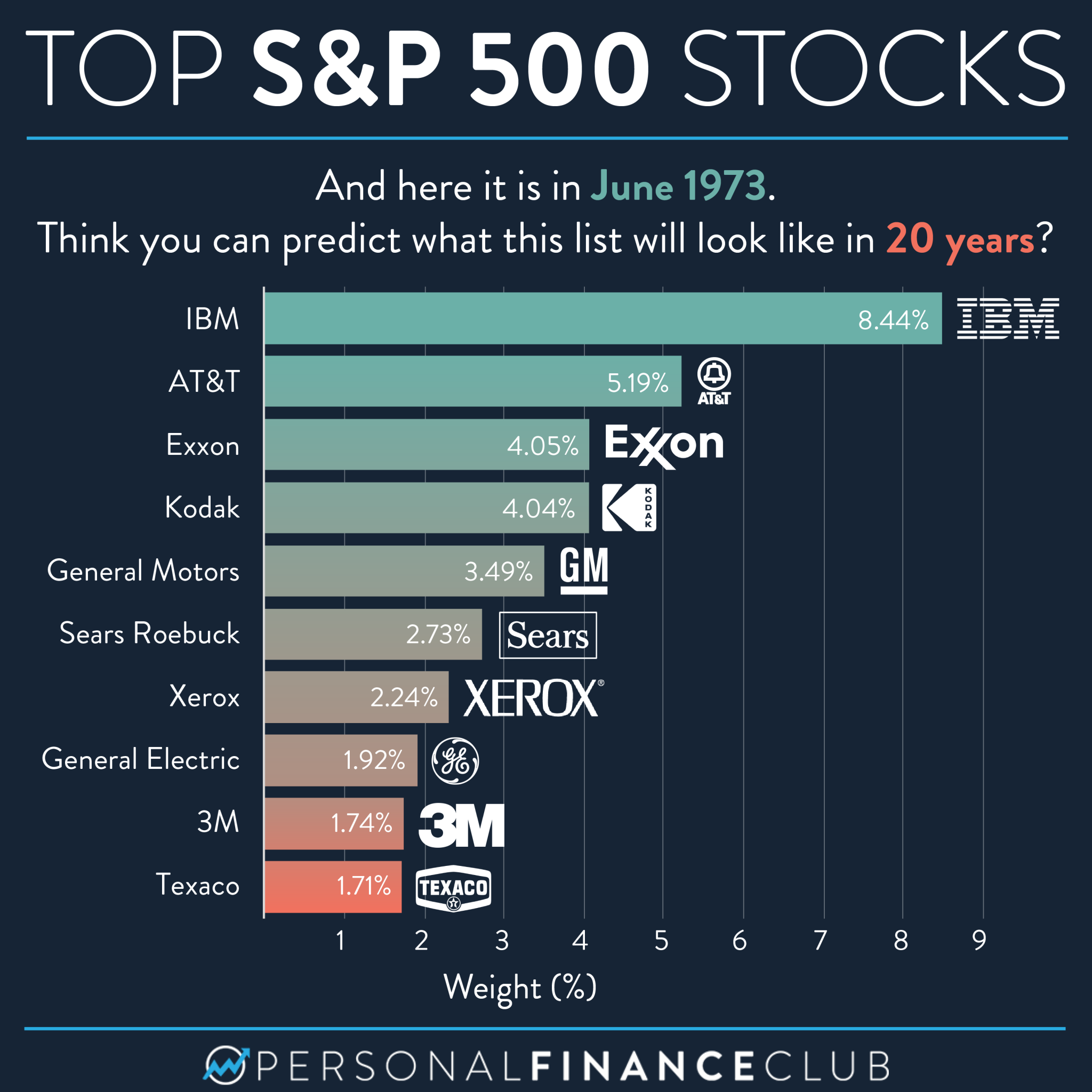

Reddits 12 Hottest Ai Stocks Should You Invest

May 21, 2025

Reddits 12 Hottest Ai Stocks Should You Invest

May 21, 2025 -

Big Bear Ai Stock Risks And Rewards For Investors

May 21, 2025

Big Bear Ai Stock Risks And Rewards For Investors

May 21, 2025